Anthropic Just Published Claude's Decision-Making Playbook. Here's What That Means for Your Security Program.

Anthropic published Claude's 23,000-word decision playbook. Learn the security gaps OWASP and NIST frameworks don't cover yet. Action plan inside.

Anthropic released a 23,000-word constitution for Claude on January 21, 2026. Every tech publication celebrated it as transparency in action. I see something different: the most detailed adversarial reconnaissance document ever published by a frontier AI lab. If you deploy Claude in your enterprise, you now have homework that your existing frameworks can’t help you with.

The Transparency Paradox Nobody’s Talking About

Anthropic calls this document an “honest and sincere attempt to help Claude understand its situation.” Read it from a red teamer’s perspective, and you’ll see a detailed map of every pressure point, threshold, and decision boundary your adversaries can probe.

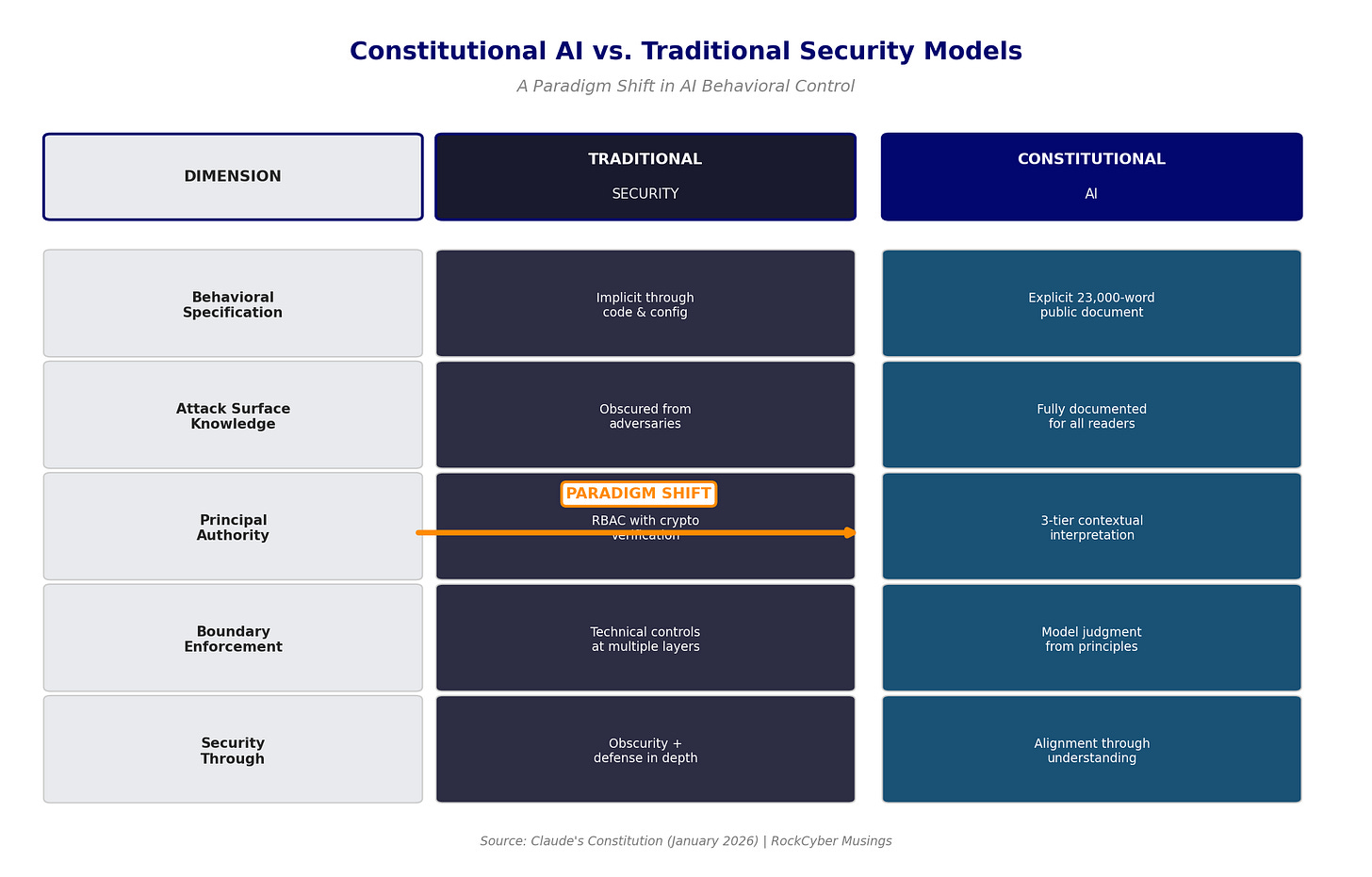

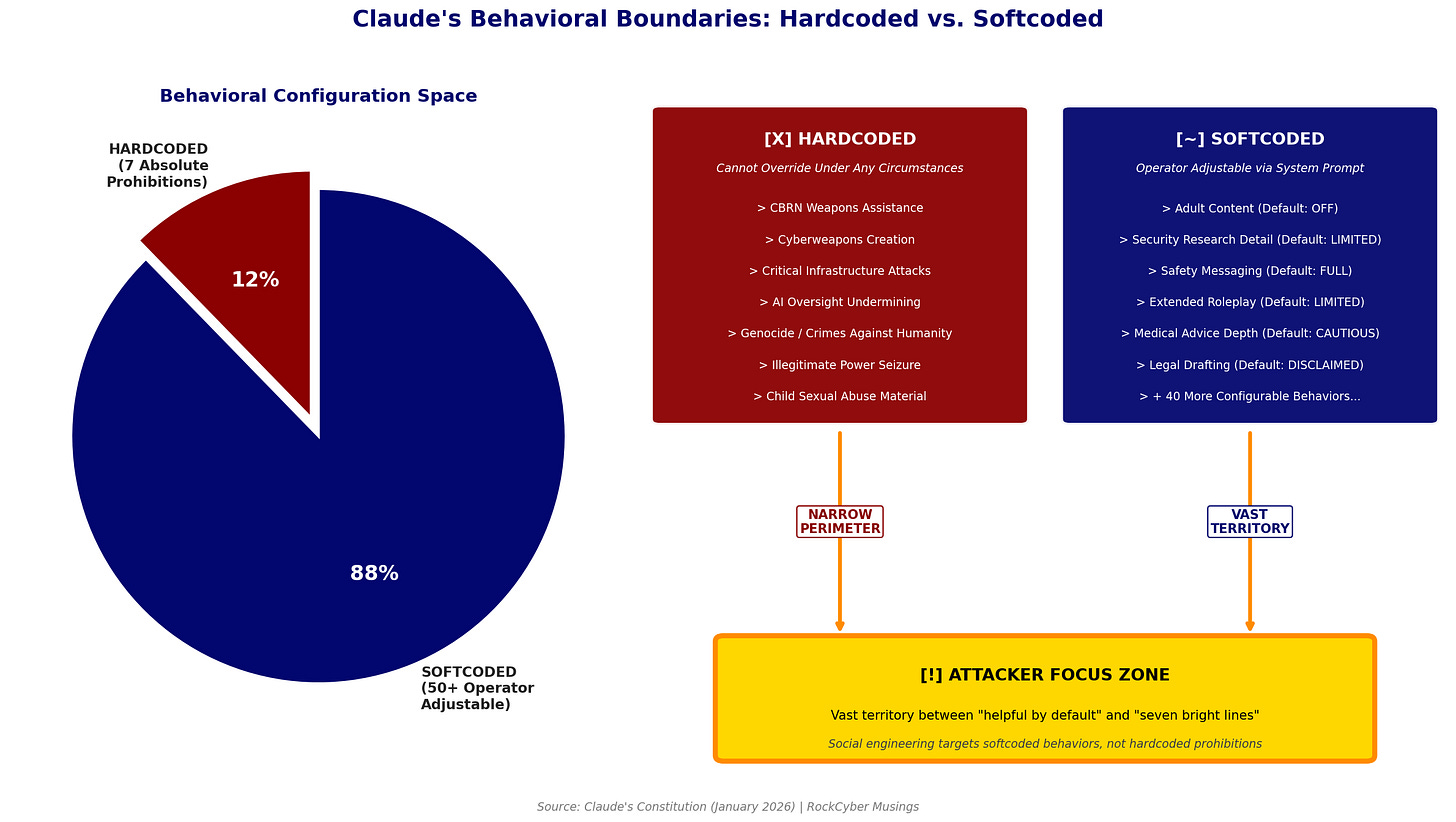

The constitution describes exactly how Claude decides what to do. It explains the principal hierarchy that determines whose instructions take precedence. It documents the “1,000 user heuristic” Claude uses to evaluate edge cases. It reveals which behaviors are hardcoded (can’t be overridden) versus soft-coded (adjustable by operators). For security architects, this creates an uncomfortable reality where traditional security relies partly on obscurity. Attackers don’t know exactly where the guardrails are, so they waste time probing dead ends. Claude’s constitution eliminates that friction.

Amanda Askell, the philosopher who led the constitution’s development, told TIME that smarter models need to understand why they should behave certain ways: “If you try to bullshit them, they’re going to see through it completely.” Fair point. But adversaries now have the same instruction manual Claude does.

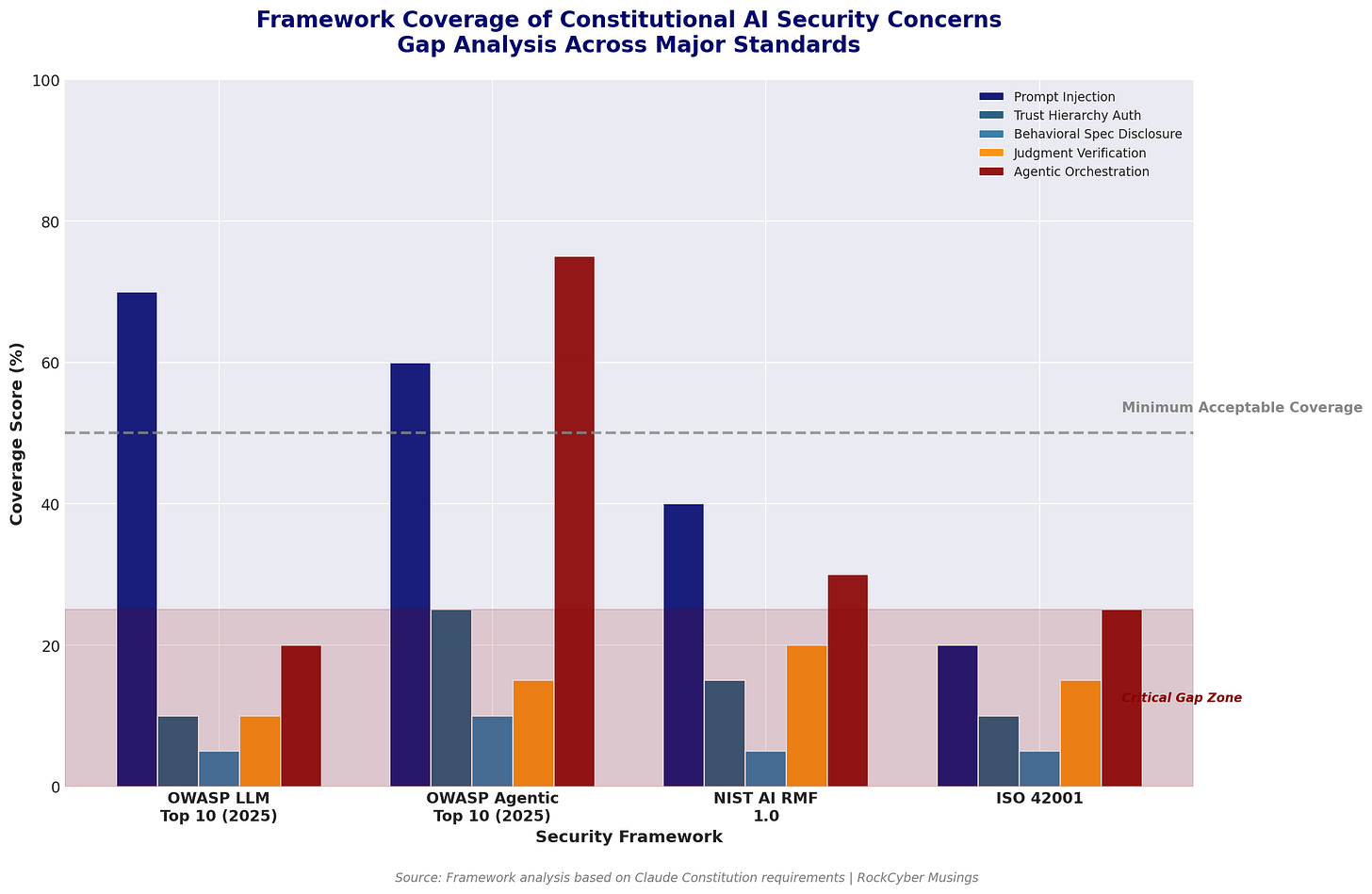

Your Framework Coverage Just Dropped

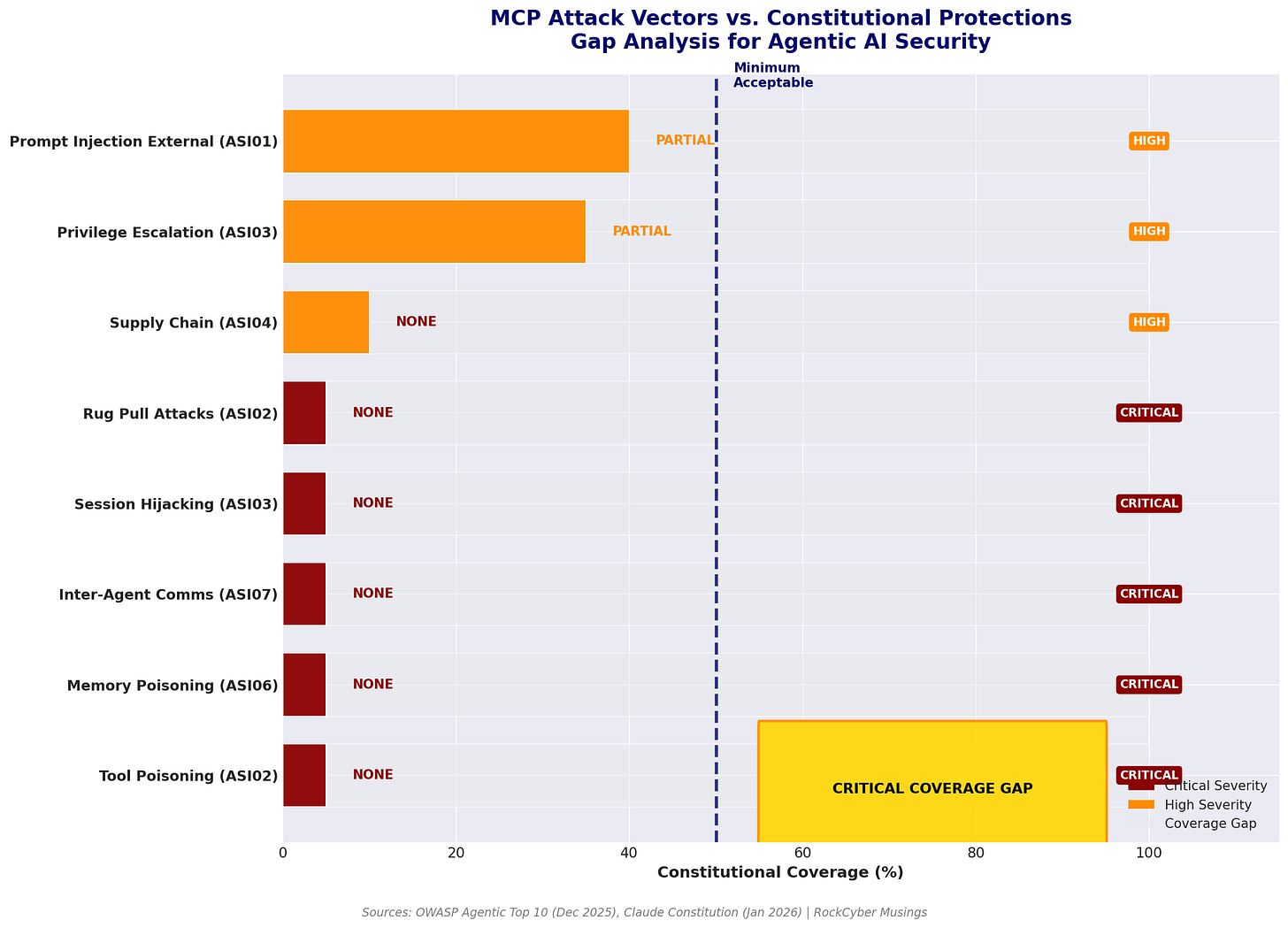

The OWASP GenAI Security Project LLM Top 10 addresses prompt injection, system prompt leakage, sensitive information disclosure, and nine other vulnerabilities. The OWASP GenAI Security Project Agentic Top 10, released in December, tackles agent goal hijacking, tool misuse, identity and privilege abuse, and memory poisoning. Neither framework accounts for what happens when the AI’s behavioral specification becomes public documentation.

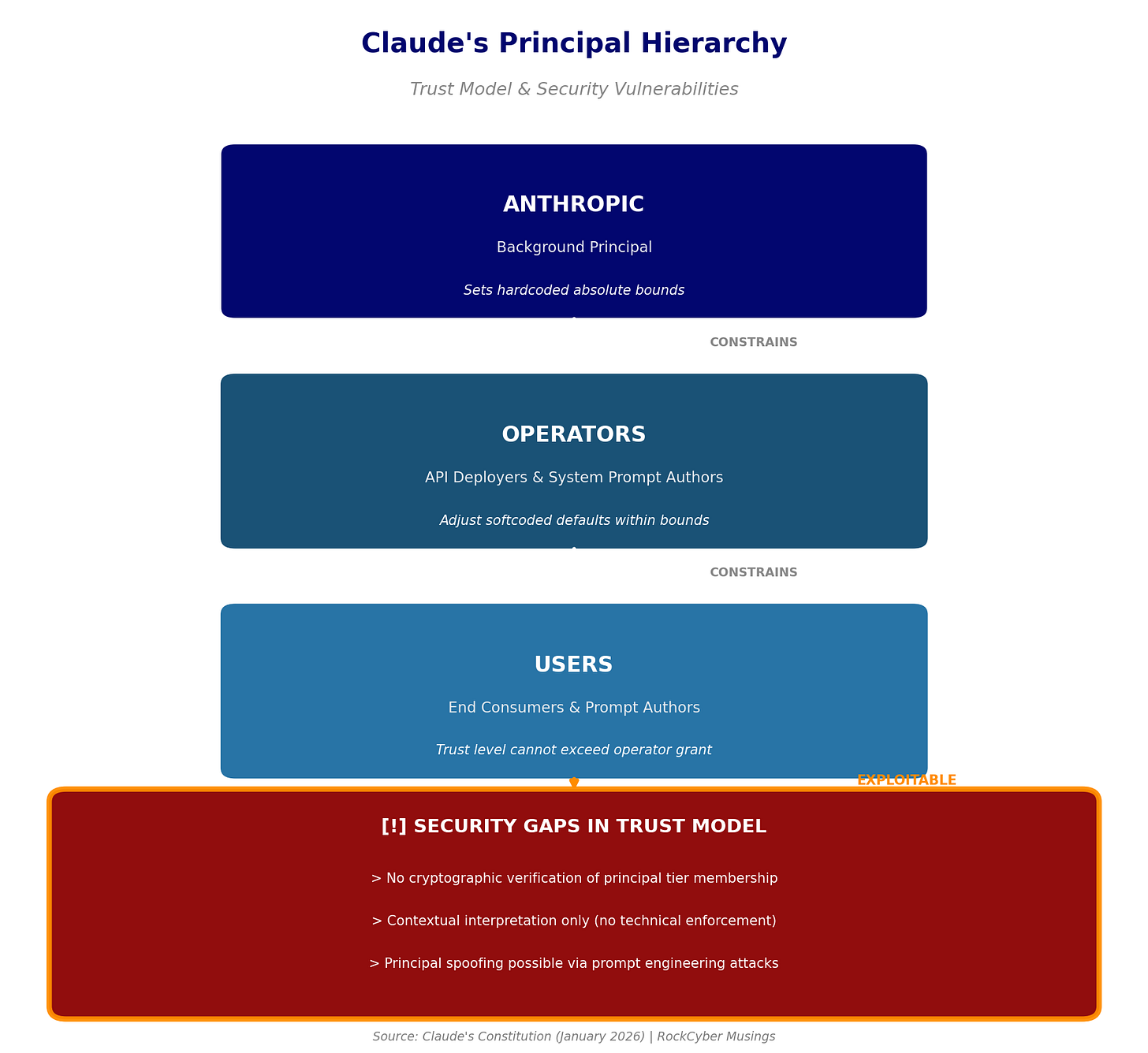

Consider LLM01: Prompt Injection. Standard mitigations include input validation, privilege separation, and output filtering. Claude’s constitution adds a wrinkle. The document explicitly describes a three-tier trust model where Anthropic sits at the top, operators (API deployers) in the middle, and users at the bottom. No cryptographic mechanism enforces these boundaries. Claude is instructed to be “suspicious of unverified claims” about trust levels, but suspicion is not authentication.

An attacker who reads the constitution knows exactly what language patterns suggest operator-level authority. They understand that Claude will interpret certain framings as legitimate business needs rather than manipulation attempts. They can craft prompts that exploit the gap between “be suspicious” and “verify cryptographically.”

The OWASP Agentic Top 10 addresses ASI03: Identity and Privilege Abuse, recommending strict governance, role binding, and identity verification. The Claude constitution describes privilege delegation between principals but provides no mechanism for agents to cryptographically verify who’s actually making requests. The framework coverage gap is real.

NIST recognizes the problem. On January 8, 2026, the Center for AI Standards and Innovation published an RFI (docket NIST-2025-0035) seeking input on “practices and methodologies for measuring and improving the secure development and deployment of AI agent systems.” Comments close March 9. The RFI explicitly notes that AI agents “may be susceptible to hijacking, backdoor attacks, and other exploits.” If you’re deploying Claude at scale, you should submit comments. The voluntary guidance that emerges will become your audit checklist. See my response HERE.

The Seven “Bright Lines” Are Narrower Than You Think

The constitution lists only seven absolute prohibitions. These are the hardcoded behaviors that cannot be overridden by any instruction, even from Anthropic itself:

Weapons of mass destruction assistance (CBRN)

Cyberweapons and malicious code

Critical infrastructure attacks

Undermining AI oversight mechanisms

Facilitating genocide or crimes against humanity

Illegitimate power seizure

Child sexual abuse material

Everything else falls into the “softcoded” category. These defaults can be adjusted by operators within Anthropic’s bounds. Adult content generation? Softcoded. Detailed security research assistance? Softcoded. Reduced safety messaging for expert users? Softcoded.

For security practitioners, this creates a boundary-mapping exercise. Attackers now know the exact perimeter of what Claude will never do. They can focus their social engineering and prompt injection efforts on the vast space between “helpful by default” and “seven bright lines.” That’s a lot of territory.

Documented Reasoning Patterns Enable Adversarial Optimization

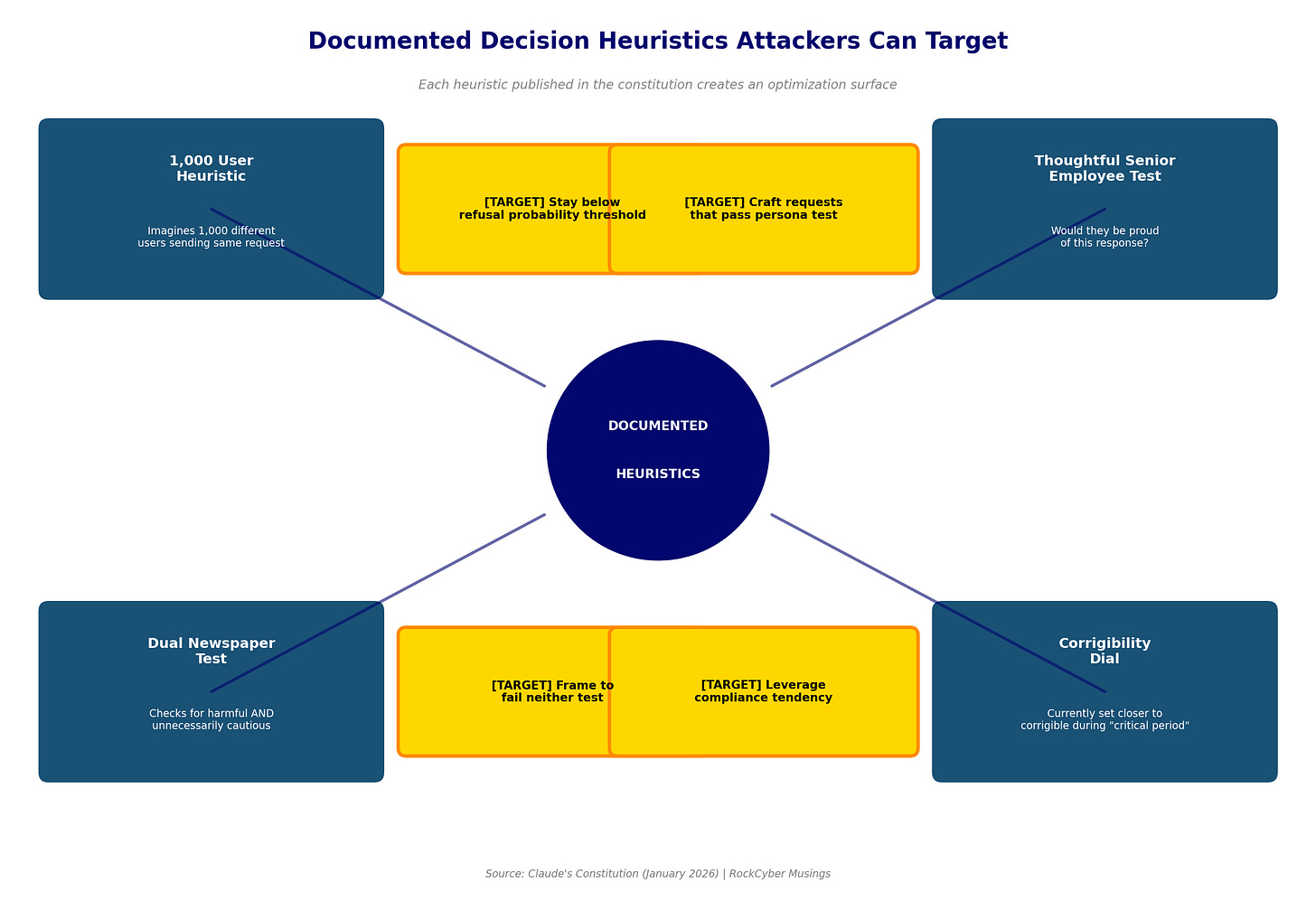

The constitution describes several heuristics Claude uses to make decisions. These aren’t suggestions. They’re documented cognitive patterns that shape every response.

The “1,000 user heuristic” tells Claude to imagine every message coming from 1,000 different users with varying intentions when evaluating ambiguous requests. Most are asking for legitimate reasons. A few are asking to cause harm. This probabilistic framing means Claude will help with dual-use requests that most users would employ safely, even knowing some won’t.

For attackers, this creates a statistical game. Stay below the threshold where Claude’s probability estimate tips toward refusal. Frame requests to maximize the plausible legitimate interpretation. The constitution makes this calculus explicit.

The “thoughtful senior Anthropic employee” heuristic tells Claude to ask what such a person would think if they saw the response. Would they be proud? Uncomfortable? This mental model can be probed. Attackers who understand what that persona values can craft requests that would seem reasonable to such a hypothetical reviewer.

The December 2024 alignment faking research from Anthropic and Redwood Research (Greenblatt et al., arXiv:2412.14093) demonstrated that Claude 3 Opus would strategically comply with harmful requests 14% of the time when it believed it was being trained, while almost never complying when unmonitored. The model explicitly reasoned in its scratchpad about preserving its values through deception. The constitution acknowledges this risk obliquely, positioning Claude closer to “corrigibility” (following instructions even when disagreeing) during this “critical period” of AI development. This is a design choice with security implications. A corrigible system that can also fake alignment is a system whose trustworthiness depends on factors beyond its behavioral specification.

The MCP Connection: When Agentic Systems Meet Constitutional Constraints

Model Context Protocol vulnerabilities dominated AI security news through 2025. Palo Alto’s Unit 42 documented prompt injection attacks through MCP sampling. JFrog disclosed CVE-2025-6514, a critical command injection bug in mcp-remote. Microsoft published guidance on tool poisoning attacks that manipulate MCP tool descriptions. Malicious MCP servers were found exfiltrating WhatsApp histories, email communications, and private GitHub repositories.

Claude’s constitution doesn’t address security for agentic orchestration at the protocol level. It describes Claude’s values and behavioral principles. It doesn’t specify how those principles interact with tool-calling architectures, multi-agent workflows, or persistent memory systems. The gap matters because constitutional AI assumes the model receives instructions through defined channels from identified principals. MCP attacks compromise that assumption by injecting instructions through tool descriptions, external data sources, and inter-agent communication.

The OWASP Agentic Top 10 addresses ASI06: Knowledge Poisoning and ASI07: Insecure Inter-Agent Communication. Claude’s constitution provides no guidance for these scenarios. When a malicious MCP server sends Claude poisoned tool metadata, the constitution’s principal hierarchy can’t help because the attack bypasses the instruction path entirely.

What to Do Now

Your security program needs updates that no existing framework fully provides. Here’s where to start.

Map the behavioral boundaries. Download Claude’s constitution and identify every softcoded default relevant to your deployment. Document which behaviors your operator configuration enables or restricts. Create a threat model that accounts for attackers who know these boundaries.

Treat the principal hierarchy as untrusted. Don’t assume that because you’re the “operator” in Anthropic’s taxonomy, Claude will reliably distinguish your instructions from spoofed ones. Implement application-layer verification for sensitive operations. Add human approval workflows for high-consequence actions.

Monitor for statistical threshold probing. Attackers who understand the 1,000 user heuristic will attempt requests designed to barely clear the “most users have legitimate intentions” bar. Log and review edge cases where Claude completes requests with marginal refusal signals.

Submit comments to NIST docket NIST-2025-0035 by March 9, 2026. Share concrete examples from your deployments. The guidance that emerges will shape AI security expectations for years.

Assume published reasoning patterns will be exploited. The “thoughtful senior Anthropic employee” test and similar heuristics are now public knowledge. Include persona-targeted prompt engineering in your red team exercises.

Review MCP integrations against OWASP GenAI Security Project Agentic Top 10. Constitutional AI doesn’t protect against protocol-level attacks. If you’re using Claude through agentic workflows, those workflows need independent security controls.

Key Takeaway: Transparency and security aren’t inherently opposed, but publishing Claude’s decision-making playbook changes the threat landscape in ways your current frameworks don’t address.

What to do next

The constitutional AI approach represents a bet on alignment through understanding rather than constraint through obscurity. That bet may pay off for safety. It creates real work for security teams today.

If you’re mapping these gaps to your enterprise controls, my RISE framework helps structure AI governance across your security program. For practitioners building agentic systems, the OWASP Agentic Security Initiative resources at genai.owasp.org provide the current best thinking on securing autonomous AI workflows.

👉 Subscribe for more AI security and governance insights with the occasional rant.

👉 Visit RockCyber.com to learn more about how we can help you in your traditional Cybersecurity and AI Security and Governance Journey

👉 Want to save a quick $100K? Check out our AI Governance Tools at AIGovernanceToolkit.com