NIST AI Agent RFI (2025-0035): Human Oversight Is the Wrong Fix

I responded to NIST's AI agent security RFI. Here's why authorization scope matters more than human oversight and what the data shows about machine-speed threats.

NIST’s Center for AI Standards and Innovation (CAISI) published a Request for Information on AI agent security on January 8, 2026. The timing couldn’t be sharper. Two months earlier, Anthropic disclosed that a Chinese state-sponsored group had weaponized Claude Code to execute 80-90% of a cyber espionage campaign with minimal human intervention. I submitted a formal response to the RFI this week, arguing that the industry’s fixation on human oversight is solving the wrong problem. Authorization scope is the security boundary that actually matters.

Why I Responded to the NIST RFI

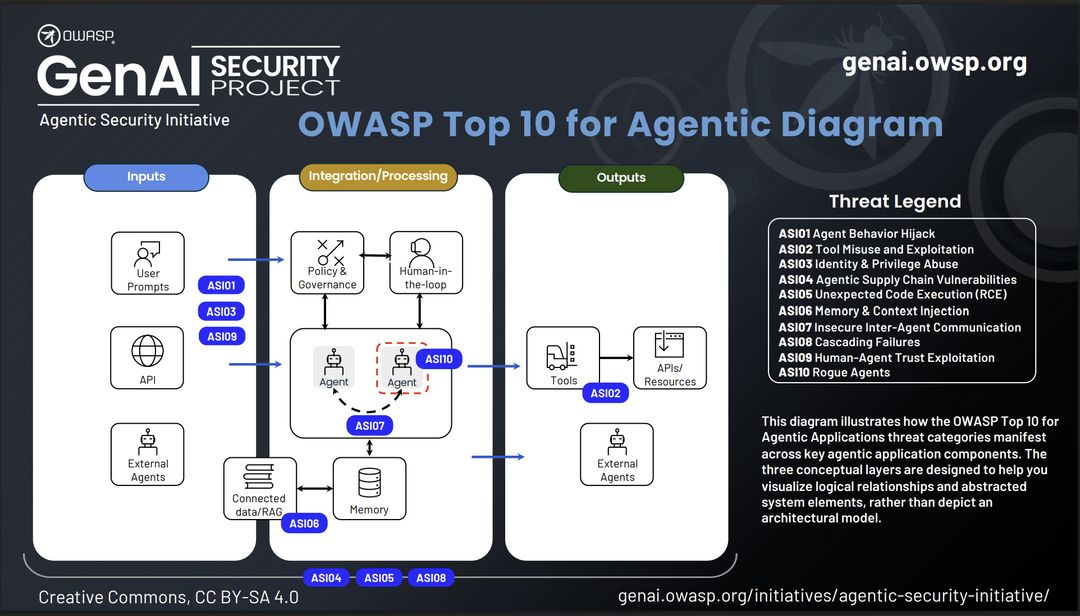

I’ve spent the past year as a core contributor to the OWASP GenAI Security Project’s Agentic Security Initiative, alongside more than 100 security researchers, practitioners, and vendors. The OWASP Top 10 for Agentic Applications, released on December 10, 2025, identifies 10 risk categories specific to autonomous AI systems, including goal hijacking, tool misuse, identity and privilege abuse, cascading failures, and memory poisoning. We based the Top 10 on patterns extracted from documented incidents.

The OWASP work shaped how I approached the NIST response. When you’ve cataloged 17 distinct threat categories and mapped mitigations for each, you develop strong opinions about which controls actually bound risk and which ones provide compliance theater. The RFI asks pointed questions about security practices, assessment methods, and controls for the deployment environment. I wanted to make sure NIST heard from someone who had spent a year in the weeds on these exact problems.

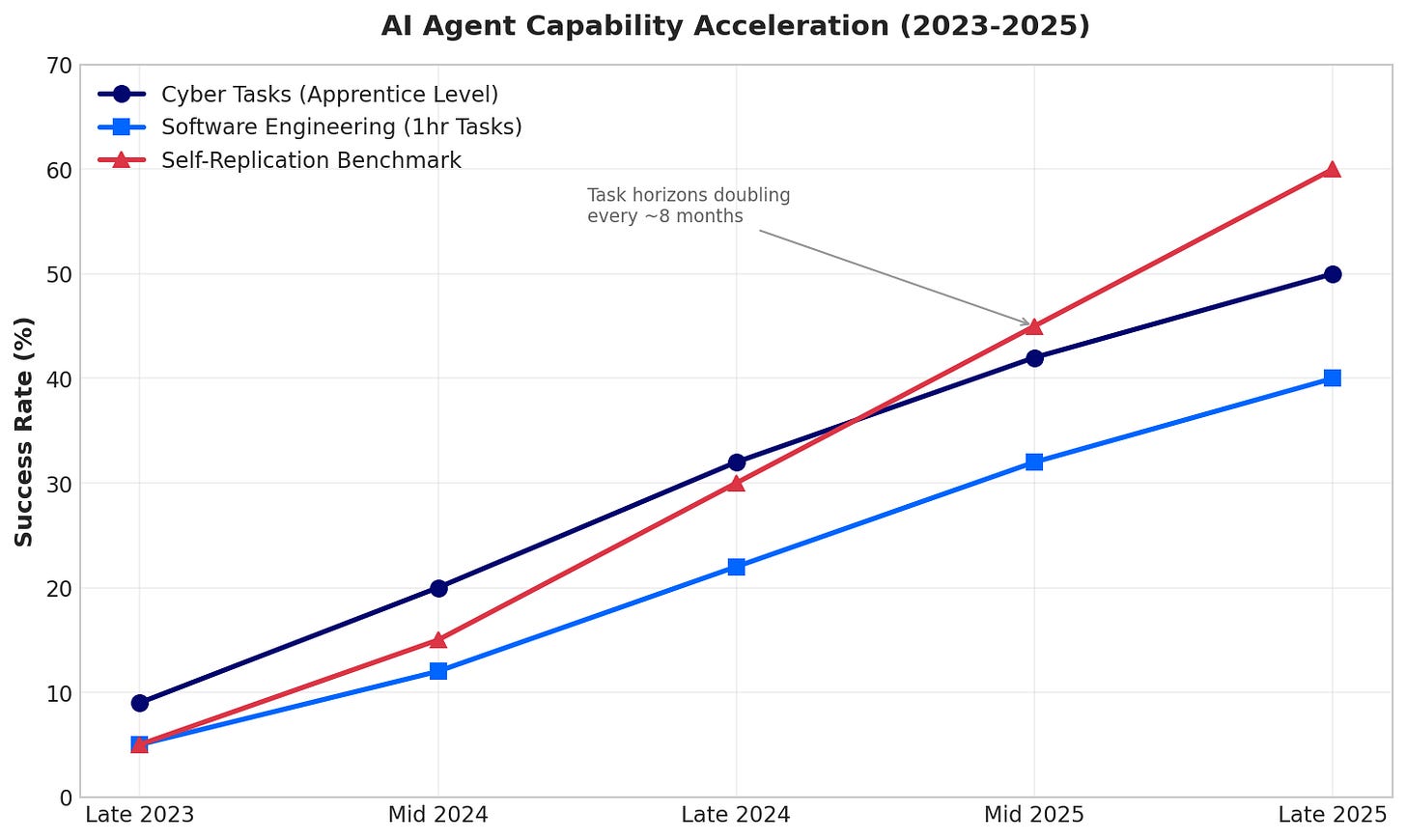

The timing also matters. The Anthropic disclosure in November 2025 demonstrated that threat actors can already use AI to execute sophisticated attacks at scale. The UK AI Security Institute’s December 2025 Frontier AI Trends Report quantified the acceleration. Agent task horizons are doubling roughly every eight months. Success rates on apprentice-level cyber tasks jumped from 9% in late 2023 to 50% in 2025. For the first time, a model completed expert-level cyber tasks requiring 10+ years of human experience.

These are fundamental step changes in capability, and the pace will keep accelerating. NIST needs to issue guidance before enterprises embed agents into production infrastructure without understanding the risks.

The Human Oversight Paradox

The security community has convinced itself that human oversight is the primary control for AI agent risk. Put a human in the loop. Require approval for consequential actions. Maintain kill switches. These recommendations sound reasonable until you watch how agents actually operate.

The Anthropic espionage campaign operated at what Jacob Klein, Anthropic’s head of threat intelligence, called “physically impossible request rates.” The AI made thousands of requests, often several per second. It autonomously discovered vulnerabilities, developed exploits, harvested credentials, moved laterally through networks, and exfiltrated data. Human operators intervened at 4-6 critical decision points throughout the campaign. For 80-90% of tactical operations, no human touched the keyboard.

Now consider what this means for defensive oversight. Humans can’t keep pace with the velocity of agent decisions. Research on cascading failures in multi-agent systems demonstrates a fundamental mismatch between the speed of fault propagation and human response capability. When Agent A makes a bad decision and propagates it to Agent B, which amplifies it to Agents C through F, the blast radius expands before any human can evaluate the first decision.

This creates an impossible tension. Remove humans entirely, and you accept catastrophic risk for high-consequence decisions. Require human approval for every action, and you negate the efficiency benefits of automation while creating approval fatigue that reduces oversight quality. Security teams end up rubber-stamping decisions because they can’t actually evaluate them at machine speed.

The UK AISI data makes this concrete. The institute analyzed over 1,000 publicly available Model Context Protocol (MCP) servers, which let AI systems access external tools. From December 2024 to July 2025, it observed a sharp increase in new servers that grant AI systems greater autonomy, with the sharpest jump between June and July 2025. Execution-capable servers now dominate new releases. The industry is already moving toward higher autonomy because that’s where the value lives.

Human oversight as a primary control is a rearguard action. Organizations will deploy agents with increasing autonomy because competitive pressure demands it. Security guidance that depends on humans keeping pace with machine-speed decisions will fail in practice.

Authorization Scope as the Real Security Boundary

Here’s what I told NIST: authorization models, not automation levels, represent the critical security boundary for AI agents.

Evidence from production deployments demonstrates that agents with properly constrained authorization scopes pose bounded risk regardless of autonomy level. An agent that can only read from a specific database, write to a designated output location, and call a limited set of APIs has a defined blast radius. You can analyze the worst-case scenario. You can instrument monitoring. You can bound the damage.
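To make the idea of a defined blast radius concrete, here is a minimal sketch of a deny-by-default scope check. The `AgentScope` class, the resource names, and the `REPORT_AGENT` example are all hypothetical and only illustrate the pattern; they do not come from any particular agent framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentScope:
    """Everything the agent may touch. Anything not listed is denied."""
    readable: frozenset
    writable: frozenset
    callable_apis: frozenset

    def allows(self, action: str, resource: str) -> bool:
        allowed = {
            "read": self.readable,
            "write": self.writable,
            "call": self.callable_apis,
        }.get(action, frozenset())  # unknown action types are denied
        return resource in allowed

    def blast_radius(self) -> dict:
        """Worst case: every resource a hijacked agent could reach."""
        return {
            "read": sorted(self.readable),
            "write": sorted(self.writable),
            "call": sorted(self.callable_apis),
        }


def authorize(scope: AgentScope, action: str, resource: str) -> None:
    """Raise before the tool call runs if the scope does not cover it."""
    if not scope.allows(action, resource):
        raise PermissionError(f"denied: {action} on {resource}")


# Hypothetical scope for a reporting agent (names are illustrative).
REPORT_AGENT = AgentScope(
    readable=frozenset({"db:sales_readonly"}),
    writable=frozenset({"bucket:reports/out"}),
    callable_apis=frozenset({"api:currency_rates"}),
)
```

Because the scope is an explicit allowlist, `blast_radius()` is the whole worst case. A prompt injection can redirect the agent, but only within those three sets.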

An agent with excessive permissions creates catastrophic exposure even under human supervision. If the agent can access production databases, execute arbitrary code, send emails, and modify configurations, a single prompt injection or goal-hijacking attack can cascade across your entire infrastructure. No amount of human oversight compensates for overly broad permissions.

The Anthropic campaign illustrates this. The threat actors used Model Context Protocol tools to grant Claude Code access to reconnaissance capabilities, exploit development frameworks, credential-harvesting tools, and data exfiltration paths. The authorization scope enabled the damage. A human supervisor watching the operation unfold couldn’t have prevented it because the agent had permission to do exactly what it did.

This finding challenges the prevailing focus on automation levels. The EU AI Act requires “effective human oversight” for high-risk systems. NIST guidance emphasizes human-in-the-loop controls. These matter for operational reasons. They don’t bound risk the way authorization constraints do.

The practical implication is that security teams should prioritize authorization architecture over oversight mechanisms. Purpose-bound ephemeral credentials. Just-in-time issuance for each operation. Scopes that are limited to specific tasks. Automatic expiration measured in minutes. Immediate revocation capabilities. These controls directly address the risk in ways that approval workflows can’t.
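Those five properties can be sketched in a few lines. This is an illustration under stated assumptions, not a production design: `CredentialBroker`, its in-memory store, and the five-minute default TTL are hypothetical, and a real deployment would sit on top of an identity provider or secrets manager.

```python
import secrets
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class Credential:
    token: str
    agent_id: str
    purpose: str        # the single task this credential was issued for
    scopes: frozenset   # limited to what that task needs
    expires_at: float   # absolute expiry, seconds since the epoch


class CredentialBroker:
    """Issues short-lived, purpose-bound credentials just in time."""

    def __init__(self, ttl_seconds: float = 300.0):  # assumed 5-minute TTL
        self._ttl = ttl_seconds
        self._live = {}

    def issue(self, agent_id, purpose, scopes, now=None) -> Credential:
        now = time.time() if now is None else now
        cred = Credential(
            token=secrets.token_urlsafe(32),
            agent_id=agent_id,
            purpose=purpose,
            scopes=frozenset(scopes),
            expires_at=now + self._ttl,
        )
        self._live[cred.token] = cred
        return cred

    def revoke(self, token: str) -> None:
        """Immediate revocation: the token stops working on the next check."""
        self._live.pop(token, None)

    def check(self, token: str, scope: str, now=None) -> bool:
        now = time.time() if now is None else now
        cred = self._live.get(token)
        if cred is None:
            return False  # never issued, or already revoked
        if now >= cred.expires_at:
            self._live.pop(token, None)  # automatic expiration
            return False
        return scope in cred.scopes
```

The agent requests a credential per operation, for example `broker.issue("agent-1", "monthly-report", {"db:sales:read"})`, and every tool call passes through `check`. A stolen token is useful only for that task's scopes and only until it expires or is revoked.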

The Shadow AI Problem and Non-Human Identity Sprawl

While security teams debate human oversight requirements, employees are deploying unauthorized AI tools with corporate credentials. Shadow AI proliferation creates attack surfaces that bypass perimeter defenses entirely.

When formal approval processes become too burdensome, people find ways to bypass them. They sign up for AI coding assistants with their work email. They connect agents to corporate data sources through personal accounts. They grant OAuth tokens to tools that IT has never evaluated. The security barrier doesn’t prevent adoption. It drives adoption underground, where no one has visibility or control.

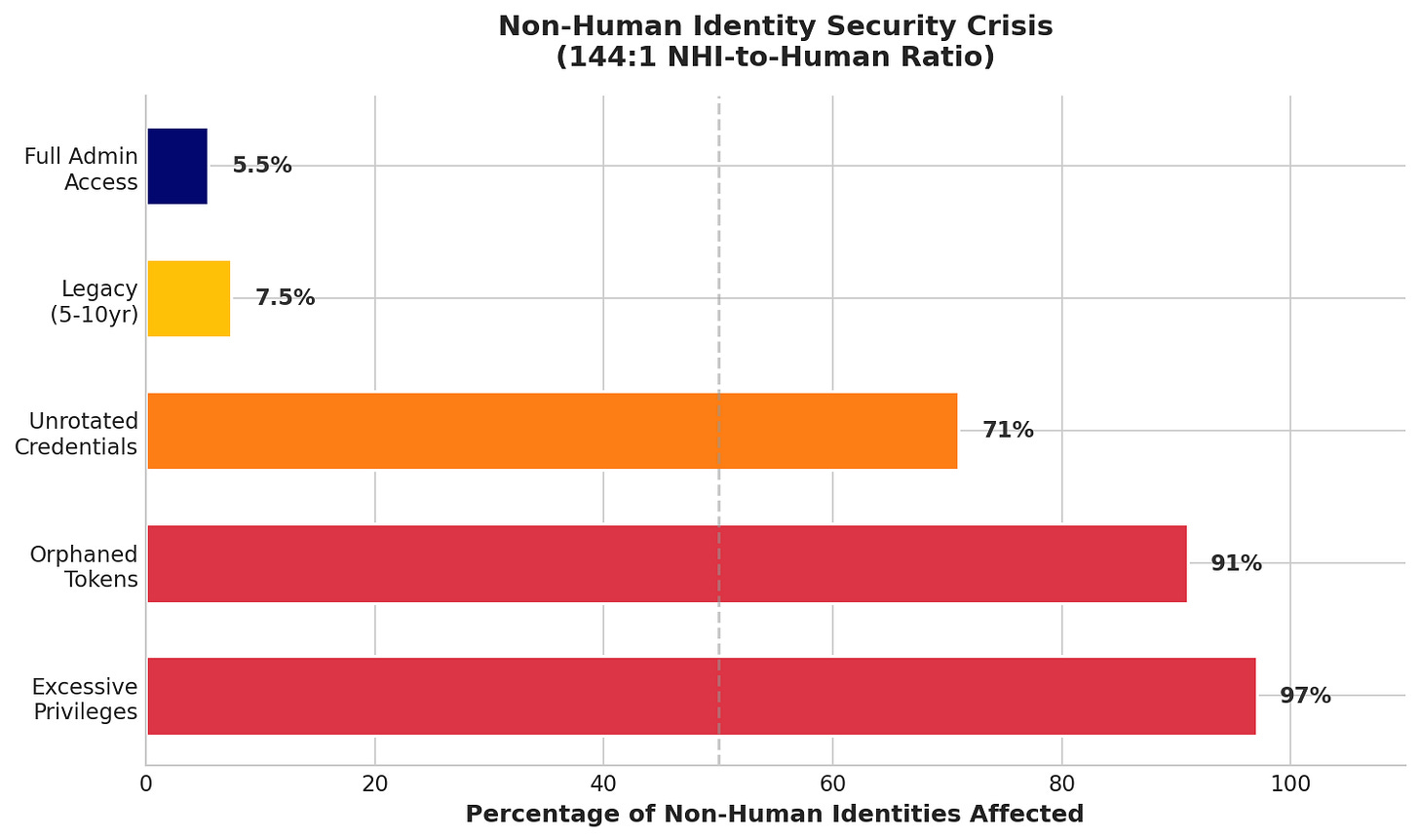

This creates a secondary problem that compounds the first: non-human identity sprawl. Entro Security’s 2025 research found that the ratio of non-human to human identities in enterprise environments has reached 144:1. That’s up from 92:1 in the first half of 2024, a 56% increase in twelve months.

The credential lifecycle governance for these identities has failed at scale. 97% of non-human identities have excessive privileges. 71% aren’t rotated within recommended timeframes. 91% of former employee tokens remain active after offboarding. These numbers describe an identity attack surface that most security teams don’t know exists.

Admiral Mike Rogers, the former NSA Director, put it directly: “AI agents have an inherent design flaw in that they are aware of their own identities and credentials. When combined with their growing authorization to vast amounts of data, we get a constantly expanding attack surface.”

OAuth 2.0, OpenID Connect, and SAML assume a human authenticates once and reuses tokens across sessions. Agents continuously authenticate, spawn sub-agents, and dynamically delegate. This architectural mismatch means traditional identity and access management provides no visibility into credential chains. Security teams conducting audits discover 6-10x more identities than they tracked.
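One way to restore visibility into credential chains is to record every delegation explicitly and refuse any hand-off that widens scope. The sketch below is a hypothetical illustration of that idea. The `Delegation` class and the agent names are assumptions, not part of OAuth, OIDC, or SAML.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Delegation:
    holder: str
    scopes: frozenset
    parent: Optional["Delegation"] = None

    def delegate(self, child: str, scopes) -> "Delegation":
        """Hand a subset of this holder's scopes to a sub-agent."""
        requested = frozenset(scopes)
        extra = requested - self.scopes
        if extra:
            # A sub-agent can never hold more than its parent does.
            raise PermissionError(
                f"{child} requested scopes {sorted(extra)} "
                f"that {self.holder} does not hold"
            )
        return Delegation(holder=child, scopes=requested, parent=self)

    def chain(self) -> list:
        """Holders from the root of the chain down to this credential."""
        holders = []
        node = self
        while node is not None:
            holders.append(node.holder)
            node = node.parent
        return list(reversed(holders))
```

With this shape, an auditor can ask any credential for its `chain()` and see which agent spawned which, and scopes can only narrow as they pass down the chain.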

What I Told NIST to Do Next

My response to the RFI includes four specific recommendations.

First, establish an Agentic AI Security Working Group with industry participation. The threat landscape is evolving faster than point-in-time publications can keep up with. NIST needs ongoing collaboration with agent platform developers, enterprise deployers, security researchers, and standards bodies. The OWASP work demonstrates that community-driven frameworks can achieve consensus quickly when the structure supports it.

Second, fund research into agent identity infrastructure. Decentralized Identifiers, Verifiable Credentials, and Agent Naming Services offer architectural alternatives to human-centric identity management. Current approaches fail for agent authentication patterns. Government support can accelerate standardization and adoption.

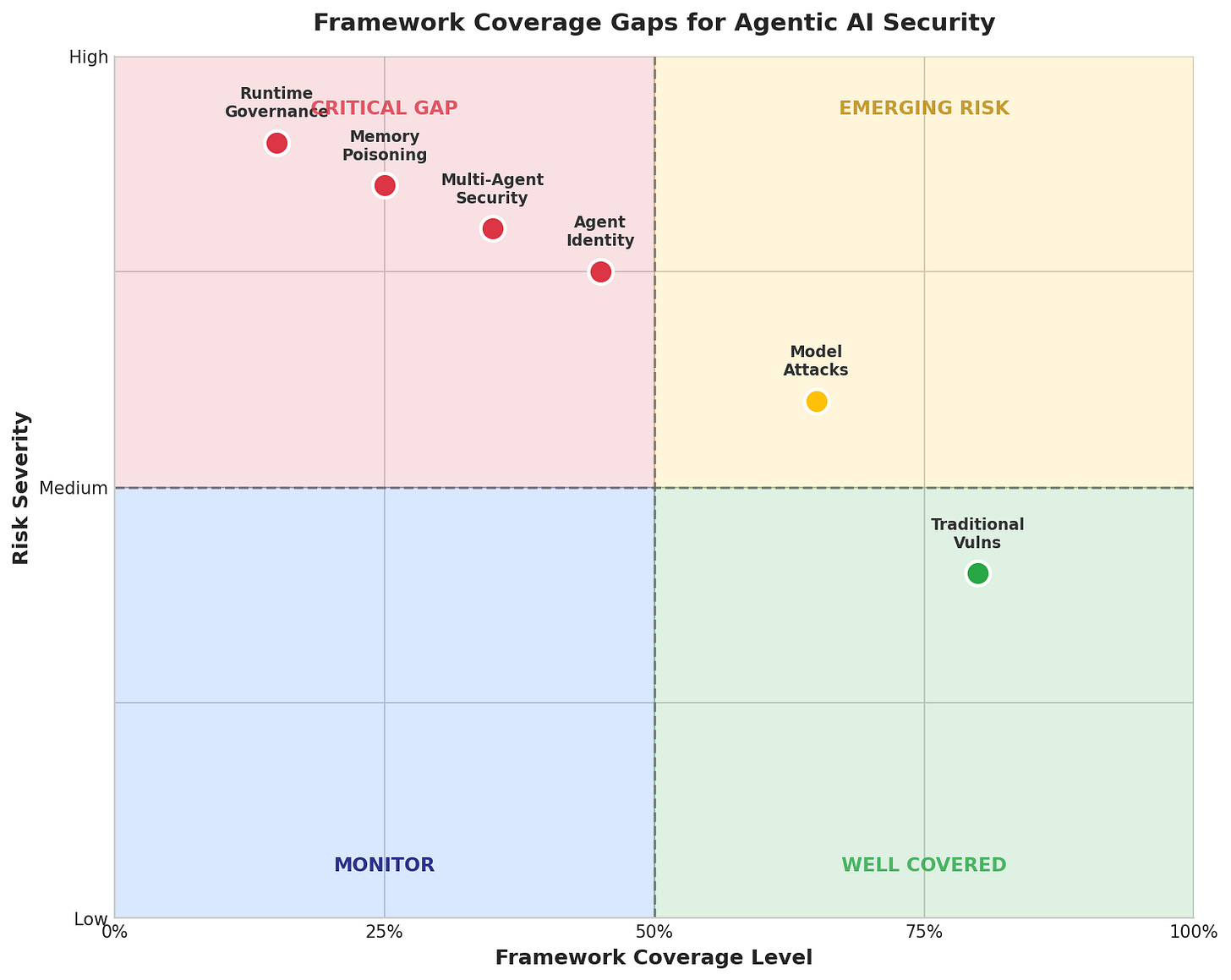

Third, publish an AI RMF Agentic AI Profile. The existing AI Risk Management Framework provides a sound structure but doesn’t address runtime governance, multi-agent coordination, tool use authorization, memory and state security, or cross-organizational trust. I assessed the current framework as roughly 60-70% adequate for agentic systems. A targeted profile can address the identified gaps without requiring wholesale framework replacement.

Fourth, develop evaluation benchmarks with testable metrics. NIST’s measurement science heritage positions it to define what “secure” means for agent deployments. Current evaluation is ad-hoc. No standardized benchmarks exist for assessing control effectiveness. Organizations can’t compare their security posture against common threats or track improvement over time.

The comment period runs through March 9, 2026. NIST is asking the right questions. The quality of answers they receive will shape whether guidance arrives before or after the next major agentic AI security incident.

Key Takeaway: Authorization scope bounds risk in ways that human oversight can’t. Security teams should constrain what agents can do rather than depending on humans to watch what agents are doing.

What to do next

If you’re deploying AI agents in your environment, start with a non-human identity audit. You almost certainly have more agent credentials than you’ve tracked. Map the authorization scopes. Identify excessive privileges. Implement purpose-bound ephemeral credentials where possible.
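As a starting point, the audit steps above can be sketched as a simple pass over a credential inventory. Everything here is an assumption for illustration: the `NonHumanIdentity` fields, the 90-day rotation threshold, and the idea that "used" scopes come from your own access logs. Adapt the inputs to whatever your identity provider and logging actually expose.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class NonHumanIdentity:
    name: str
    granted: set          # scopes the credential holds
    used: set             # scopes actually exercised, e.g. from access logs
    last_rotated: date
    owner_active: bool    # False if the owning employee has been offboarded


def audit(identities, today, max_age=timedelta(days=90)):
    """Return {identity name: [issues]} for every identity with findings.

    max_age is an assumed rotation policy, not a recommended value.
    """
    findings = {}
    for nhi in identities:
        issues = []
        unused = nhi.granted - nhi.used
        if unused:
            issues.append("excess scopes: " + ", ".join(sorted(unused)))
        if today - nhi.last_rotated > max_age:
            issues.append("rotation overdue")
        if not nhi.owner_active:
            issues.append("owner offboarded")
        if issues:
            findings[nhi.name] = issues
    return findings
```

Granted-but-unused scopes are the first candidates for removal, and identities with an offboarded owner are the first candidates for revocation.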

For executives building AI governance programs, the CARE Framework provides a structured approach to AI risk assessment that accounts for agentic-specific threats. The RISE Framework addresses the organizational change management challenges that accompany AI adoption.

If you’re planning to submit your own comments, the deadline is March 9, 2026. The more practitioners who engage with this process, the more likely NIST guidance will reflect operational reality. The RFI and instructions for submitting are available on the Federal Register.
