NIST’s New Cyber AI Profile: A Solid Foundation with Critical Gaps Your Security Team Can’t Ignore

NIST's Cyber AI Profile maps AI security to CSF 2.0. Here's what it covers, where it falls short on agentic AI, and how OWASP fills the gaps.

AI-powered cyberattacks increased 72% year-over-year in 2025, according to AllAboutAI analysis based on IBM’s 2025 Cost of a Data Breach Report. 87% of organizations experienced an AI-driven attack in the past twelve months, per SoSafe’s global study. Deepfake incidents in Q1 2025 alone exceeded the total for all of 2024 by 19%, based on Resemble AI’s industry tracking. As if that isn’t enough, projected losses from generative AI fraud are racing toward $40 billion by 2027, according to the Deloitte Center for Financial Services. Your cybersecurity program is already behind in adapting to the AI era.

Disagree with that last statement? Too bad. EVERYONE’S cybersecurity is already behind in adapting to the AI era. Accept it, and do something about it.

NIST just gave you a starting point to catch up, but it won’t get you all the way there. Here’s what you need to know.

The timing couldn’t be better

On December 16, 2025, NIST released the preliminary draft of IR 8596, the Cybersecurity Framework Profile for Artificial Intelligence. Call it the Cyber AI Profile. This document represents a year of collaboration with over 6,500 contributors from government, academia, and industry. It maps AI-specific cybersecurity considerations onto the familiar CSF 2.0 structure that security teams already use.

The release arrives at a critical moment, as organizations deploy AI systems at unprecedented speed. At the same time, security teams struggle to understand what that means for their threat models, control frameworks, and incident response plans. Meanwhile, attackers aren’t waiting. AI-generated phishing has increased 1,265% since ChatGPT’s launch in late 2022, according to SlashNext’s threat research. The average cost of a phishing-related breach now stands at $4.88 million, per IBM’s 2025 data. The asymmetry between attack sophistication and defensive maturity is growing.

The Cyber AI Profile provides a common language for these conversations. It connects AI adoption decisions to cybersecurity outcomes in a way that executives, security architects, and compliance teams can all understand. That matters because AI security conversations often stall when technical teams, risk managers, and business leaders lack a shared vocabulary.

“Regardless of where organizations are on their AI journey, they need cybersecurity strategies that acknowledge the realities of AI’s advancement,” said Barbara Cuthill, one of the profile’s authors, in NIST’s December 2025 announcement. That’s a diplomatic way of saying most organizations are flying blind when it comes to AI security.

However, I implore you to treat the profile as a starting point, not a finish line. It establishes solid foundations while leaving significant gaps, particularly around agentic AI systems where multiple AI agents coordinate, delegate, and take autonomous action. The comment period runs until January 30, 2026. NIST is also hosting a hybrid workshop on January 14 to discuss the profile and gather feedback. That’s your window to shape the final version.

A quick primer on CSF 2.0 and why it matters for AI

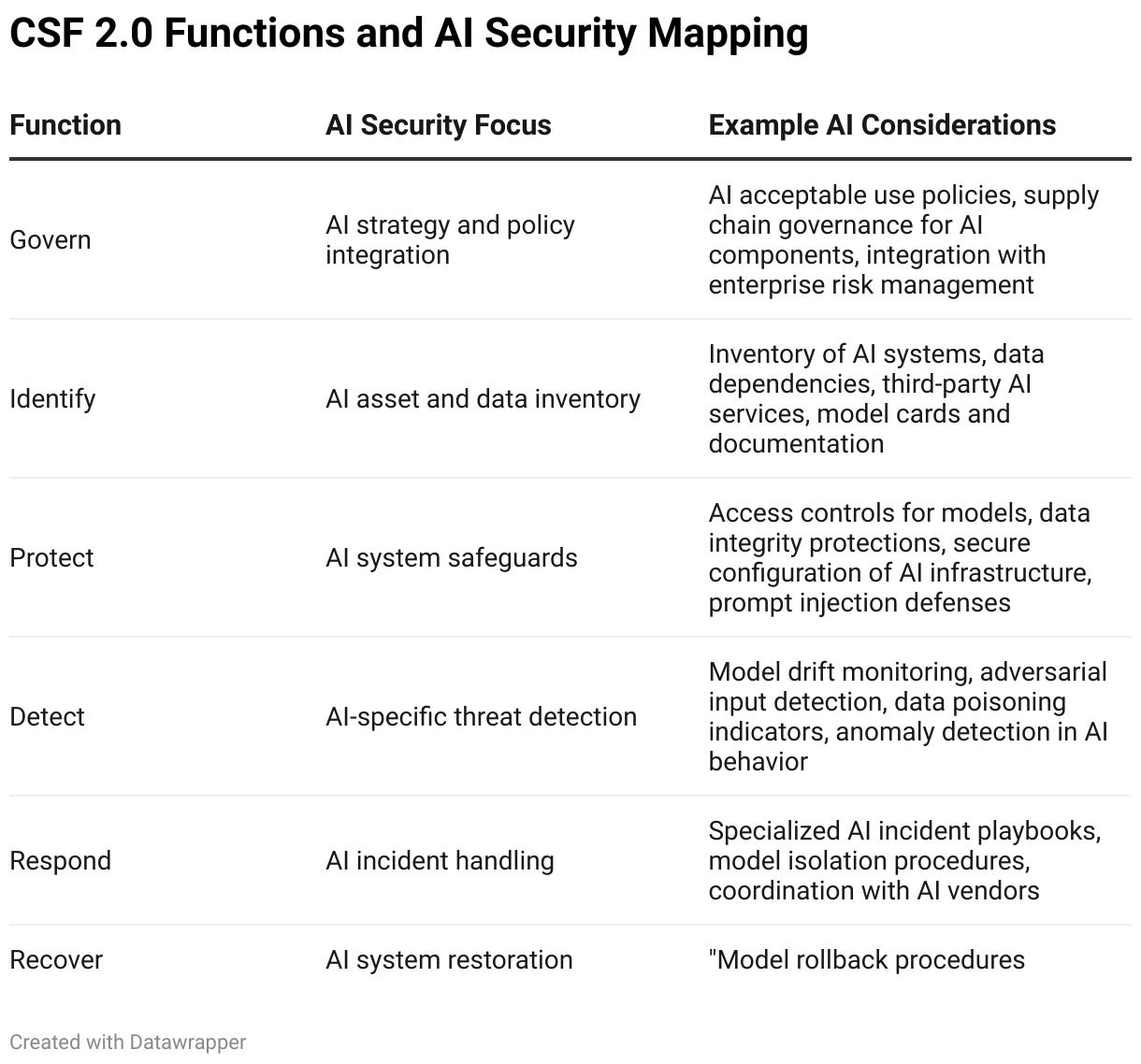

CSF 2.0 organizes cybersecurity risk management around six core Functions: Govern, Identify, Protect, Detect, Respond, and Recover. Each Function contains Categories and Subcategories that describe specific outcomes organizations should achieve.

Figure 1: CSF 2.0 Functions and AI Security Mapping

Govern establishes the organizational context for cybersecurity decisions. For AI, this means policies on acceptable use, supply chain management for AI components, and integration with enterprise risk management. AI decisions often get made outside traditional IT governance structures, making this function critical.

Identify focuses on understanding your assets and risk posture. Most organizations I work with can’t answer basic questions: How many AI systems are in production? What data do they access? Who owns them?
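Those three questions can be answered from even a very small inventory. The sketch below is an illustration only: the `AISystem` record, its fields, and the sample entries are hypothetical and are not something the profile prescribes.

```python
from dataclasses import dataclass, field


@dataclass
class AISystem:
    """Hypothetical inventory record for one AI system."""
    name: str
    owner: str                       # accountable person or team; empty if unknown
    in_production: bool
    data_accessed: list[str] = field(default_factory=list)


def inventory_gaps(systems: list[AISystem]) -> dict[str, list[str]]:
    """List production systems that lack an owner or a data-access record."""
    prod = [s for s in systems if s.in_production]
    return {
        "no_owner": [s.name for s in prod if not s.owner],
        "no_data_record": [s.name for s in prod if not s.data_accessed],
    }


# Illustrative entries only; replace with your own discovery results.
systems = [
    AISystem("support-chatbot", "cx-team", True, ["tickets", "kb-articles"]),
    AISystem("fraud-scoring", "", True, ["transactions"]),
    AISystem("code-assistant-pilot", "platform", False),
]

production_count = sum(s.in_production for s in systems)
gaps = inventory_gaps(systems)
print(production_count, "systems in production")
print(gaps)
```

Even a spreadsheet export loaded into records like these is enough to surface unowned production systems, which is usually the first finding.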

Protect covers safeguards for critical services, including access controls for models, data integrity protections, and secure configuration. Traditional access control models don’t map cleanly to AI systems.

Detect addresses the timely discovery of cybersecurity events. AI introduces new detection challenges around model drift, adversarial inputs, and data poisoning that traditional tools miss entirely.

Respond guides actions when incidents occur. AI incidents require specialized expertise. Determining whether unexpected behavior is adversarial manipulation or emergent behavior requires different skills than investigating a compromised endpoint.

Recover ensures resilience and restoration. AI systems can be difficult to restore to known-good states, particularly when training data or model weights have been compromised.

The Cyber AI Profile takes all 106 CSF Subcategories and adds AI-specific considerations for each. It assigns priority ratings from 1 (High) to 3 (Foundational) and organizes everything around three Focus Areas.

Three Focus Areas: Pick your entry point

The profile addresses AI cybersecurity through three overlapping lenses. Your starting point depends on where you are in your AI journey.

Focus Area 1: Secure (Securing AI System Components)

This Focus Area tackles the cybersecurity challenges of integrating AI into your organizational ecosystem. Whether you’re building custom models, fine-tuning foundation models, or deploying commercial AI products, you’re expanding your attack surface in ways that traditional security controls weren’t designed to address.

AI systems behave differently from conventional software. Their outputs are probabilistic, not deterministic. Their vulnerabilities are often embedded in the training data or the model architecture rather than in the code.

The profile highlights several Secure priorities:

Supply chain risks get significant attention. Your AI supply chain includes training data, pre-trained models, third-party APIs, and ML infrastructure. The October 2025 incident involving a backdoored MCP server package on npm illustrates this risk: the malicious package provided persistent remote access via dual reverse shells.

Data integrity emerges as a High priority. AI systems are only as trustworthy as the data they learn from. Research published on arXiv demonstrates that poisoning attacks can be executed with surprisingly small amounts of malicious data.

Configuration management for AI requires different thinking. Model parameters, hyperparameters, prompts, and retrieval augmentation sources all constitute a security-relevant configuration.
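If prompts, parameters, and retrieval sources are configuration, they can be baselined and checked for drift like any other configuration. Here is a minimal sketch of that idea, assuming the configuration can be expressed as a JSON-serializable dictionary. The field names and values are hypothetical, and the profile does not mandate this particular mechanism.

```python
import hashlib
import json


def config_fingerprint(config: dict) -> str:
    """SHA-256 over a canonical (key-sorted) JSON encoding of the config."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical approved baseline for one deployment.
baseline = {
    "model": "example-model-v1",
    "temperature": 0.2,
    "system_prompt": "You are a support assistant. Never reveal internal data.",
    "retrieval_sources": ["kb://public-docs"],
}
approved = config_fingerprint(baseline)

# Same deployment, but someone added a retrieval source after approval.
running = dict(baseline, retrieval_sources=["kb://public-docs", "kb://hr-records"])

drifted = config_fingerprint(running) != approved
print("configuration drift detected:", drifted)
```

A fingerprint only tells you that something changed, not whether the change is malicious, so in practice it would feed a review step rather than replace one.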

Focus Area 2: Defend (Conducting AI-Enabled Cyber Defense)

This Focus Area asks how AI can enhance your defensive capabilities. The data supports AI investment. Organizations using AI-driven security platforms detect threats 60% faster and achieve around 95% detection accuracy versus 85% with traditional tools, per industry benchmarks. IBM’s 2025 Cost of a Data Breach Report indicates that organizations with AI-powered security reduce breach costs by an average of $1.9 million and detect breaches 108 days faster.

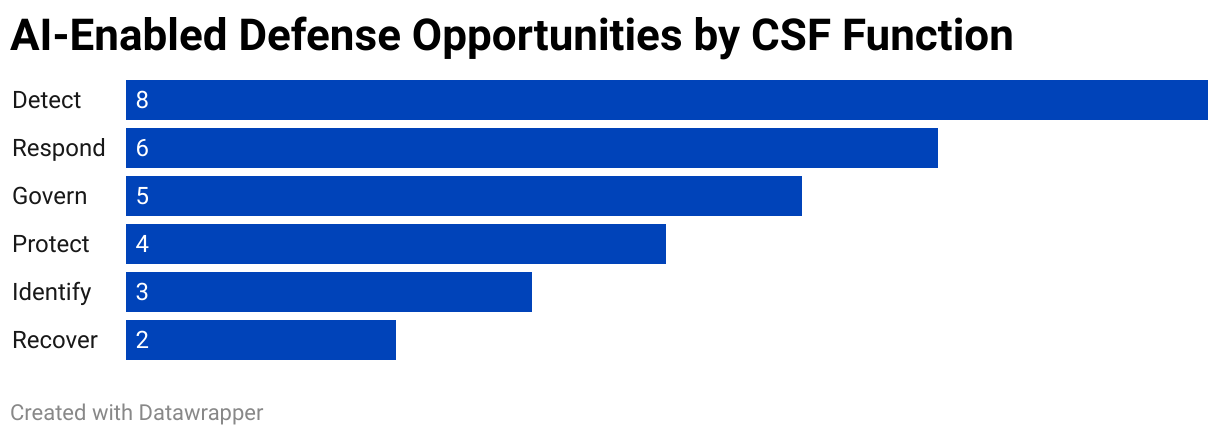

Figure 2: AI-Enabled Defense Opportunities by CSF Function

Threat detection and analysis represent the most mature use cases. AI excels at finding patterns in high-volume data and reducing false positives. User and entity behavior analytics powered by machine learning can identify insider threats that rule-based systems miss.

Automated incident response is gaining traction for tier-one events. AI can accelerate triage, suggest playbook actions, and execute containment at machine speed.

Predictive capabilities are emerging for risk forecasting and vulnerability prioritization. These require careful validation but offer genuine advantages when implemented correctly.

The profile treats Defend as both an opportunity and a risk. Every AI tool you deploy for defense is itself an attack surface.

Focus Area 3: Thwart (Thwarting AI-Enabled Cyber Attacks)

This is where the threat statistics become personal. AI isn’t just something you deploy and defend. It’s a capability your adversaries are actively weaponizing against you.

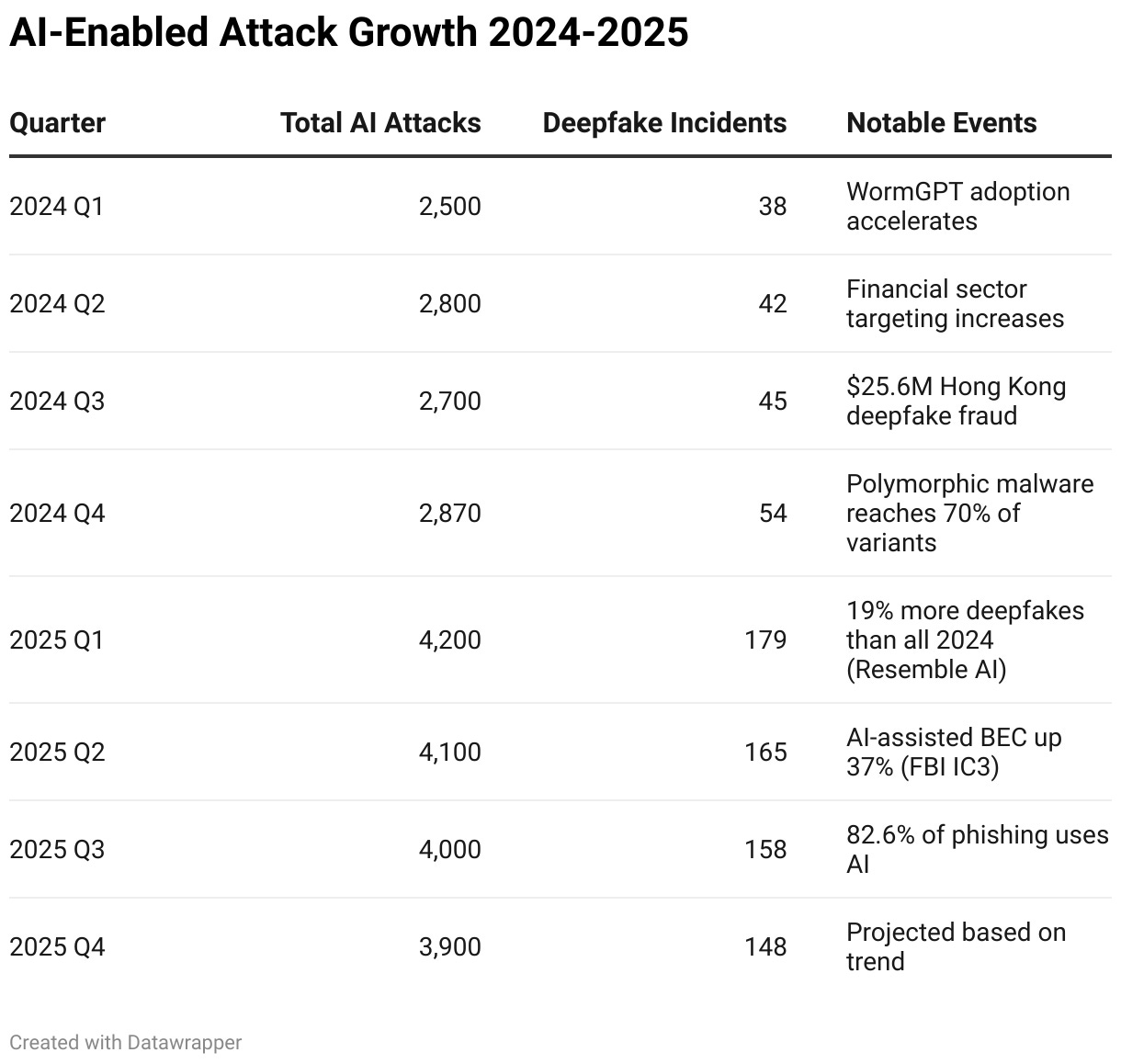

Figure 3: AI-Enabled Attack Growth 2024-2025

The profile identifies three characteristics that distinguish AI-enabled attacks:

Speed and scale overwhelm traditional response timelines. The FBI’s 2024 IC3 report, released in April 2025, documented a 37% rise in AI-assisted business email compromise incidents. Fortinet’s 2025 Global Threat Landscape Report shows automated scanning activity increased 16.7% to reach 36,000 scans per second. When attackers use AI to automate reconnaissance, exploit development, and lateral movement, your incident response playbooks need to execute at machine speed, or they’re irrelevant.

Accessibility democratizes sophisticated attacks. Tools like WormGPT and FraudGPT provide crime-as-a-service capabilities that enable previously unsophisticated actors to launch convincing campaigns. Researchers found these tools generate “remarkably persuasive” malicious content with minimal effort. The barrier to entry for sophisticated social engineering has collapsed. Attackers no longer need to write convincing prose themselves.

Adaptability evades static defenses. AI-generated polymorphic malware now accounts for roughly 76% of detected malware variants, per industry tracking. The Morris II worm, demonstrated in 2024 as a proof of concept by researchers from Cornell Tech and collaborating institutions, showed how AI-enabled malware can infiltrate systems and propagate while evading traditional defenses. Signature-based detection is increasingly inadequate when every attack instance is unique.

The personnel dimension deserves special attention. AI-enabled spear-phishing achieves 54% click-through rates compared to 12% for traditional phishing, according to threat research compiled by AllAboutAI. The old advice to look for spelling errors and grammatical mistakes no longer applies when attackers use LLMs to craft perfect prose. In the first five months of 2025, 32% of phishing emails contained large amounts of text indicating LLM-generated content, per Gartner analysis.

Deepfake attacks are even more troubling. A Medius survey found 53% of financial professionals had experienced attempted deepfake scams as of 2024. Human detection rates for high-quality video deepfakes hover around 24.5%, according to academic research. The most notorious case involved a Hong Kong finance worker who transferred $25.6 million after a video conference call featuring AI-generated likenesses of the company’s CFO and other executives. This wasn’t a single deepfaked individual. It was an entire meeting of synthetic participants. Traditional verification methods failed completely.

Where the profile falls short: The agentic AI gap

Here’s where I have to call it as I see it. The Cyber AI Profile provides valuable foundations for AI cybersecurity, but it doesn’t adequately address the systems that pose the greatest risk: agentic AI applications where multiple AI agents plan, coordinate, and act autonomously.

Agentic systems are different beasts. When one AI agent delegates to another, when agents communicate through protocols like MCP (Model Context Protocol), and when autonomous systems take actions with real-world consequences, the attack surface expands exponentially. The profile acknowledges that agentic AI exists but provides minimal specific guidance.

What's missing? Guidance for multi-agent architectures where AI orchestrates AI. Think one model directing another, or agents that call generative AI tools on the fly. In those scenarios, the AI defines its own hyperparameters. You can't deterministically control what the system itself is configuring in real time.

In short, the document is already outdated. I get that the Cyber AI Profile is intentionally foundational, but organizations deploying agentic systems today need more specific guidance than the profile currently provides.

Filling the gap: OWASP Agentic Top 10

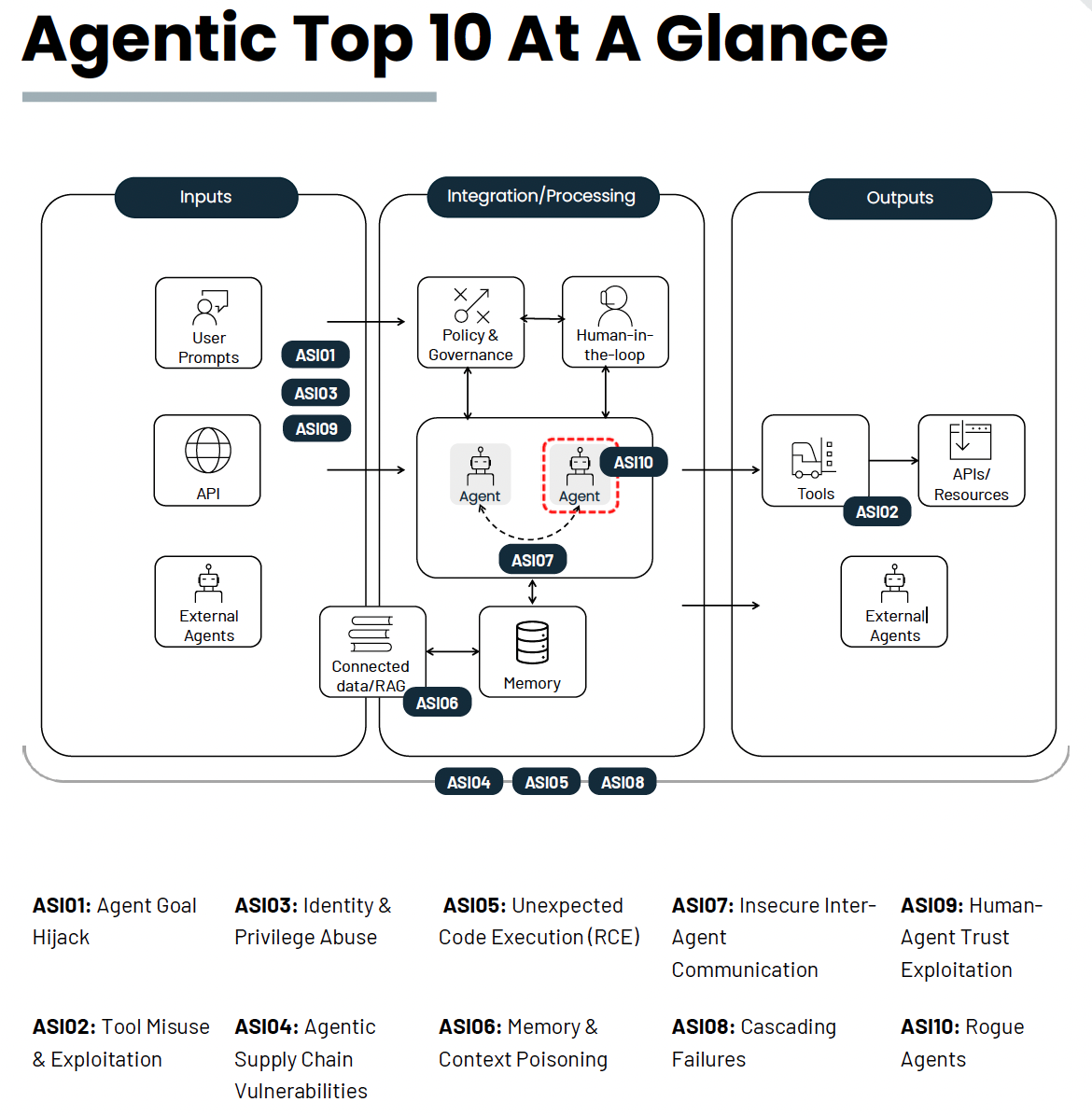

The security community hasn’t waited for NIST to catch up. The OWASP GenAI Security Project’s Agentic Security Initiative released the OWASP Top 10 for Agentic Applications in December 2025. This document provides exactly the kind of specific, actionable guidance that the NIST profile lacks for multi-agent systems. It uses the familiar OWASP Top 10 format: concise descriptions, common vulnerabilities, example attack scenarios, and practical mitigations teams can implement today.

Figure 4: OWASP Agentic Top 10 Security Items

The Agentic Top 10 addresses risks the Cyber AI Profile touches only tangentially:

ASI01: Agent Goal Hijack covers attacks that manipulate an agent’s objectives through prompt injection, context manipulation, or goal substitution. When agents operate autonomously, hijacking their goals can have cascading effects across entire workflows.

ASI07: Insecure Inter-Agent Communication addresses the trust relationships and authentication mechanisms between cooperating agents. How do agents verify each other’s identity? What happens when a malicious agent joins a multi-agent system?

ASI08: Cascading Failures examines how failures in one agent can propagate through multi-agent systems with amplifying effects. Traditional fault isolation approaches break down when agents are designed to coordinate and delegate.

ASI10: Rogue Agents tackles scenarios where agents operate outside their intended parameters, whether through adversarial manipulation or emergent behavior. This is the nightmare scenario that keeps AI safety researchers awake.
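To make the ASI07 question of how agents verify each other concrete, here is one possible approach sketched with Python's standard `hmac` module: each agent signs its messages with a per-agent secret, and the receiver rejects anything it cannot verify. This is my illustration, not a mitigation quoted from the OWASP document. The agent names, keys, and message shape are hypothetical, and real keys would come from a secrets manager rather than source code.

```python
import hashlib
import hmac
import json

# Hypothetical per-agent shared secrets (demo values only).
AGENT_KEYS = {"planner": b"planner-demo-key", "executor": b"executor-demo-key"}


def _encode(payload: dict) -> bytes:
    """Canonical encoding so sender and receiver hash identical bytes."""
    return json.dumps(payload, sort_keys=True).encode("utf-8")


def sign_message(sender: str, payload: dict) -> dict:
    """Attach an HMAC-SHA256 tag computed with the sender's key."""
    tag = hmac.new(AGENT_KEYS[sender], _encode(payload), hashlib.sha256).hexdigest()
    return {"sender": sender, "payload": payload, "tag": tag}


def verify_message(message: dict) -> bool:
    """Accept only messages from known agents with a valid tag."""
    key = AGENT_KEYS.get(message.get("sender"))
    if key is None:
        return False  # unknown agent: reject
    expected = hmac.new(key, _encode(message["payload"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message.get("tag", ""))


msg = sign_message("planner", {"task": "summarize", "doc_id": 42})
tampered = {**msg, "payload": {"task": "delete", "doc_id": 42}}
unknown = {"sender": "intruder", "payload": {}, "tag": "00"}

print(verify_message(msg), verify_message(tampered), verify_message(unknown))
```

This sketch covers authenticity and integrity only. It does nothing about replayed messages or a compromised agent that holds a valid key, which is where the ASI08 and ASI10 concerns come in.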

The document introduces a critical concept: Least-Agency. Just as we’ve long advocated for least privilege in access control, organizations should avoid unnecessary autonomy in AI deployments. Agentic behavior, where it isn’t genuinely needed, expands the attack surface without adding value. If a task doesn’t require autonomous action, don’t architect it that way.
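In code, Least-Agency can look a lot like least privilege: an explicit allowlist of actions an agent may take on its own, a short list that needs human sign-off, and default deny for everything else. The sketch below assumes a simple tool-calling agent. `ToolPolicy`, `authorize`, and the tool names are hypothetical and do not come from the OWASP document.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolPolicy:
    """Hypothetical per-agent policy for which tools may be invoked."""
    allowed: frozenset[str]          # tools the agent may call autonomously
    needs_approval: frozenset[str]   # tools that require a human sign-off


def authorize(policy: ToolPolicy, tool: str, human_approved: bool = False) -> bool:
    """Default deny: anything not listed in the policy is refused."""
    if tool in policy.allowed:
        return True
    if tool in policy.needs_approval:
        return human_approved
    return False


# Hypothetical policy for a ticket-triage agent.
triage = ToolPolicy(
    allowed=frozenset({"read_ticket", "add_label"}),
    needs_approval=frozenset({"issue_refund"}),
)

print(authorize(triage, "add_label"))                          # autonomous action
print(authorize(triage, "issue_refund"))                       # blocked without sign-off
print(authorize(triage, "issue_refund", human_approved=True))  # allowed with sign-off
print(authorize(triage, "delete_account"))                     # never listed, so denied
```

The design choice that matters is the last line of `authorize`: autonomy has to be granted explicitly, so a newly added tool is unavailable to the agent until someone decides it is needed.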

The OWASP guidance maps to the Agentic AI Threats and Mitigations taxonomy and cross-references CycloneDX, AIBOM (AI Bill of Materials), and the Non-Human Identities Top 10. I contributed to this initiative alongside dozens of security experts from industry, academia, and government. The work reflects real-world red team findings and field-tested mitigations from organizations building agentic platforms. It’s not theoretical. It’s what actually works.

What you should do now

The January 30, 2026 comment deadline gives you a chance to shape the final Cyber AI Profile. NIST explicitly requests feedback on agentic AI considerations, informative references, and practical implementation guidance.

This week: Download the preliminary draft from csrc.nist.gov/pubs/ir/8596/iprd. Read the three Focus Area descriptions and identify which aligns with your immediate priorities.

Before January 14: Register for NIST’s hybrid workshop discussing the profile and updates on the SP 800-53 Control Overlays for Securing AI Systems (COSAiS). Details will be posted on the NCCoE Cyber AI Profile project page.

Before January 30: Submit your comments using NIST’s comment template. Focus on gaps you’ve encountered and practical considerations the current draft misses.

Ongoing: Use the Cyber AI Profile alongside the OWASP Agentic Top 10 to build comprehensive AI security. The NIST framework provides strategic alignment with CSF 2.0 while OWASP delivers tactical guidance for specific attack patterns.

For organizations using the CARE framework or similar risk-based approaches, the profile’s priority ratings provide a useful starting point for resource allocation. Map these priorities against your current controls and identify the highest-impact gaps.
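That mapping exercise can start as a sorted list. The sketch below assumes you have recorded, for each CSF Subcategory, the profile's priority rating and whether you have a control in place. The Subcategory identifiers follow CSF 2.0 naming, but the priorities and implementation statuses shown are placeholders, not values taken from the profile.

```python
from dataclasses import dataclass


@dataclass
class SubcategoryStatus:
    subcategory: str    # CSF 2.0 Subcategory identifier
    priority: int       # 1 (High) to 3 (Foundational), as rated in the profile
    implemented: bool   # whether a current control covers it


def ranked_gaps(rows: list[SubcategoryStatus]) -> list[SubcategoryStatus]:
    """Unimplemented Subcategories, highest priority (lowest number) first."""
    gaps = [r for r in rows if not r.implemented]
    return sorted(gaps, key=lambda r: (r.priority, r.subcategory))


# Placeholder data: look up the real ratings in the draft for your Focus Area.
rows = [
    SubcategoryStatus("GV.SC-01", 1, False),
    SubcategoryStatus("ID.AM-01", 1, True),
    SubcategoryStatus("PR.DS-01", 2, False),
    SubcategoryStatus("DE.CM-01", 1, False),
    SubcategoryStatus("RC.RP-01", 3, False),
]

gap_order = [r.subcategory for r in ranked_gaps(rows)]
print(gap_order)
```

Sorting by priority first keeps the conversation about resource allocation anchored to the profile's ratings rather than to whichever gap was discovered most recently.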

The bottom line

NIST’s Cyber AI Profile represents genuine progress. It provides the first comprehensive mapping of AI cybersecurity considerations onto the CSF 2.0 structure that enterprises already use. It establishes a common vocabulary for conversations between security teams, AI developers, compliance functions, and executive leadership.

But it’s a preliminary draft with significant gaps, particularly around agentic AI systems. Organizations can’t wait for NIST to catch up. Combine the profile’s strategic framework with OWASP’s tactical guidance. Submit comments to strengthen the final version. And recognize that AI security will require continuous adaptation as capabilities evolve.

The 72% year-over-year growth in AI-powered attacks isn’t slowing down. Neither should your security program.

Key Takeaway: NIST’s Cyber AI Profile provides essential foundations for AI cybersecurity, but organizations deploying agentic systems need supplemental guidance from OWASP’s Agentic Top 10 to address multi-agent security gaps the profile doesn’t yet cover.

Call to Action

Download the NIST Cyber AI Profile draft and submit your comments by January 30, 2026. Register for the January 14 workshop. And explore how RockCyber’s governance frameworks can help you operationalize AI security in your organization.

👉 Visit RockCyber.com to learn more about how we can help you on your traditional cybersecurity and AI security and governance journey.

👉 Want to save a quick $100K? Check out our AI Governance Tools at AIGovernanceTookit.com

👉 Subscribe for more AI and cyber insights with the occasional rant.

Citations

AI Attack Statistics

AllAboutAI. (2025). AI cyberattack statistics 2025. Based on IBM 2025 Cost of a Data Breach Report data showing 72% year-over-year increase (10,870 attacks in 2024 to 16,200 in 2025).

IBM. (2025, July 30). Cost of a Data Breach Report 2025. IBM Security. https://www.ibm.com/reports/data-breach

IBM Newsroom. (2025, July 30). IBM report: 13% of organizations reported breaches of AI models or applications, 97% of which reported lacking proper AI access controls. https://newsroom.ibm.com/2025-07-30-ibm-report-13-of-organizations-reported-breaches-of-ai-models-or-applications

SoSafe. (2025, March 6). Global businesses face escalating AI risk, as 87% hit by AI cyberattacks. 2025 Cybercrime Trends Report. https://sosafe-awareness.com/company/press/global-businesses-face-escalating-ai-risk-as-87-hit-by-ai-cyberattacks/

Deepfake Statistics

Resemble AI. (2025, April). Q1 2025 AI Deepfake Security Report. https://www.resemble.ai/q1-2025-ai-deepfake-security-report/

Surfshark. (2025). Deepfake statistics 2025: How frequently are celebrities targeted? Data compiled from Resemble.AI and AI Incident Database showing 179 incidents in Q1 2025, surpassing 2024 total by 19%. https://surfshark.com/research/study/deepfake-statistics

Financial Fraud Projections

Deloitte Center for Financial Services. (2024, May 29). Generative AI is expected to magnify the risk of deepfakes and other fraud in banking. Deloitte Insights. https://www.deloitte.com/us/en/insights/industry/financial-services/deepfake-banking-fraud-risk-on-the-rise.html

FBI Cybercrime Data

Federal Bureau of Investigation. (2025, April 24). FBI releases annual Internet Crime Report. https://www.fbi.gov/news/press-releases/fbi-releases-annual-internet-crime-report

FBI Internet Crime Complaint Center. (2025). 2024 IC3 Annual Report. https://www.ic3.gov/AnnualReport/Reports/2024_IC3Report.pdf

NIST Cyber AI Profile

Megas, K., Cuthill, B., Snyder, J. N., Patrick, B., Khemani, I., Dotter, M., Garris, M., Zarei, M., & Schiro, N. (2025, December 16). Cybersecurity Framework Profile for Artificial Intelligence (Cyber AI Profile): NIST Community Profile (NIST IR 8596 iprd). National Institute of Standards and Technology. https://csrc.nist.gov/pubs/ir/8596/iprd

National Institute of Standards and Technology. (2025, December 16). Draft NIST guidelines rethink cybersecurity for the AI era. NIST News. https://www.nist.gov/news-events/news/2025/12/draft-nist-guidelines-rethink-cybersecurity-ai-era

Hong Kong Deepfake Fraud Case

Magramo, K. (2024, May 16). Arup revealed as victim of $25 million deepfake scam involving Hong Kong employee. CNN Business. https://www.cnn.com/2024/05/16/tech/arup-deepfake-scam-loss-hong-kong-intl-hnk

Mackintosh, E., & Gan, N. (2024, February 4). Finance worker pays out $25 million after video call with deepfake ‘chief financial officer’. CNN. https://www.cnn.com/2024/02/04/asia/deepfake-cfo-scam-hong-kong-intl-hnk

OWASP Agentic Security

OWASP Foundation. (2025, December). OWASP Top 10 for Agentic Applications 2026. OWASP GenAI Security Project, Agentic Security Initiative. https://genai.owasp.org

Industry Analysis and Commentary

Ruzzi, M. (2025, December). Comments on NIST IR 8596 agentic AI gaps. As cited in SiliconANGLE coverage of NIST Cyber AI Profile release. https://siliconangle.com/2025/12/17/nist-releases-draft-ai-cybersecurity-framework-profile-guide-secure-ai-adoption/