NIST CSF 2.0 MCP Server: shipping an open source engine that turns the framework into action

Launch an open source engine that turns NIST CSF 2.0 into assessments, plans, and executive reports. Built by RockCyber.

What do they say? “Laziness is the mother of innovation?” Well, maybe not exactly, but that’s the case here. I built something I wished I had on every consulting engagement. The NIST CSF 2.0 MCP Server gives you an open source way to drive assessments, planning, and reporting with speed and consistency. It speaks the language your team already uses and the agents you are testing. It exposes the entire NIST CSF 2.0 model through tools that work with AI assistants. It shortens the path from “we should assess” to “here is the plan and the report.” Today, it works with Claude through MCP. I need your help to finish the OpenAI side (TL;DR - I spent most of Labor Day on it. It’s a pain in the ass, and I know there are plenty of you out there smarter than me).

The GitHub repo is located at https://github.com/rocklambros/nist-csf-2-mcp-server

Why I created it

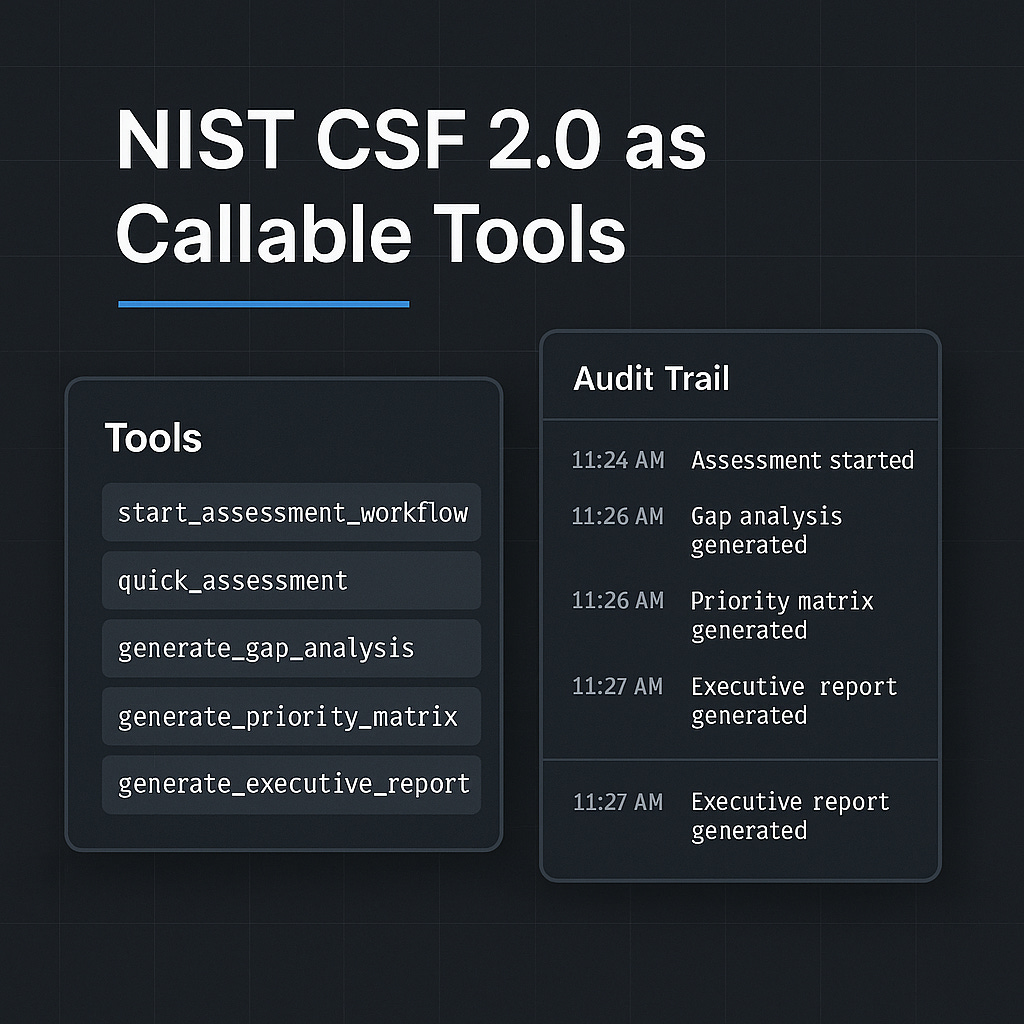

Most security programs tend to stall in the same spots. Inventory, baselines, gap analysis, and reporting take too long. Work repeats across auditors, customers, and internal reviews. AI assistants can help, but they need structure, guardrails, and a shared source of truth. That is why I built a server that makes the NIST Cybersecurity Framework 2.0 available as a real system, not a PDF. The model covers 6 functions, 23 categories, and 106 subcategories, which are all wired into a database and a suite of 39 tools.

I created it to remove friction. To give teams a shared set of verbs for assessment and planning. To let leaders see clear progress without guessing. To let AI agents do useful work that maps to the framework the business expects.

Lessons Learned

Vibe coding isn’t as simple as people make it out to be. What started out as a weekend project took almost 40 full hours to complete (and I’d argue it’s still not fully complete).

Claude Code is FANTASTIC. Keep in mind that using the Claude Opus model is 3x as expensive as using the Claude Sonnet model. I found out the hard way.

OpenAI really needs to make it easier to integrate MCP servers, similar to how Anthropic does via Claude desktop.

For the next one, I’ll probably use Python instead of TypeScript. For a relatively small MCP server, the performance hit is a fair trade for avoiding the headaches I had with TypeScript errors and warnings breaking the Docker build.

What it is in plain terms

The project is a Model Context Protocol (MCP) server with a full NIST CSF 2.0 data model, business logic for assessments, and a set of tools that your assistant can call. It also exposes an HTTP REST API for custom apps. You can run it in development without authentication to test, then switch to API keys or OAuth 2.1 for production. It stores profiles, scores, gaps, and reports in SQLite with a schema built for repeatable audits. Test coverage targets 95 percent across unit, integration, security, and performance paths.

The goal isn’t another GRC portal. The goal is a clean engine that other tools and assistants can call to perform real work. You get relatively deterministic functions, stable interfaces, and traceable outputs.

Capabilities that convert to business outcomes

You need outcomes more than features. Here is what the server enables.

Consistent profiles. Use start_assessment_workflow to create an organization profile and initialize current and target states. The server captures scope, context, and owners. You get baseline metadata ready for assessment. Sample prompts are in the project’s README.md and PROMPTS.md files.

Faster baselines. Use quick_assessment to score against every subcategory in minutes. The toolset covers the full model. You get a current profile, a target profile, and a gap list.

Complete assessments. Use assess_maturity to score every subcategory with evidence references and an audit trail. The output rolls up by function and category. You get subcategory scores you can defend.

Prioritized plans. Run generate_gap_analysis and generate_priority_matrix to get ranked work. The logic weighs maturity, risk, and effort. It produces a simple, defensible sequence of actions.

Repeatable reporting. Use generate_executive_report for C-suite-friendly summaries and create_custom_report for deeper dives. Each report ties back to functions and subcategories.

Compliance lift without bloat. Use generate_compliance_report to map to adjacent regimes like ISO 27001 or PCI DSS. The schema supports crosswalks and evidence references.

Real tracking. Use track_progress to record completed actions against plan. Progress rolls up to functions and categories so leadership sees movement where it matters.

The server exposes 39 specialized tools across framework lookup, assessment, analysis, planning, reporting, and progress tracking. That coverage is the difference between a demo and a system you can use on day one.
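To make the prioritization idea concrete, here is a minimal sketch of how gaps might be ranked by maturity, risk, and effort. The GapItem shape, the 0 to 5 maturity scale, the 1 to 5 risk and effort scales, and the scoring formula are all assumptions for illustration. This is not the actual logic behind generate_priority_matrix.

```typescript
// Hypothetical shape of one gap; field names and scales are illustrative assumptions.
interface GapItem {
  subcategoryId: string;   // e.g. "GV.OC-01"
  currentMaturity: number; // assumed 0-5 scale
  targetMaturity: number;  // assumed 0-5 scale
  risk: number;            // assumed 1 (low) to 5 (high)
  effort: number;          // assumed 1 (small) to 5 (large)
}

// Assumed formula: a larger maturity gap and higher risk raise priority,
// higher effort lowers it.
function priorityScore(gap: GapItem): number {
  const maturityGap = Math.max(0, gap.targetMaturity - gap.currentMaturity);
  return (maturityGap * gap.risk) / gap.effort;
}

// Return a new array ranked from highest to lowest priority (input is not mutated).
function rankGaps(gaps: GapItem[]): GapItem[] {
  return [...gaps].sort((a, b) => priorityScore(b) - priorityScore(a));
}

// Made-up sample data for illustration only.
const sampleGaps: GapItem[] = [
  { subcategoryId: "GV.OC-01", currentMaturity: 1, targetMaturity: 3, risk: 3, effort: 2 },
  { subcategoryId: "PR.AA-01", currentMaturity: 1, targetMaturity: 4, risk: 5, effort: 3 },
  { subcategoryId: "DE.CM-01", currentMaturity: 2, targetMaturity: 3, risk: 2, effort: 4 },
];

const rankedIds: string[] = rankGaps(sampleGaps).map((g) => g.subcategoryId);
```

With this sample data the high-risk identity gap lands first and the low-risk, high-effort monitoring gap lands last. A real matrix would likely add weights you can tune per organization.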

How NIST CSF 2.0 shows up in the product

The framework is not an overlay. It is the core data model.

Functions. Govern, Identify, Protect, Detect, Respond, Recover.

Categories and subcategories. Each has IDs, descriptions, and implementation examples baked into the lookup tools.

Profiles. Current and target states for your organization, as well as for specific products or units.

Assessments. Scored at the subcategory level with evidence hooks and an audit trail.

Gaps and plans. Derived objects that connect scores, risks, and actions with timelines.

Data point: every operation stores to an audit_trail, and reports are saved with metadata to ensure clear lineage from input to output. That alone cuts time during audits and customer reviews.
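As a rough illustration of how subcategory scores can roll up to the function level, here is a small sketch. The SubcategoryScore shape and the simple averaging rule are assumptions. The real schema and rollup logic in the repo may differ.

```typescript
// The six CSF 2.0 functions, identified by their ID prefixes.
type CsfFunction = "GV" | "ID" | "PR" | "DE" | "RS" | "RC";

// Hypothetical assessment row; the real schema may differ.
interface SubcategoryScore {
  subcategoryId: string; // e.g. "PR.AA-01": function "PR", category "PR.AA"
  score: number;         // assumed 0-5 maturity score
  evidenceRef?: string;  // optional pointer to supporting evidence
}

// Derive the function prefix from a subcategory ID such as "PR.AA-01".
function functionOf(subcategoryId: string): CsfFunction {
  const prefix = subcategoryId.split(".")[0];
  const known: CsfFunction[] = ["GV", "ID", "PR", "DE", "RS", "RC"];
  const match = known.find((f) => f === prefix);
  if (!match) throw new Error(`Unknown CSF function in "${subcategoryId}"`);
  return match;
}

// Average the subcategory scores for each function that has at least one score.
function rollUpByFunction(
  scores: SubcategoryScore[]
): Partial<Record<CsfFunction, number>> {
  const sums: Partial<Record<CsfFunction, { sum: number; count: number }>> = {};
  for (const s of scores) {
    const fn = functionOf(s.subcategoryId);
    const t = sums[fn] ?? { sum: 0, count: 0 };
    sums[fn] = { sum: t.sum + s.score, count: t.count + 1 };
  }
  const averages: Partial<Record<CsfFunction, number>> = {};
  for (const fn of Object.keys(sums) as CsfFunction[]) {
    const t = sums[fn];
    if (t) averages[fn] = t.sum / t.count;
  }
  return averages;
}
```

Calling rollUpByFunction with scores for PR.AA-01 and PR.DS-01 returns one averaged number under the PR key. Functions with no scores are simply absent from the result rather than reported as zero.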

What works today, and where I need help

Claude integration works today through MCP. You can connect to Claude Desktop or any MCP-capable client, load the server, and start running tools. The assistant will discover the toolset and request the necessary inputs. That means you can have an honest conversation that ends with an assessment profile, a gap analysis, and an executive report.

The HTTP REST API is live, but I have not yet completed a clean integration with OpenAI Actions in a manner that withstands real-world use. I attempted to stand up an OpenAPI spec and wire it into a custom GPT. I encountered proxy and certificate issues, where curl commands would work but tests in OpenAI would fail. If you speak OpenAPI in your sleep and have wired custom GPT Actions to nontrivial backends, I want your help. This is open source for a reason. Help me make the OpenAI path a first-class option.

Data point: the codebase ships with Zod validation on every exposed input and uses parameterized queries across the board to prevent SQL injection. The authentication stack supports no-auth for development, API keys for lightweight deployments, and OAuth 2.1 with JWT for enterprise deployments.
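The pattern of validating first and then binding values as parameters can be sketched without any dependencies. In the snippet below, parseScoreInput is a hand-rolled stand-in for what a Zod schema would do. The ScoreInput fields, the ID pattern, the 0 to 5 score range, and the assessments table with its columns are hypothetical names chosen for illustration.

```typescript
// Hypothetical input for recording one subcategory score.
interface ScoreInput {
  profileId: string;
  subcategoryId: string;
  score: number;
}

// Dependency-free stand-in for the kind of check a Zod schema would perform.
// Throws on anything that does not match the assumed shape.
function parseScoreInput(raw: unknown): ScoreInput {
  if (typeof raw !== "object" || raw === null) {
    throw new Error("input must be an object");
  }
  const r = raw as Record<string, unknown>;
  const profileId = r["profileId"];
  const subcategoryId = r["subcategoryId"];
  const score = r["score"];
  if (typeof profileId !== "string" || profileId.length === 0) {
    throw new Error("profileId must be a non-empty string");
  }
  // Assumed ID pattern, e.g. "PR.AA-01".
  if (typeof subcategoryId !== "string" || !/^[A-Z]{2}\.[A-Z]{2}-\d{2}$/.test(subcategoryId)) {
    throw new Error("subcategoryId must look like PR.AA-01");
  }
  // Assumed 0-5 integer maturity scale.
  if (typeof score !== "number" || !Number.isInteger(score) || score < 0 || score > 5) {
    throw new Error("score must be an integer from 0 to 5");
  }
  return { profileId, subcategoryId, score };
}

// Build a parameterized statement: values travel separately from the SQL text,
// so user input is never concatenated into the query string.
function buildInsert(input: ScoreInput): { sql: string; params: (string | number)[] } {
  return {
    sql: "INSERT INTO assessments (profile_id, subcategory_id, score) VALUES (?, ?, ?)",
    params: [input.profileId, input.subcategoryId, input.score],
  };
}
```

The SQL text stays constant no matter what the caller sends. A hostile profileId ends up as an ordinary bound value, which is the property that parameterized queries give you.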

Use cases that matter in your first 90 days

Here is how I would use it if I were your vCISO.

Day 1 to 10. Baseline. Run start_assessment_workflow to set the current profile across all functions. Capture evidence references for hot spots. Share the executive report with your leadership team.

Day 11 to 30. Plan. Use generate_priority_matrix to pick the first 10 interventions. Feed that list into your ticketing system. Tie tasks to subcategories so you get credit where it counts.

Day 31 to 60. Move. Execute control improvements and use track_progress to update status. Re-run a narrow assessment on the functions you touched.

Day 61 to 90. Prove. Regenerate the executive report and show function-level movement. Maintain a monthly report cadence thereafter.

Data point: the server includes a create_implementation_plan function that outputs a dated plan with owners, durations, and dependencies. That artifact enables work without the need for a separate project plan tool.
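To show what a plan with owners, durations, and dependencies involves, here is a minimal scheduling sketch. The PlanTask fields are assumptions, and the output uses day offsets from the plan start rather than calendar dates. It is not the output format of create_implementation_plan.

```typescript
// Hypothetical plan task; field names are assumptions for illustration.
interface PlanTask {
  id: string;
  owner: string;
  durationDays: number;
  dependsOn: string[]; // ids of tasks that must finish first
}

interface ScheduledTask extends PlanTask {
  startDay: number; // offset in days from plan start
  endDay: number;   // startDay + durationDays
}

// Schedule each task to start as soon as all of its dependencies have ended.
// Throws on unknown task ids and on dependency cycles.
function schedulePlan(tasks: PlanTask[]): ScheduledTask[] {
  const byId = new Map<string, PlanTask>();
  for (const t of tasks) byId.set(t.id, t);

  const done = new Map<string, ScheduledTask>();
  const visiting = new Set<string>();

  function visit(id: string): ScheduledTask {
    const cached = done.get(id);
    if (cached) return cached;
    const task = byId.get(id);
    if (!task) throw new Error(`Unknown task "${id}"`);
    if (visiting.has(id)) throw new Error(`Dependency cycle at "${id}"`);

    visiting.add(id);
    let startDay = 0;
    for (const dep of task.dependsOn) {
      startDay = Math.max(startDay, visit(dep).endDay);
    }
    visiting.delete(id);

    const scheduled: ScheduledTask = {
      ...task,
      startDay,
      endDay: startDay + task.durationDays,
    };
    done.set(id, scheduled);
    return scheduled;
  }

  // Preserve the caller's task order in the result.
  return tasks.map((t) => visit(t.id));
}
```

Given an MFA rollout that takes 5 days and a log review that depends on it and takes 3 days, the review is scheduled for days 5 through 8. Turning offsets into dates is then a matter of adding them to a chosen start date.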

For AI assistants and custom apps

Agents need structured tools, not vague prompts. MCP gives Claude that structure right now. The server advertises tool names, inputs, and outputs. Your assistant calls assess_maturity, gets structured results, then calls generate_gap_analysis, then generate_executive_report. Each step is an explicit tool call. You can review the audit trail and see each call in order.
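Under the hood, each of those steps is a JSON-RPC 2.0 message with the MCP method tools/call. The sketch below builds the three requests in order. The tool names come from the server, but the profile_id argument name and its value are assumptions. Check each tool's advertised input schema for the real parameters.

```typescript
// Shape of an MCP tool invocation: a JSON-RPC 2.0 request with method "tools/call".
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}

// Small helper that wraps a tool name and its arguments in the request envelope.
function toolCall(
  id: number,
  name: string,
  args: Record<string, unknown>
): ToolCallRequest {
  return { jsonrpc: "2.0", id, method: "tools/call", params: { name, arguments: args } };
}

// Hypothetical argument name and value; the real tools may expect different inputs.
const profileId = "profile-123";

// The assess, analyze, report sequence as three explicit, ordered calls.
const workflow: ToolCallRequest[] = [
  toolCall(1, "assess_maturity", { profile_id: profileId }),
  toolCall(2, "generate_gap_analysis", { profile_id: profileId }),
  toolCall(3, "generate_executive_report", { profile_id: profileId }),
];

// What would go over the wire for the first step.
const firstRequestJson: string = JSON.stringify(workflow[0]);
```

In practice your MCP client builds these messages for you. The value of seeing them is that every step is a discrete, inspectable request rather than a free-form prompt.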

For custom apps, the HTTP API exposes the same functions. You can wire a simple dashboard or add the engine behind an internal portal. You can keep SQLite for speed or point the repository at a managed store if you need scale. The logic layer is written in clean TypeScript, making it easy to adapt.

Data point: The project targets Node 18 and TypeScript 5 with a Dockerized build, allowing for predictable deployment across development and production environments.

Security and validation without ceremony

You should not have to trade simplicity for safety. The server defaults to safe patterns.

Input validation. Zod schemas on every endpoint and tool.

Auth tiers. No-auth for dev, API keys for small teams, OAuth 2.1 with JWT for enterprise.

SQL safety. Parameterized queries throughout.

Testing. Jest with a goal of 95 percent coverage across key paths.

Data point: the audit_trail table records who called what tool, when, with which parameters, and what the output summary looked like. That is how you pass a customer audit without a fire drill.
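One way to picture that audit record is a wrapper that logs every tool call as it happens. This is a sketch under assumptions: the AuditEntry fields mirror the description above but are not the actual table columns, the in-memory array stands in for the audit_trail table, and count_scored is a made-up tool.

```typescript
// Hypothetical audit row: who called what tool, when, with which parameters,
// and a short summary of the output.
interface AuditEntry {
  caller: string;
  tool: string;
  calledAt: string;      // ISO 8601 timestamp
  params: string;        // JSON-serialized parameters
  outputSummary: string;
}

// In-memory stand-in for the audit_trail table.
const auditTrail: AuditEntry[] = [];

// Wrap a tool handler so each successful call appends an audit entry.
// (A fuller version would also record failed calls.)
function withAudit<P, R>(
  tool: string,
  handler: (params: P) => R,
  summarize: (result: R) => string
): (caller: string, params: P) => R {
  return (caller, params) => {
    const result = handler(params);
    auditTrail.push({
      caller,
      tool,
      calledAt: new Date().toISOString(),
      params: JSON.stringify(params),
      outputSummary: summarize(result),
    });
    return result;
  };
}

// Made-up tool for illustration: counts how many scores were supplied.
const countScored = withAudit(
  "count_scored",
  (p: { scores: number[] }) => p.scores.length,
  (n) => `${n} subcategories scored`
);

const scoredCount = countScored("analyst@example.com", { scores: [2, 3, 4] });
```

After that call, auditTrail holds one entry naming the caller, the tool, the serialized parameters, and the summary. Because the wrapper sits around the handler, individual tools do not need their own logging code.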

Where this helps most

New program stand-ups. Fast baselines, clear plans, and credible reports.

Board and customer reviews. Simple narratives that match the framework your customers know.

Tool rationalization. Tie control changes to subcategories and track impact.

Supplier assessments. Hand suppliers a repeatable questionnaire powered by the same engine.

If you lead a large enterprise, this becomes a control service behind your intake, release gates, and quarterly reviews. If you run a smaller shop, this becomes your turnkey way to show maturity and progress without buying a large platform.

Data point: the framework query tools include csf_lookup, search_framework, and get_related_subcategories, which helps analysts and agents find the right control language in seconds instead of hunting across PDFs.

Getting started in ten minutes

Clone the repo:

git clone https://github.com/rocklambros/nist-csf-2-mcp-server

Install and seed:

npm install, then npm run dev

Point Claude Desktop at the MCP server

Run quick_assessment to see scores across the 106 subcategories

Generate your first executive report

If you prefer HTTP, start the API with npm run start:http. For both MCP and HTTP, use npm run start:dual.

Everything you need to know is in the project’s README.md file, and you can find sample prompts in PROMPTS.md.

Data point: the schema ships preloaded with the full NIST CSF 2.0 model, which lets you test right away without data entry.

Roadmap and how to contribute

This is community work. Here is where help moves the ball.

OpenAI Actions. Help finish a clean OpenAPI spec and resolve origin and schema constraints so custom GPTs can call the HTTP API without friction.

Auth hardening. Review the OAuth 2.1 flows and token handling and suggest improvements for real enterprise use.

Database adapters. Add Postgres and MySQL options for teams that want managed stores without code forks.

Crosswalks. Contribute mappings to ISO 42001 for AI governance, NIST AI RMF, HIPAA, and other regimes you run.

Telemetry. Propose a simple analytics pattern for tool usage that respects privacy and helps teams tune adoption.

Open an issue, start a discussion, or send a pull request. Clear descriptions, small patches, and tests make it easy to merge.

Data point: the codebase follows ESLint and Prettier rules, which keeps contributions readable and reduces review time.

Where this fits with RockCyber

This project reflects how I work with clients. I believe in simple engines that produce real artifacts and connect to the frameworks that matter. If you want help installing the server and wiring it into your program, reach out. If you want a partner to run the outcomes on a cadence, my Virtual Chief Information Security Officer and Virtual Chief AI Officer service can carry out the execution while your team scales.

You can always find more of my thoughts in RockCyber Musings and learn about services at RockCyber. If you are building AI governance and need a pragmatic path, my CARE and RISE frameworks will feel familiar once you see this server in action.

Data point: the generate_executive_report output is written for leaders. It explains maturity, gaps, and next actions in business terms. That is the report you can send to your board without a rewrite.

Key Takeaway: The NIST CSF 2.0 MCP Server turns a trusted framework into callable tools that produce baselines, plans, and reports fast, and it is open source so you can use it today and help make it better tomorrow.

Call to Action

👉 Clone the GitHub repo at https://github.com/rocklambros/nist-csf-2-mcp-server, start playing, and contribute

👉 Book a Complimentary AI Risk Review HERE

👉 Subscribe for more AI security and governance insights with the occasional rant.