Training vs Inference: Where Your Data Actually Leaks in LLM Systems

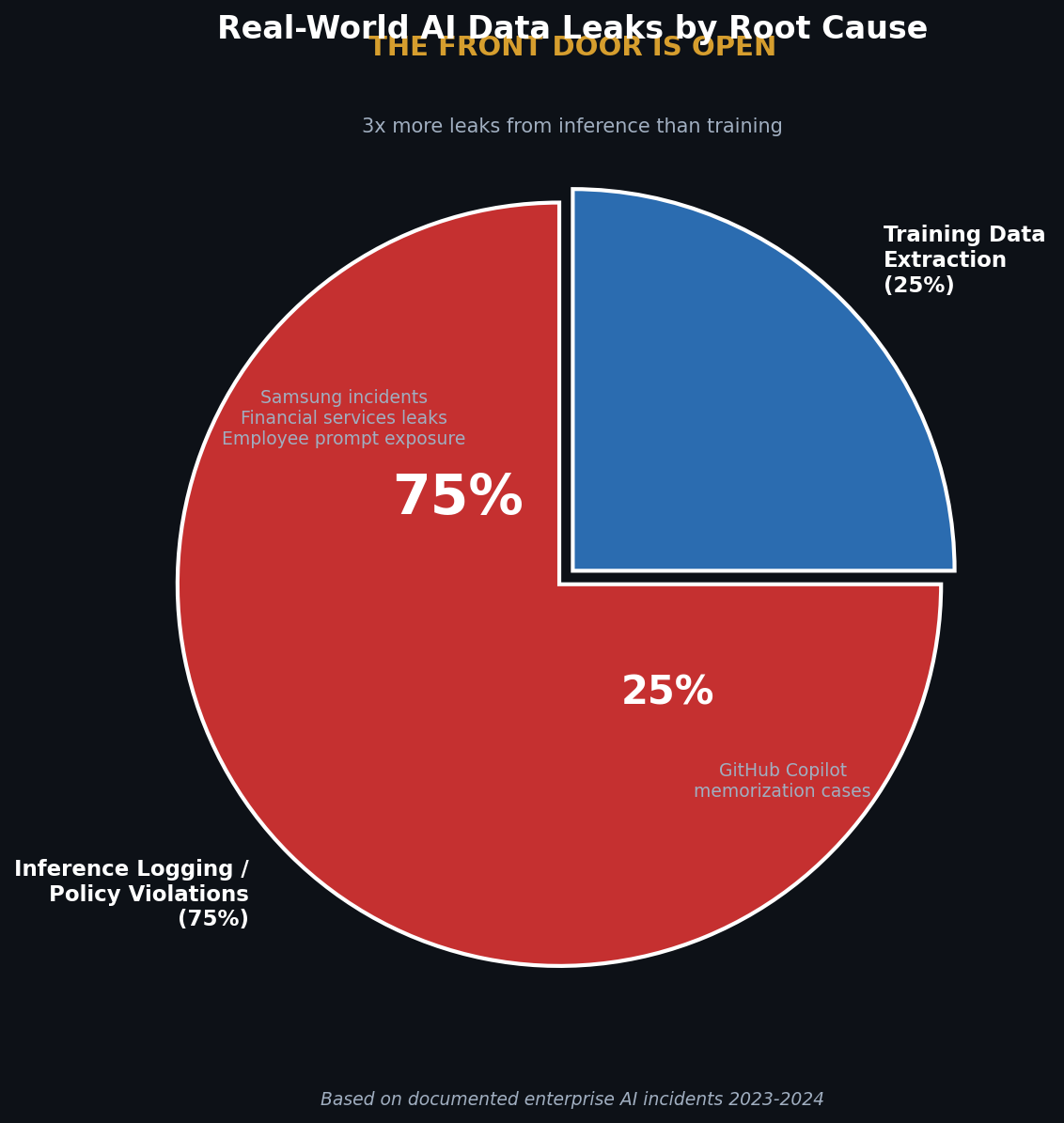

13% of GenAI prompts leak sensitive data at inference while training extraction hits 0.00001%. Evidence-based analysis of where to focus your AI security budget.

Let me save you six months of hand-wringing. You’ve been protecting the wrong door.

I’ve sat through more AI governance meetings than I care to count. Every single one features the same theatrical performance. Legal waves GDPR Article 17 around like a talisman. Procurement demands “no training on our data” clauses with the fervor of medieval peasants warding off vampires. And everyone nods sagely, convinced they’ve addressed the AI risk problem.

Meanwhile, Lasso Security research shows 13% of employee prompts to GenAI chatbots contain sensitive data. Not training datasets. Prompts. Every day. Right now. While you’re reading this, someone in your organization is probably pasting customer PII into ChatGPT because they need help formatting a spreadsheet.

The mathematics of where your data actually leaks should embarrass every security team that spent the last two years obsessing over training data extraction. But it won’t. Because we love our comfortable narratives more than uncomfortable evidence.

The LLM Data Lifecycle: What You Should Have Learned Before That Vendor Demo

Here’s something that drives me up the wall. Security professionals confidently opine about LLM risks without understanding the basic mechanics of how these systems work. They conflate training with inference because no one ever walked them through the machine learning operations pipeline. So let me do that now, since apparently your AI vendors were too busy selling you governance platforms to explain the fundamentals.

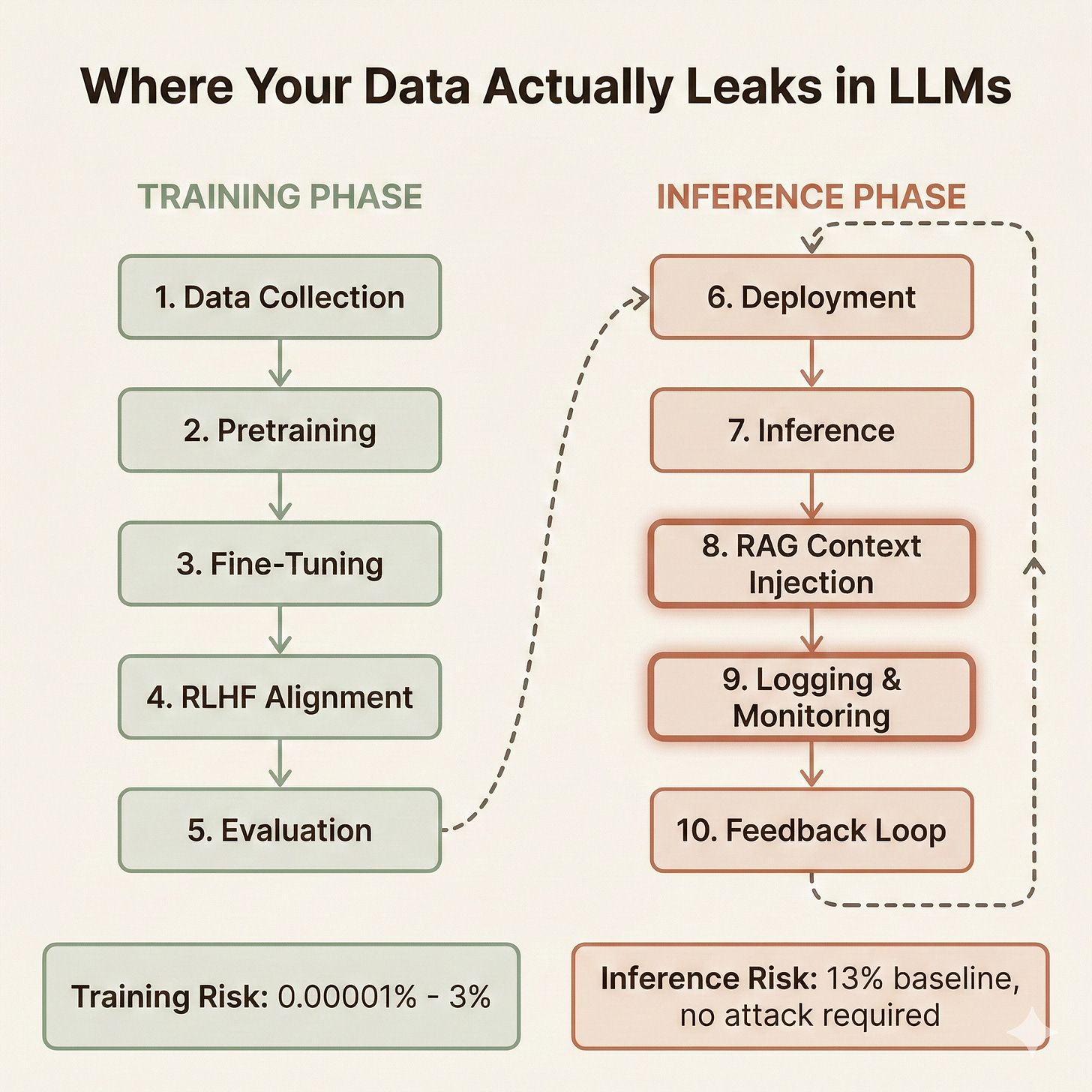

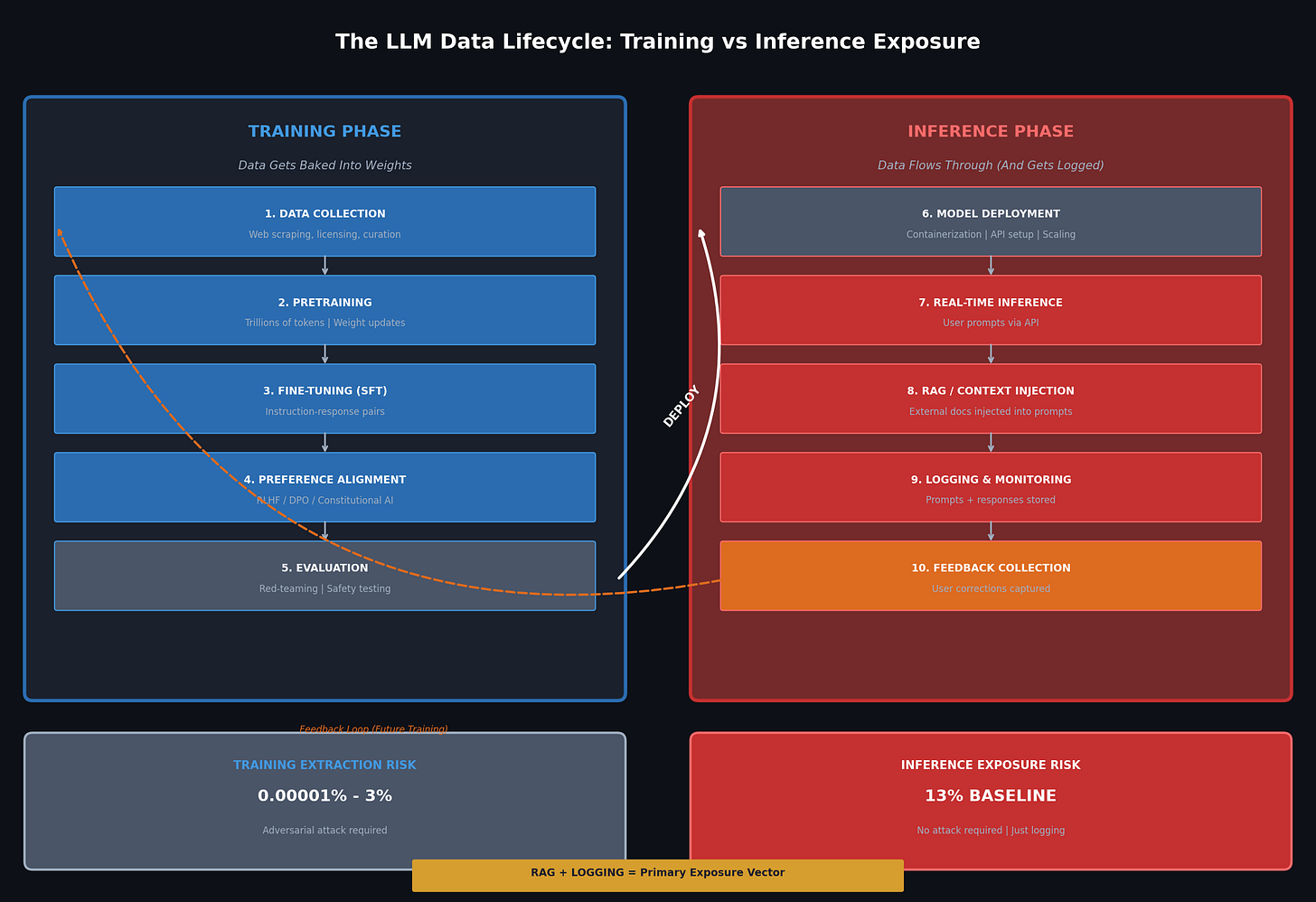

The pipeline divides into two distinct phases with fundamentally different data exposure mechanisms. Understanding this split determines whether your security controls make sense or constitute expensive window dressing.

Training Phase: Where Data Gets Baked Into Weights

Data Collection and Curation happens before anyone touches a model. Someone has to assemble the training corpus. For frontier models, this means scraping the public internet, licensing book datasets, acquiring code repositories, and purchasing proprietary content. Your data enters the pipeline here. If a vendor trained on data that included your documents, this is where the exposure occurred. The curation process involves deduplication, filtering, and quality assessment. Data that survives curation proceeds to training. Data that doesn’t get discarded. But “discarded” in ML pipelines doesn’t mean “securely deleted.” It means “not used for this training run.”

Pretraining is where the paranoia lives. Models consume massive datasets; we’re talking trillions of tokens scraped from the digital detritus of human civilization. GPT-2 trained on 40GB of text. GPT-3 scaled to 570GB. Modern frontier models ingest petabytes. Your data, if it enters here, gets compressed via gradient descent optimization across billions of parameters. The model adjusts its weights to minimize prediction error. Your sensitive document becomes a statistical ghost, distributed across a neural network in ways that make traditional data governance frameworks weep.

This is where the “don’t train on my data” clause comes from. I get it… the intuition makes sense. If my data trains the model, the model might regurgitate my data. Logic checks out, but it’s mostly flawed.

Supervised Fine-Tuning narrows the model’s behavior after pretraining. This stage uses curated instruction-response pairs to teach the model how to follow directions. The datasets are smaller, thousands to millions of examples, versus trillions of pretraining tokens. However, memorization risk actually increases here because the same examples often appear multiple times during training. If your data appears in fine-tuning datasets, extraction probability rises substantially compared to pretraining exposure.

Preference Alignment shapes responses to match human expectations. Reinforcement Learning from Human Feedback, Direct Preference Optimization, Constitutional AI, pick your flavor. Human raters compare model outputs, and the training process adjusts weights accordingly. The data here includes both prompts and human preference signals. Less raw text, but still potential exposure if your content appeared in alignment examples.

Evaluation and Red-Teaming happen after training completes but before deployment. This is adversarial testing to find failure modes, safety issues, and capability gaps. It is not simply validation during training. Red teams probe for jailbreaks, harmful outputs, and yes, training data extraction. If your data appears in both training and evaluation sets, congratulations. You have a contamination problem that nobody in procurement thought to ask about.

Inference Phase: Where Data Flows Through (And Gets Logged)

Model Deployment means containerizing the trained model, spinning up API infrastructure, configuring scaling policies, and connecting authentication systems. The model weights are frozen. No learning happens. But this phase determines how data will flow through the system and, critically, where it will be stored.

Real-Time Inference is where users actually interact. Every prompt submitted. Every response received. The model processes inputs, generates outputs, and moves on. This is what most people picture when they think about using AI. What they don’t picture is everything that happens around inference.

RAG and Context Injection deserve their own callout because most organizations miss them entirely. Retrieval-Augmented Generation means your inference pipeline queries external databases, pulls relevant documents, and injects that content into prompts before sending them to the model. Those “external databases” often contain your proprietary documents, customer records, internal wikis, and knowledge bases.

Here’s what that means for data exposure. When Karen in accounting asks the AI chatbot about expense policy, the RAG system retrieves your expense policy document, injects it into the prompt, sends the combined content to the model, receives a response, and logs the entire transaction. Your proprietary document just flowed through an external API, got combined with user prompts, and landed in a logging system you may not control. No training required. No memorization needed. Direct exposure through the inference pipeline you deployed to be helpful.

Logging and Monitoring is where inference data exposure actually occurs. Production systems log prompts and responses for debugging, abuse detection, quality assurance, and compliance. Those logs contain everything users submitted. Every customer name. Every financial figure. Every code snippet. Every piece of sensitive data that employees paste into the helpful AI assistant.

The 13% figure from Lasso Security? That’s the percentage of prompts containing sensitive data. Those prompts get logged. Stored. Often retained far longer than necessary. Sometimes shared with vendors for “product improvement.” This is the front door standing wide open while everyone obsesses over vault security.

The distinction between training and inference exposure matters because the technical mechanisms differ fundamentally. Training memorization requires your data to survive gradient compression across billions of parameters and then be extractable via adversarial prompting. Inference exposure requires only that employees type sensitive information into text boxes and that someone, somewhere, logs the transaction.

Guess which one happens more often.

How Memorization Actually Works: The Math Your Vendor Didn’t Show You

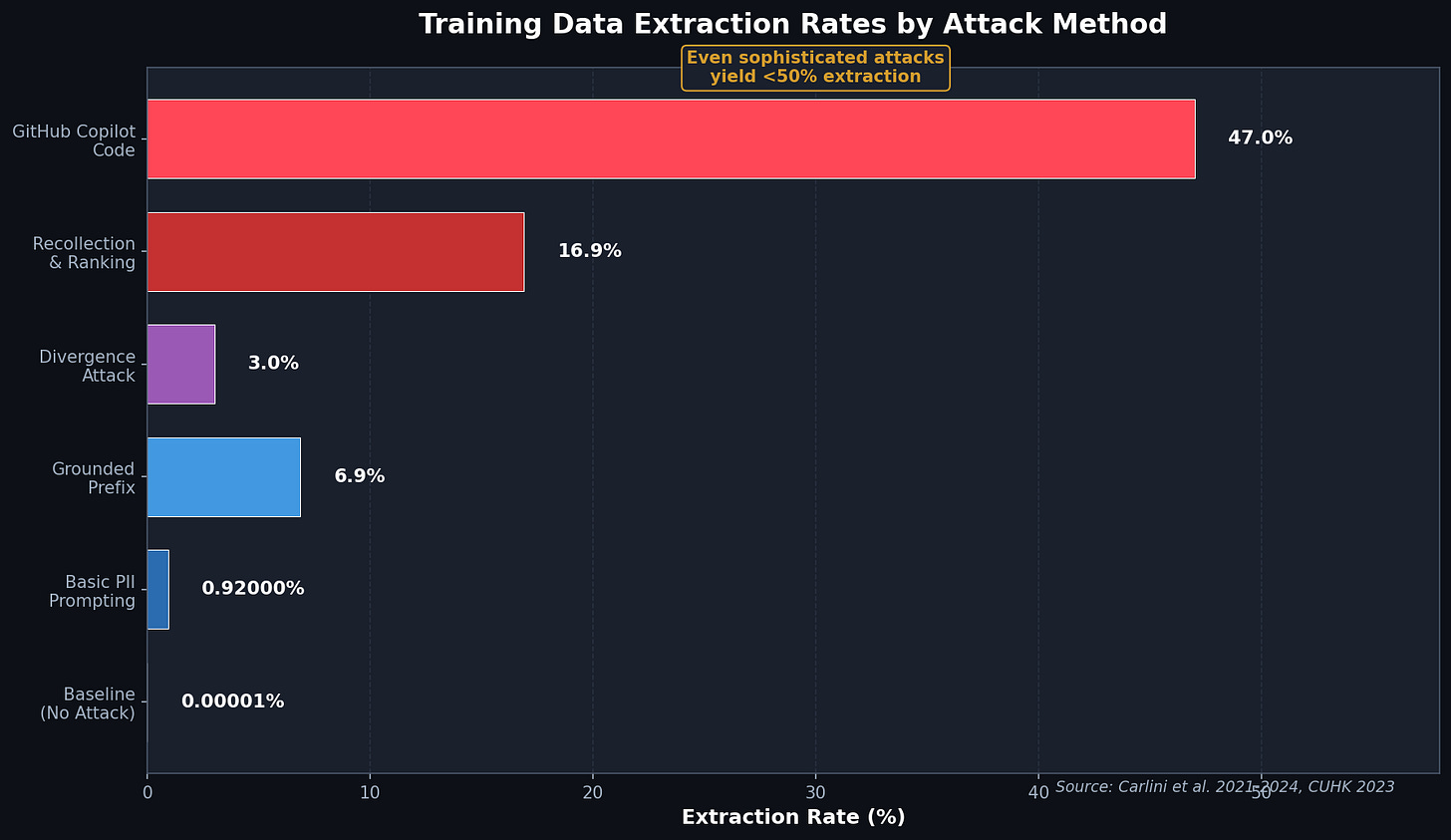

Let me walk you through what happens when your data enters a training corpus. Researchers have quantified exactly how much training data can be extracted from production models, and the numbers don’t support the panic.

Nicholas Carlini and colleagues at Google developed the formal framework that everyone cites, but few have actually read. A string is extractable with $k$ tokens of context from a model if an adversary can prompt the model with a prefix and recover the exact training sequence.

The empirical results should recalibrate your threat model. From GPT-2’s 40GB training set, researchers extracted exactly 604 unique examples. Let me write that as a percentage so it sinks in:

Six hundred four examples from forty billion characters. That’s the threat model you’ve been losing sleep over.

But I’m not going to cherry-pick. Extraction rates depend heavily on attack sophistication. The divergence attack against ChatGPT caused the model to emit memorized training data at 150x the normal rate by prompting the model to repeat a single word indefinitely until it diverged from its aligned behavior. Even with that adversarial technique, only 3% of generated text was memorized content.

The relationship between model scale and memorization follows a log-linear pattern that Carlini’s team quantified:

Where C is a constant dependent on training configuration. This means a 10x increase in model size yields roughly a 19 percentage-point higher extraction rate. Larger models memorize more. The 175B parameter GPT-3 memorizes more than the 1.5B parameter GPT-2. This is real. This matters.

Three factors dominate whether your specific data gets memorized, and understanding these should inform your actual risk assessment rather than your vendor-driven anxiety:

Data duplication is the killer. GPT-2 memorized sequences after just 33 repetitions. The relationship is exponential:

Where…

… varies by model architecture (sorry, Substack doesn’t let me shrink a LaTex block so it fits in line). A 10x increase in repetitions yields extraction rates 25-30 percentage points higher. If your sensitive data appears once in a training corpus of trillions of tokens, your extraction risk approaches zero. If it appears hundreds of times across multiple sources, you have a real problem.

Model scale amplifies everything. Larger models memorize 2-5x more than smaller counterparts. The 6B parameter GPT-Neo extracted 65% of test sequences, compared with 20% for the 125M version. The relationship holds across architectures.

Training dynamics create temporal windows. Models exhibit the highest memorization at the beginning and end of training, with the lowest rates midway through. Early-memorized examples become permanently encoded in lower layers. This has implications for when your data entered the training pipeline, but good luck getting that information from any vendor.

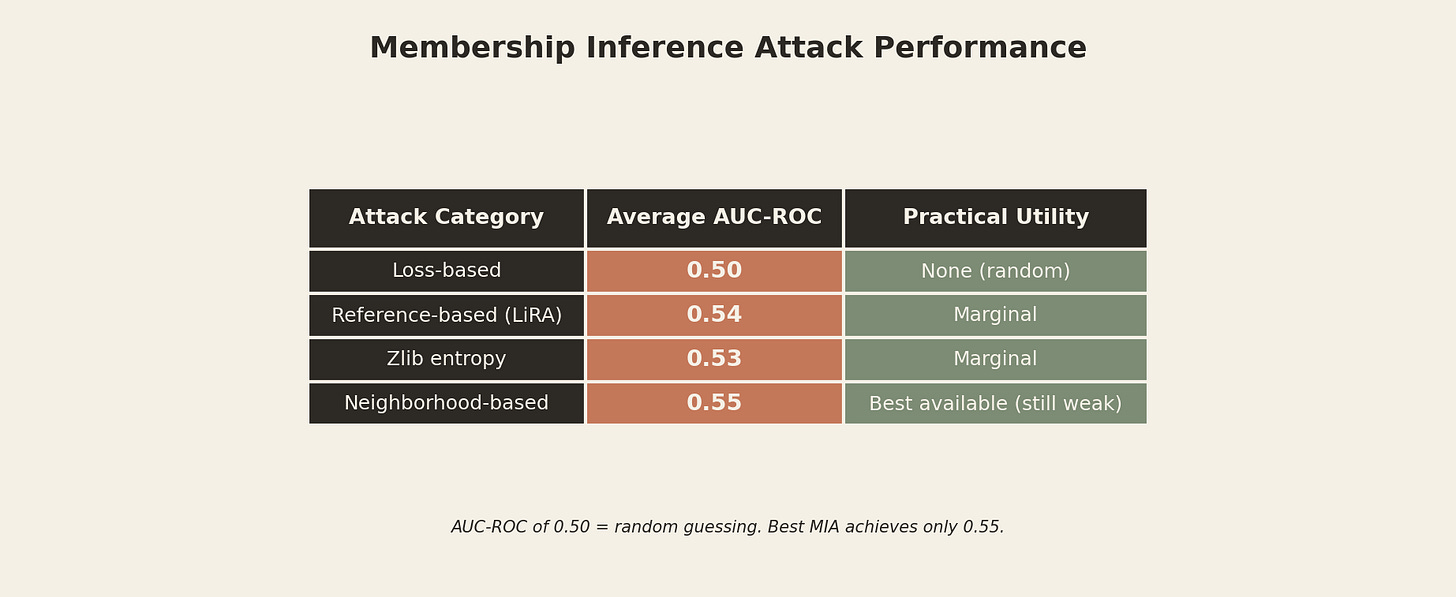

Membership Inference Attacks: A Coin Flip with Extra Steps

Here’s where I get genuinely irritated. Membership inference attacks attempt to determine whether specific data was used in training. These attacks should terrify privacy professionals if they worked.

They don’t work.

State-of-the-art research demonstrates that MIAs barely outperform random guessing for most settings across varying LLM sizes and domains. The best attacks achieve Area Under the Receiver Operating Characteristic Curve (AUC-ROC) scores of 0.50-0.55. For those who skipped statistics class, 0.50 equals flipping a coin.

That’s a 0.05 improvement over pure chance. You’d get better results asking a magic 8-ball.

Why do MIAs fail against LLMs? Two reasons, and both should have been obvious.

First, modern LLMs train on massive datasets for very few epochs. GPT-3-class models see each example maybe once or twice during training. This creates minimal overfitting on individual examples. Without overfitting, the statistical signal that MIAs exploit simply doesn’t exist. The model treats seen and unseen data nearly identically.

Second, the boundary between training and non-training data is fundamentally fuzzy for natural language. Text from the same domain exhibits similar statistical characteristics whether it appeared in training or not. A legal contract looks like a legal contract. Distinguishing membership becomes statistically impossible because the underlying distributions overlap almost completely.

Difficulty calibration techniques can improve MIA performance by up to 0.10 AUC. Even optimized attacks show limited practical utility for large-scale privacy breaches. You cannot reliably determine if your data was in the training set. Full stop.

So why do vendors keep selling you tools to detect training data inclusion? Because fear sells better than evidence.

The Real Threat: What’s Actually Happening While You’re Guarding the Vault

Now let’s examine what actually happens in organizations that deploy LLMs. The Samsung incident from 2023 became the canonical case study, but most coverage missed the point entirely.

Three Samsung semiconductor engineers pasted proprietary code and meeting transcripts into ChatGPT over a 20-day period. One entered buggy source code from a semiconductor database seeking debugging help. Another uploaded program code designed to identify defective equipment for optimization suggestions. A third converted confidential meeting notes into a presentation.

I need you to understand something critical: This was not a training data extraction attack.

Zero adversarial techniques. No sophisticated prompting. No cryptographic attacks on model weights. Engineers simply pasted confidential data into a text box because they wanted help with their jobs, and the AI was right there, waiting and ready to help.

Samsung’s response tells you what they actually learned. They banned public AI tools and implemented a 1,024-byte upload limit. They didn’t hire a team to monitor the extraction of training data. They recognized the threat as operational, not theoretical.

Lasso Security’s analysis of real-world GenAI usage quantified what anyone paying attention already suspected. Their findings, based on data collected between December 2023 and February 2025:

13% of employee prompts contain sensitive data. That’s the baseline exposure rate. No attack required. No adversary needed. Just normal people using AI tools the way normal people use AI tools.

Category breakdown reveals the scope of what’s flowing through inference endpoints:

Code and tokens (API keys, credentials): 30% of sensitive submissions

Network information (IPs, MACs, internal URLs): 38% of sensitive submissions

PII/PCI data (emails, payment information): 11.2% of sensitive submissions

The 2025 IBM Cost of a Data Breach Report found 13% of organizations reported AI-related breaches. Of those breached, 97% lacked proper AI access controls. Not 97% lacked training data extraction monitoring. Ninety-seven percent lacked basic access controls for inference endpoints.

The Probability-Weighted Risk Calculation You Should Have Done Two Years Ago

Let me show you the math that should change your budget allocation. This is basic risk quantification. The kind of analysis you’d do for any other security domain. But somehow AI got a pass on quantitative rigor.

Risk = Likelihood × Severity

CISSP 101

For training data extraction:

Where:

Risk Score: Low to Medium

For inference data exposure:

Where:

Risk Score: High to Critical

The risk ratio tells the story:

Even comparing against sophisticated adversarial extraction at 3%, inference risk remains over 4x higher. Against baseline extraction rates, inference exposure presents nearly a million times higher probability. And inference exposure requires zero technical sophistication. It requires only that employees do their jobs using the tools you’ve given them.

Does your security budget allocation reflect this ratio? If not, you’re defending based on narrative rather than evidence.

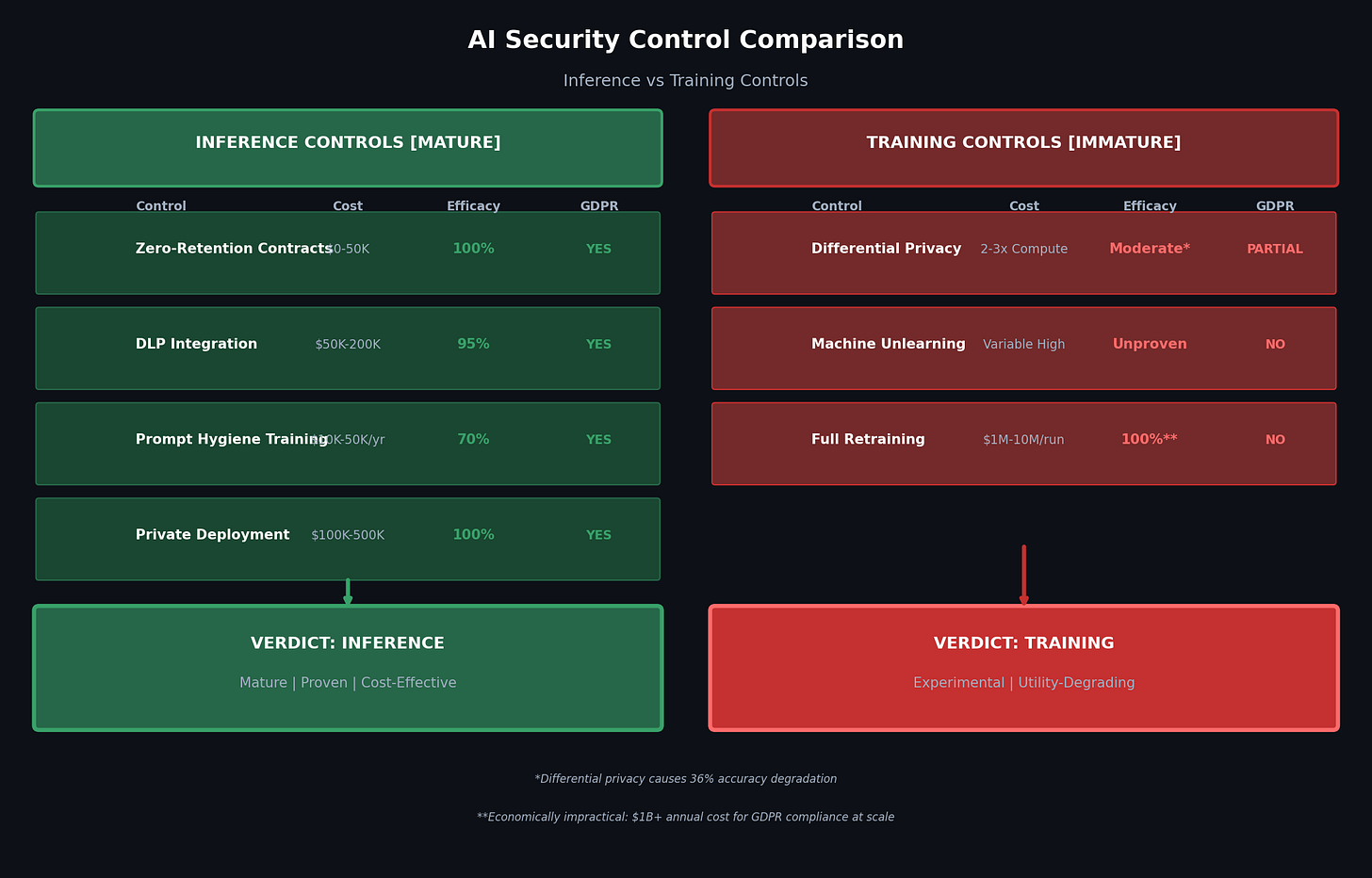

Control Efficacy: Why You Can Fix Inference But Not Training

The asymmetry extends beyond probability to controllability, and this is where the grumpy uncle in me really wants to shake some sense into the industry.

Inference controls are mature, proven, and cost-effective:

Zero-retention contracts exist. OpenAI’s API offers enterprise customers explicit no-data-retention options. Azure OpenAI provides stateless inference with double encryption. AWS Bedrock offers similar guarantees. You can contractually eliminate inference data retention today. The cost ranges from $0 (API configuration) to $50K (enterprise agreement negotiation).

Traditional DLP integration doesn’t work. Your existing stack watches file transfers and email attachments. Prompts are ephemeral text in encrypted API calls that legacy DLP never sees. What works is AI-native guardrails. Input validation that scans prompts before the model processes them. Output filtering that inspects responses before users receive them. These aren’t DLP products with “AI” slapped on the label. They’re purpose-built controls that understand prompt structure, embedding semantics, and conversational context. Cost: $50K-200K one-time plus annual licensing. Deployment time: weeks to months. Efficacy: 95%+ detection of sensitive data patterns when properly tuned.

Targeted prompt hygiene coaching changes behavior. Not a 45-minute module. Three rules, live examples, quarterly reinforcement. $10K-50K annually. ROI shows up in your logs within quarters.

Private deployment eliminates external data flow entirely. Run the model in your VPC. Air-gap if you’re paranoid. Cost: $100K-millions depending on scale. Efficacy: 100% against external exposure.

Total efficacy for inference controls: 100% risk reduction achievable. GDPR compliant. ISO 42001 auditable. HIPAA addressable. These are solved problems.

Training controls remain experimental and break your models:

Differential privacy provides mathematical guarantees that sound great in papers. The practical reality? Research shows 36% accuracy degradation for meaningful privacy protection at ε=10 (where lower epsilon means better privacy but worse model performance)

Training overhead increases 2-3x in compute cost. You’re paying more for a worse model that might protect against a threat that’s already unlikely.

Machine unlearning “significantly degrades model utility” and “scales poorly with forget set sizes.” Direct quote from the research literature. No vendor will tell you this because they’re selling you unlearning solutions.

Retraining from scratch costs $1M-10M per GPT-3-class training run. For 1,000 GDPR erasure requests per year (a modest estimate for any company with European customers), full compliance through retraining would cost:

That’s not a typo. Five billion dollars annually for GDPR training data compliance. The EU AI Act acknowledges this reality with careful language: “Once personal data is used to train AI model, deletion may not be possible.”

For inference logs? You delete them from the database. Query complete. Compliance achieved. Cost: approximately nothing.

Where Security Budgets Should Actually Go

The evidence demands a resource reallocation that most CISOs haven’t made because they’re still managing to the narrative rather than the data.

I don’t have a survey that breaks down AI security spending by lifecycle phase. Nobody does. That research gap tells you something about where the industry’s head is at. We’re still debating whether training data extraction is a real threat, while 13% of employees paste sensitive data into GenAI tools every day.

Here’s what I see in practice: security teams obsess over model security, training data provenance, and adversarial attacks that require nation-state capabilities to execute. Meanwhile, inference-time data governance gets a fraction of the attention despite representing the overwhelming majority of actual data exposure.

This allocation is backwards. It’s like spending most of your physical security budget on vault doors while leaving the loading dock propped open.

The fix isn’t complicated. Shift focus from theoretical training-time attacks to the inference-time exposures happening right now:

Inference data governance comes first. Forget legacy DLP. It watches the perimeter while your data evaporates into prompts. You need prompt guardrails that scan input for sensitive patterns before processing, output filters that inspect model responses for data leakage, and audit logging for every AI interaction. Implement zero-retention contracts with every AI vendor. The UK NCSC calls this “radical transparency” for a reason.

Prompt hygiene training changes behavior. This isn’t another awareness program destined for the ignore pile. Prompt hygiene is a discrete skill with immediate feedback. Three rules, live examples from your own environment, quarterly reinforcement. $10K-50K annually. Behavior changes because employees see the flags in real time, not because they clicked through a module.

Shadow AI visibility is non-negotiable. You can’t govern what you can’t see. Inventory every AI tool touching your environment, sanctioned or not.

Training data monitoring is maintenance, not a priority. Keep the controls you have. Don’t expand investment here until you’ve closed the inference gaps.

Your legal team negotiated “no training” clauses because they understood GDPR Article 17 implications. That was appropriate due diligence. But that clause doesn’t protect you from the engineer who pastes customer data into ChatGPT tomorrow morning because their deadline is today and the AI is helpful.

The vault door is secure. Your “no training” clause is airtight. Meanwhile, 13% of your workforce submits sensitive data through the front door every single day, and you’re congratulating yourself on your AI governance program.

I’ve been doing this long enough to know that most readers will nod along, agree with the analysis, and change nothing. The narrative is comfortable. The vendor demos are compelling. The boardroom expects training data extraction theater.

But for those of you willing to follow the evidence: your data leaks at inference. Fix that first. Fix that now. The training data extraction problem is real but rare. The inference exposure problem is certain and ongoing.

Key Takeaway: Training data extraction is a lottery you probably won’t lose. Inference data exposure is a certainty you’re experiencing right now. Stop guarding the vault and secure the front door.

What to do next

Your AI governance posture needs an honest assessment, not another vendor demo. The CARE Framework provides a structured approach to evaluating AI risk across the dimensions that actually matter. Use it to audit where your current controls focus versus where the evidence says your risks concentrate.

If your organization needs an external perspective - someone willing to tell you what your vendors won’t - RockCyber offers AI governance assessments built on the quantitative analysis in this post. We’ll tell you where your budget should go, not where your current vendors want it to go.

👉 Subscribe for more AI security and governance insights with the occasional rant.

👉 Visit RockCyber.com to learn more about how we can help you in your traditional Cybersecurity and AI Security and Governance Journey

👉 Want to save a quick $100K? Check out our AI Governance Tools at AIGovernanceToolkit.com

A great article touching essential and overlooked governance areas! No, I do not understand the calculations, but the concepts you elaborate on really resonate.