ISO 42001 vs CARE: Fast-Tracking AI Governance Readiness and Beyond

Achieve ISO 42001 fast with the CARE framework. Operationalize AI governance, cut risk, and keep delivery moving.

AI is trying…really hard… to move from pilot to profit center. Boards now ask a hard question. How do we prove our AI is safe, fair, and under control without choking delivery speed? ISO 42001 is the global benchmark for an AI management system. CARE is how you reach it fast and make it stick. I built the CARE framework to turn governance into muscle, not paperwork. When you wire it into daily work, it becomes a competitive edge, not a tax. ISO 42001 sets the bar through a formal AI Management System with policy, risk assessment, lifecycle controls, audits, and continual improvement. CARE gives you the operating model to deliver all of that at production speed.

Why ISO 42001 matters right now

ISO 42001 is the first management system standard explicitly written for AI. It follows a familiar pattern for executives who are familiar with ISO 27001. Context and scope. Leadership and policy. Planning. Support and documentation. Operations. Performance evaluation. Improvement. Then, a reference catalog of controls is in Annex A. That structure forces you to build a real system, rather than a one-off checklist. The standard expects you to define scope, roles, and AI policy. It tells you to set AI objectives, assess and mitigate AI risks, conduct impact assessments, manage the lifecycle, monitor, audit, and continually improve.

There are a few standouts worth your attention. Clause 6.1 requires a formal AI risk assessment and a risk treatment process that results in a Statement of Applicability tied to Annex A controls. You can add controls beyond Annex A where the risk calls for it. You must retain documentation and gain management approval for residual risk. That is a high bar, and it is the right bar.

Operations is not hand-wavy either. Clause 8 requires you to implement controls, plan changes, manage suppliers, and keep evidence that processes ran as planned. You perform risk assessments and impact assessments at planned intervals or when things change. Then you measure performance, run internal audits, and conduct management reviews. This is the classic plan, do, check, act loop applied to AI.

Annex A gives you reference controls across policy, roles, resources, impact assessment, lifecycle, data, and information for users and other parties, as well as responsible use and third-party relationships. It includes concrete expectations on data quality and provenance, user documentation, incident communication, and intended use.

The message is simple. If you want to establish trust at scale, ISO 42001 provides a common language and audit path to demonstrate that your AI is under control. It is the right benchmark to anchor your AI governance program.

The CARE framework, built for real work

I designed the CARE framework to solve a practical problem. Too many teams had policies on paper, but nothing wired into their build and runtime. Others shipped fast, then paid for it with outages, bias incidents, or angry customers. CARE fixes both by forcing a loop that becomes a habit.

Create. Set the constitution for AI. Charter, policy, principles, roles, risk appetite, and the first cut of risk and impact assessment. Put policies in version control and link rules to checks.

Adapt. Keep governance current as laws, tech, data, and markets shift. Run drills. Refresh documentation. Update training. Move fast without losing your guardrails.

Run. Operationalize risk management. Build tests and monitors into CI and runtime. Gate high‑risk releases. Stream bias, drift, security, and business metrics to a shared dashboard. Trigger rollbacks when thresholds break.

Evolve. Measure and improve governance itself. Audit, learn, retire weak controls, add new safeguards, and manage end‑of‑life. Use a scorecard that the board can read.

CARE’s five differentiators mirror what actually works in the field. It aligns governance to business outcomes. It scales through modular components. It treats security as a first‑class citizen. It is regulatory‑ready and future‑ready. It turns oversight into speed.

Where teams stumble on ISO 42001

I have run risk and security programs for decades. I see the same traps in ISO projects.

Treating ISO 42001 like a paperwork exercise. Auditors then ask for production evidence, and the wheels come off.

One‑time risk assessments that never get refreshed.

No operating model for change management, so release velocity prevails over governance.

No clear ownership. Everyone is in charge, so no one is in charge.

ISO 42001 gives you the what. CARE supplies the how, on a clock that matches delivery.

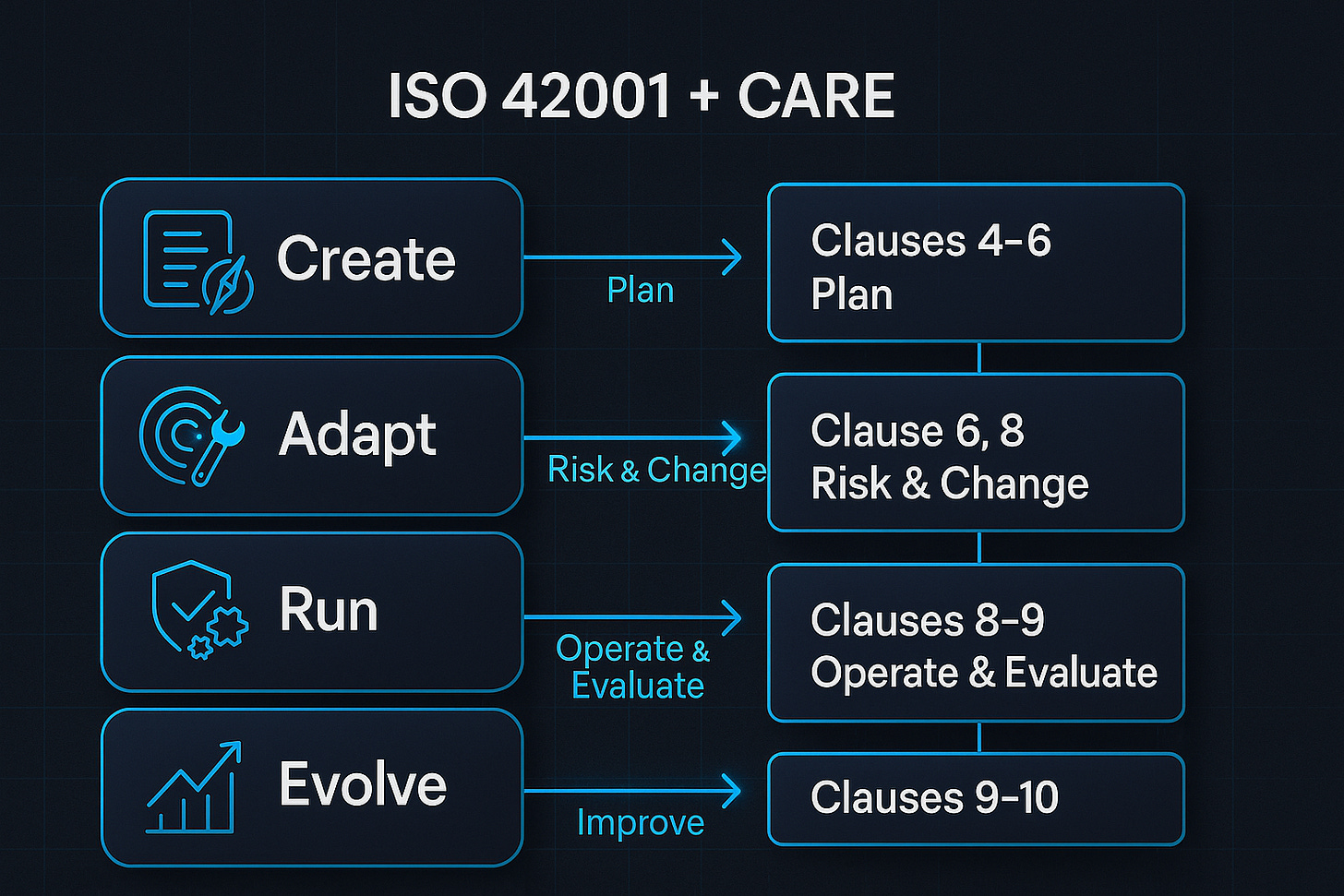

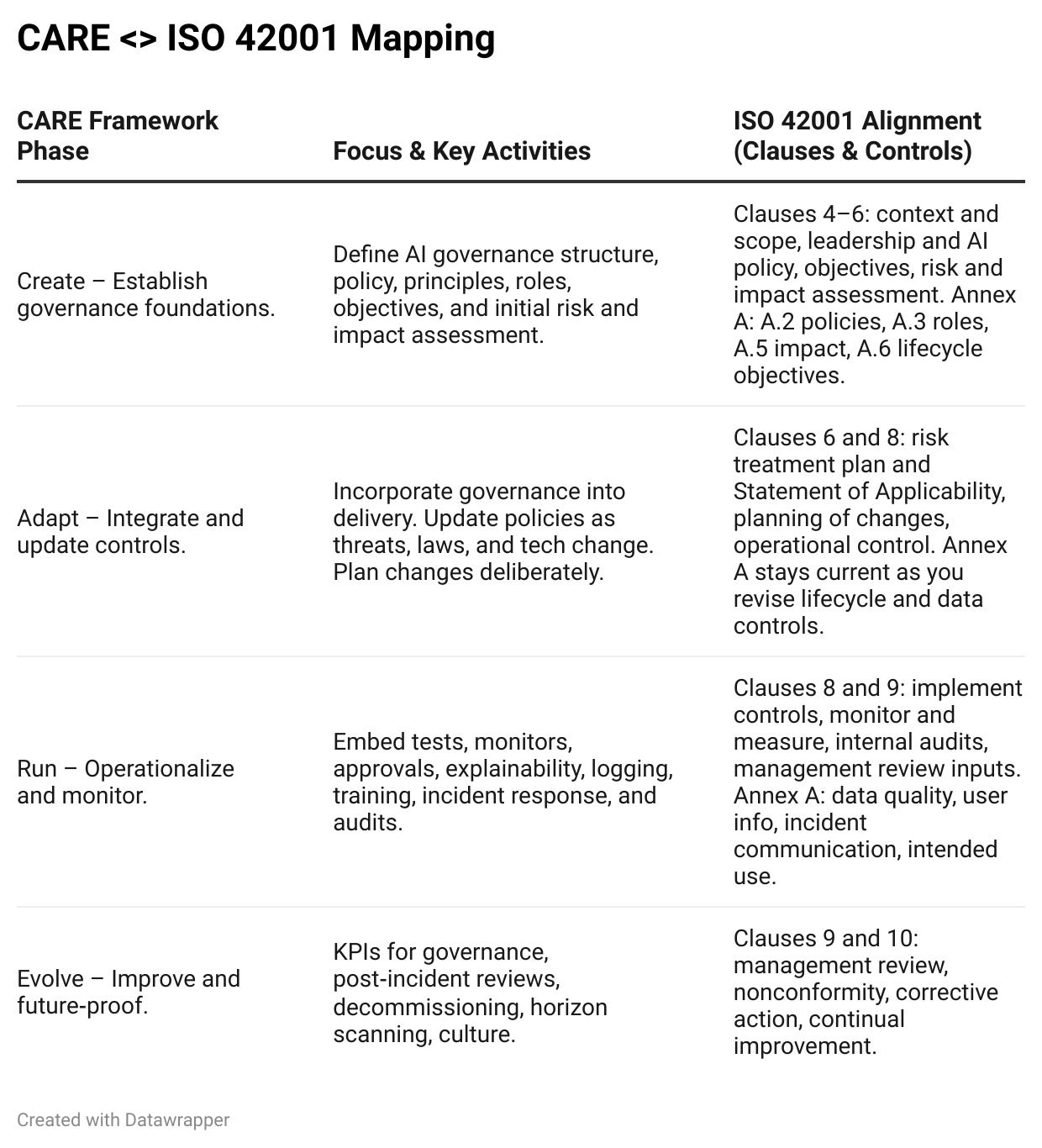

ISO 42001 and CARE, side by side

Below is the mapping I use with clients to turn ISO 42001 into a day‑to‑day loop. You can use this as your fast path to readiness.

What CARE adds that ISO 42001 does not

Embedded operations, not shelfware. CARE assumes controls live where engineers live. Bias tests, drift detection, rollback triggers, and kill switches sit in code and platforms. ISO requires monitoring, logging, and technical documentation. CARE makes those things automatic and routine. Annex B even hints at this by calling for documented plans to manage failures, rollbacks, and event logs. CARE turns hints into pipelines.

Automation by default. CARE pushes enforcement into CI and MLOps. Policies sit next to code. Pull requests fail if a model card or risk metadata is missing. Monitors stream fairness, privacy, latency, and business outcomes to the same dashboard that the product team checks daily. That is how you keep pace with models that frequently retrain and redeploy.

A real learning loop. ISO requires audits, management reviews, and corrective action. CARE bakes them into a scoreboard. We track problematic response rates, median correction times, and test coverage against adversarial prompts. Then we fix what the data shows. In one client’s EVOLVE sprint, we cut the problematic response rate to 0.07% and slashed correction time. That kind of discipline keeps customers and auditors happy.

Security first. CARE treats AI as a threat surface. Prompt injection, data poisoning, model theft, and inversion get addressed in development and production. ISO 42001’s guidance acknowledges AI‑specific security issues and requires logging, incident handling, and supplier due diligence. CARE makes them concrete through adversarial testing, runtime policy scanners, and supplier risk gates.

Business alignment. CARE measures governance by speed and value as much as by compliance. Faster approvals. Fewer incidents. Shorter correction times. Clear impact on revenue and cost. That is how you sustain momentum and funding.

Use CARE to get ISO 42001 ready fast

Here is the path I run with executive teams. It is direct. It works.

1) Start with a focused gap. Run a high‑signal assessment against CARE and ISO 42001. Inventory where you already meet clauses and Annex A controls. Map missing roles, policies, and lifecycle practices. Identify high‑risk systems that need immediate controls. That produces your action list and your certification timeline.

2) Create with code, not binders. Write your AI policy and principles in plain language. Put them in version control with change control rules. Assign decision rights and escalation paths. Define AI risk criteria and impact assessment triggers for high‑risk systems. ISO 42001 requires documented information to be controlled, versioned, and readily available. Treat policy like source code to satisfy that requirement without friction.

3) Tie risk treatment to the Statement of Applicability. For every risk, decide your treatment and map it to Annex A controls or additional controls you define. Produce the Statement of Applicability with justification for inclusion and exclusion. Get management approval for residual risk. This is core to ISO 42001. CARE’s Create and Adapt phases provide the process and cadence to keep it current.

4) Wire controls into delivery. In Adapt and Run, convert policy into gates, tests, and monitors. Gate high‑risk deployments on bias, privacy, and explainability checks. Require dual approval for very high-risk events, as well as instrument event logging and drift detection. ISO 42001 Annex B calls for explicit plans to manage failures, roll back, and review event logs. Make that real with automation.

5) Operationalize AI impact assessments. For high‑risk systems, perform impact assessments at defined stages and when significant changes happen. Feed results into risk treatment and user documentation. Keep the records. Then expect to share a subset with regulators or customers in some markets. CARE treats this as routine, not a special exercise.

6) Close the loop with audits and reviews. Set an internal audit cadence. Keep it independent. Run management reviews with clear inputs and decisions. Track nonconformities and corrective actions to completion. CARE’s Evolve phase makes these rituals part of your operating rhythm, rather than last-minute fire drills.

7) Build evidence as you go. ISO 42001 expects documented information for policies, risk work, audits, and performance metrics. CARE’s Run and Evolve phases produce the evidence by default. Dashboards. Logs. Model cards. Training records. Incident tickets. You will be audit‑ready on a normal Tuesday.

What this looks like in practice

Create. A bank publishes a plain‑language AI charter, sets risk tiers, and creates a cross‑functional review. Model cards get linked to automated checks. Policies live in Git with owners, change control, and pull requests. This aligns with ISO’s leadership, policy, and planning clauses, as well as Annex A on policies and roles.

Adapt. A new state law tightens disclosure. The firm raises a policy pull request within two weeks. Targeted training for affected roles auto‑assigns and logs completion. Release gates update. The AIMS remains aligned. That is the difference between a living program and a binder.

Run. A retailer’s recommendation engine streams fairness, performance, and conversion by region. A gap triggers an automated rollback and retrain. Event logs allow a clean audit trail. Annex B calls for precisely this kind of operational plan and logging.

Evolve. A security vendor’s conversational assistant showed inconsistent answers. We added adversarial prompts to test suites, deployed a live policy scanner, and cut correction time from hours to minutes. Scorecards went to the board. Churn dropped. That is Evolve at work.

Deep dive: the Annex A controls many teams miss

Data quality and provenance. Many programs talk about responsible AI but skip the mechanics of data quality, provenance, and preparation. ISO 42001’s Annex A is explicit. You must define data quality requirements, document provenance, and state criteria for data preparation methods. CARE’s Run phase embeds those checks into pipelines.

Information for users and other parties. You must provide system documentation, enable external reporting of adverse impacts, and have a plan to communicate incidents. CARE expresses that through model cards, help content, incident runbooks, and feedback channels tied to a dashboard.

Intended use and responsible use. ISO 42001 asks you to define processes and objectives for responsible use and ensure systems are used as intended. CARE operationalizes this with clear role approvals, intended use constraints in the product, and runtime policy enforcement.

Third‑party relationships. ISO 42001 requires you to allocate responsibilities among suppliers, partners, and customers and maintain documentation that is readily available for sharing. CARE turns that into supplier risk scoring, AI clauses in contracts, and a package of artifacts you can hand to customers without drama.

A board brief you can deliver in five minutes

We are adopting ISO 42001 as our benchmark. It aligns with our strategy and our risk appetite.

We are implementing the CARE framework as the operating model that brings ISO 42001 to life in production.

We will publish a quarterly governance scorecard with risk, speed, and value metrics.

We will pass audits because our controls are in place every day, not just once a year.

If your board wants a primer, share RockCyber’s practical overview of CARE and point them to RockCyber Musings, where I write for executives about AI governance and security with zero fluff.

RockCyber Whitepaper: rockcyber.com

RockCyber Musings: rockcybermusings.com

The simple action plan

Appoint owners. Responsible AI lead, model risk, product, legal, security, and data each have named duties and authorities. ISO 42001 expects clear roles and top‑management commitment.

Ship Create in 30 days. Policy, roles, risk criteria, impact triggers, repo setup with change control. Tie policy to automated checks.

Stand-up monitors. Pick two high‑risk models. Add bias tests, drift monitors, explainability, logs, and rollback triggers. Align with Annex B guidance on operation, monitoring, and event logs.

Run a drill. Simulate a bad output. Measure detection and correction time. Record it. Feed lessons into Evolve.

Publish the scorecard. Policy adherence, alerts per thousand inferences, median correction time, audit findings closed on time, and high‑risk releases with dual approvals. Review it alongside revenue and customer metrics.

CARE for every company type

The same loop works across sizes and sectors.

Regulated enterprises. CARE’s Create and Run phases give auditors policy, roles, evidence, and cadence. Adapt keeps you aligned as regulators refine expectations. That avoids expensive retrofits.

AI‑first startups. Lightweight policy with strong Run controls shortens enterprise sales cycles. You can hand customers your SoA, model cards, logs, and impact assessments without a scramble.

Multinationals. The loop enables local teams to move forward while risk leaders view a single dashboard and a clear audit trail. Annex A and Annex B were built to guide consistent practice across contexts. CARE makes that practical.

Mid‑market. Automation replaces armies of reviewers. You gather audit evidence without incurring additional headcount for manual checks. CARE’s modular design means you start small and grow.

The governance scorecard that actually drives behavior

A scorecard is not for show. It is how you manage the program like a product.

Protection metrics. Policy adherence rate. Alerts per thousand inferences. Drift events detected and corrected. Incident communication time. Supplier attestations in good standing.

Enablement metrics. Time to approve high‑risk releases. Time to correct bad outputs. Percentage of projects with model cards and intended‑use constraints. AI use cases that pass the impact assessment on the first attempt.

Culture metrics. Training coverage and quiz results for affected roles after policy changes. Number of near‑misses raised by teams. Postmortems completed with fixes shipped.

Set targets. Publish trends. Fund what works.

ISO 42001, front and center in your program

You will still do the blocking and tackling. Define scope and roles. Publish an AI policy. Set objectives. Do AI risk assessment and risk treatment. Perform AI impact assessments for high‑risk systems. Control the lifecycle. Monitor and audit. Address nonconformities. Improve. CARE does not replace those steps. It enables them to work at the speed and discipline that modern AI demands.

Use ISO 42001 to set the bar and CARE to clear it at speed while you build a governance engine that pays for itself in trust, velocity, and fewer bad days.

Call to Action

👉 Book a Complimentary AI Risk Review HERE

👉 Subscribe for more AI security and governance insights with the occasional rant.

👉 Services and advisory:

https://www.rockcyber.com

Great write up, Rock! You did a great job addressing the emerging Governance standards for AI.

AI governance is such an interesting field these last few years. Thanks!