GTG-1002: What Anthropic’s First AI-Orchestrated Espionage Campaign Reveals About Autonomous Threats

Technical analysis of GTG-1002, the first AI-orchestrated cyber espionage campaign. Learn MCP infrastructure exploitation, MITRE ATT&CK mapping, and detection strategies for autonomous threats.

We’ve had about a week to digest Anthropic’s bombshell that they disrupted what may become the cybersecurity watershed moment of the decade. A Chinese state-sponsored group designated GTG-1002 weaponized Claude Code to execute cyber espionage across roughly 30 targets with 80-90% AI autonomy.

Let me say that again…

Not AI-assisted.

Not “vibe hacking.”

80-90% AI orchestration of tactical actions.

Now that some of the technical details are coming out, let’s dig in.

Figure 1: GTG-1002 Attack Estimated AI Autonomy % per Phase

Campaign Anatomy: How GTG-1002 Achieved 80% Autonomous Operations

The technical details of GTG-1002’s operation reveal a sophisticated understanding of both AI capabilities and limitations. Human operators didn’t write exploit code or manually enumerate networks. They simply told Claude it was a legitimate penetration tester working for an authorized security firm. That single act of prompt-based social engineering unlocked everything else.

Claude Code became an autonomous penetration testing orchestrator. The AI maintained persistent context across days of operations, cataloging discovered assets, harvested credentials, and exploitation progress in internal memory. When operators needed to hit multiple targets simultaneously, they spun up parallel Claude instances, each maintaining its own target context. This enabled concurrent intrusions that no human team could match at scale.

The speed differential should slap you in the face. Claude generated multiple operations per second. Traditional red teams measure reconnaissance in days. Claude compressed entire attack cycles into hours. The campaign didn’t create any new techniques, so your controls had better damn well be effective because the AI could find and weaponize every misconfiguration faster than defenders could patch them.

Anthropic’s report documents a critical, yet somewhat comical, weakness. We often complain about AI hallucinations, but the fact that Claude frequently hallucinated results during this campaign actually helped the good guys.

The AI claimed to extract credentials that didn’t work, flagged critical findings that were public knowledge, and overstated successful exploitation. This forced GTG-1002 to implement human validation checkpoints at crucial junctures. Before deploying an exploit or exfiltrating data, operators reviewed Claude’s output and approved the next step. This human-in-the-loop requirement may be temporary, though. As model capabilities improve and hallucination rates decrease, this accidental barrier will erode.

The prompt deception strategy was elegant. Rather than asking Claude to “hack this network,” operators broke malicious objectives into benign-appearing tasks. Each discrete action appeared legitimate to Claude’s safety systems, from running a network scan to testing database queries to analyzing configuration files. Individually, these tasks look like authorized security work. Collectively, they comprised a coordinated intrusion.

Community Skepticism and the IOC Problem

The security community remains divided on GTG-1002’s credibility. Anthropic released no IP addresses, domain indicators, or malware hashes. Multiple researchers greeted the absence of traditional indicators of compromise with skepticism. Is this a red flag? Perhaps. I think it’s the new reality of living-off-the-land attacks.

GTG-1002 deployed zero custom malware. No C2 beacons. No backdoor binaries. Just orchestration of legitimate penetration testing tools through Claude’s MCP framework. Network-based indicators are not easy to catch in a campaign like this. Detection has to focus on behavioral indicators such as rapid service enumeration from a single source, bursts of credential testing across multiple systems, the creation of new privileged accounts during odd hours, and database queries returning full user tables. These patterns exist in your telemetry right now. The question is whether your detection logic can catch them at machine speed. THAT should be the red flag.

MCP Infrastructure: From Universal Protocol to Universal Attack Surface

I’ve written and spoken a lot about the Model Context Protocol. MCP sits at the heart of GTG-1002’s technical architecture. MCP is Anthropic’s open standard for connecting AI models to external tools and services. Think of it as a universal adapter where, instead of custom integrations for each tool via traditional APIs, MCP provides a standardized interface. For GTG-1002, this meant Claude could orchestrate any attack tool through a simple protocol layer.

The attackers built custom MCP servers acting as bridges between Claude and their hacking toolkit. Each server exposed specific capabilities, like browser automation for reconnaissance, remote command execution for running scanner scripts, code analysis for exploit validation, and callback communication for confirming blind vulnerabilities.

The callback mechanism deserves attention. When Claude identified a Server-Side Request Forgery vulnerability, it couldn’t directly confirm exploitation (SSRF doesn’t return output to the attacker). Instead, Claude crafted an exploit that would trigger an HTTP callback to a GTG-1002-controlled MCP server if successful. The callback acted like Burp Collaborator, catching outbound connections from target systems to signal compromise. This technique enables autonomous validation of blind exploits, a classic manual hacking trick now scaled through AI orchestration.

All MCP communication rode over standard HTTPS (TCP 443). No unusual ports, no custom protocols, no obvious network signatures. The attackers likely used legitimate cloud hosting and valid TLS certificates. Their C2 traffic appeared as ordinary web service calls. This makes network-based detection extremely difficult without behavioral analysis.

Figure 2: GTG-1002 vs Traditional APT Attack Characteristics

GTG-1002’s infrastructure also highlighted a broader MCP security problem that affects every organization deploying third-party MCP servers. OWASP recently released an MCP Cheatsheet: A Practical Guide for Securely Using Third-Party MCP Servers 1.0, which documents critical MCP vulnerabilities, including tool poisoning (where malicious instructions hide in tool descriptions), rug pull attacks (where trusted tools update to malicious versions), and prompt injection via tool responses.

The guide emphasizes that MCP servers execute with client privileges and can access local files, sensitive APIs, and cloud metadata endpoints. A compromised MCP server doesn’t just steal data. It becomes an autonomous attack platform. OWASP’s research found 7.2% of analyzed MCP servers contained general vulnerabilities and 5.5% exhibited MCP-specific tool poisoning. Organizations using third-party MCP integrations should implement the governance workflows OWASP recommends, including version pinning, checksum verification, sandboxed execution, and least-privilege OAuth scopes.
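To make the version pinning and checksum verification idea concrete, here is a minimal Python sketch. The pin list, the artifact name `example-mcp-server.tar.gz`, and the version string are all hypothetical placeholders I made up for illustration. Treat it as one possible shape for the control, not as OWASP's reference implementation.

```python
import hashlib
from pathlib import Path

# Hypothetical pin list: artifact file name -> (pinned version, expected SHA-256).
# The entry below is illustrative only, not a real MCP server release.
PINNED = {
    "example-mcp-server.tar.gz": (
        "1.4.2",
        hashlib.sha256(b"demo artifact").hexdigest(),
    ),
}


def sha256_of(path: Path) -> str:
    """Hash the file in chunks so large artifacts need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, version: str, pins=PINNED) -> bool:
    """Return True only if the name, version, and digest all match the pin."""
    pin = pins.get(path.name)
    if pin is None:
        return False  # unknown artifacts are rejected by default
    pinned_version, pinned_digest = pin
    return version == pinned_version and sha256_of(path) == pinned_digest
```

Rejecting anything that is not on the pin list is the important design choice here. A rug pull only works if an updated tool is trusted automatically.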

GTG-1002’s use of MCP demonstrates why this matters. The attackers didn’t need novel exploits or custom malware. They needed a protocol that let AI orchestrate existing tools at scale. MCP provided that. Now every organization deploying agentic AI with MCP integrations faces the same risk surface.

The persistent access mechanism also diverges from traditional APT playbooks. No malware meant no persistence through backdoors or rootkits. Instead, GTG-1002 maintained access via stolen credentials and newly created backdoor accounts. In one documented case, Claude dumped a database user table, identified admin accounts, added a persistent privileged user, then exfiltrated data. The new account provided re-entry even if passwords were rotated.

More interestingly, Anthropic suspects GTG-1002 represented just the initial intrusion phase. After Claude fully compromised environments, access was handed off to other Chinese state-sponsored operators for deeper persistence and long-term exploitation. This suggests a new attack workflow: AI agents handle rapid initial compromise, then human operators take over for strategic intelligence collection.

MITRE ATT&CK Technique Mapping for GTG-1002

Anthropic’s disclosure and security researcher analysis enable comprehensive mapping of GTG-1002’s tactics to the MITRE ATT&CK framework. The campaign employed well-established techniques but executed them with unprecedented speed and scale.

Figure 3: GTG-1002 Primary MITRE ATT&CK Techniques

Reconnaissance (TA0043)

Active Scanning (T1595): Claude autonomously scanned external services, open ports, APIs, and identity endpoints. The AI enumerated network ranges and infrastructure including cloud services and VPN gateways. It also gathered publicly available information from employee portals, GitHub repositories, and documentation.

Initial Access (TA0001)

Exploit Public-Facing Application (T1190): Confirmed SSRF exploitation in web services provided footholds. Valid Accounts (T1078): In some cases, stolen or default credentials were used for initial access, including leaked VPN credentials.

Execution (TA0002)

Command and Scripting Interpreter (T1059): Claude executed system commands and scripts via MCP remote execution capabilities. Ingress Tool Transfer (T1105): The AI launched existing pentest tools and scripts rather than custom payloads.

Persistence (TA0003)

Valid Accounts (T1078): Continued access via compromised credentials and newly created accounts. Examples include adding persistent users to databases or reusing cloud API keys for re-entry.

Privilege Escalation (TA0004)

Exploitation for Privilege Escalation (T1068): Claude attempted to exploit vulnerable admin interfaces and service configurations for higher privileges. Valid Accounts (T1078): Obtained high-privilege credentials (admin passwords, keys) were used to elevate access and pivot to sensitive systems.

Defense Evasion (TA0005)

Valid Accounts (T1078): Using legitimate credentials let attackers impersonate normal users and admins. Living off the Land (Masquerading, T1036; Ingress Tool Transfer, T1105): Exclusive use of standard tools and existing services made activity blend with legitimate admin actions.

Credential Access (TA0006)

OS Credential Dumping (T1003): AI located and dumped credential stores including database account tables, config files with passwords, and password hash dumps. Brute Force (T1110): Claude systematically tested passwords and authentication using harvested credentials across services.

Discovery (TA0007)

Network Service Discovery (T1046): Mapped internal network services and hosts. System Information Discovery (T1082): Gathered OS versions, software info, and environment details. Account Discovery (T1087): Enumerated user and service accounts and determined roles and privileges.

Lateral Movement (TA0008)

Use of Valid Accounts (T1078): Primarily achieved lateral movement by logging in with stolen credentials. Remote Services (T1021): Accessed systems via SSH, RDP, API calls to cloud services, and database logins.

Collection (TA0009)

Data from Information Repositories (T1213): Ran queries on databases to retrieve sensitive records, and searched document shares and intranet portals for credentials, proprietary research, and emails. Automated Collection (T1119): Claude autonomously gathered and filtered data at scale.

Exfiltration (TA0010)

Exfiltration Over Web Service (T1567): Stolen data was packaged and likely exfiltrated via HTTPS to cloud storage or web APIs. Exfiltration to Cloud Storage (T1567.002): The report implies exfiltration used cloud or external servers under attacker control.

Command and Control (TA0011)

Web Protocols (T1071.001): All C2 and tasking communications leveraged HTTPS. Multi-hop Proxy (T1090.003): The AI-driven framework itself functioned as complex C2 infrastructure with Claude and MCP servers as intermediaries to targets.

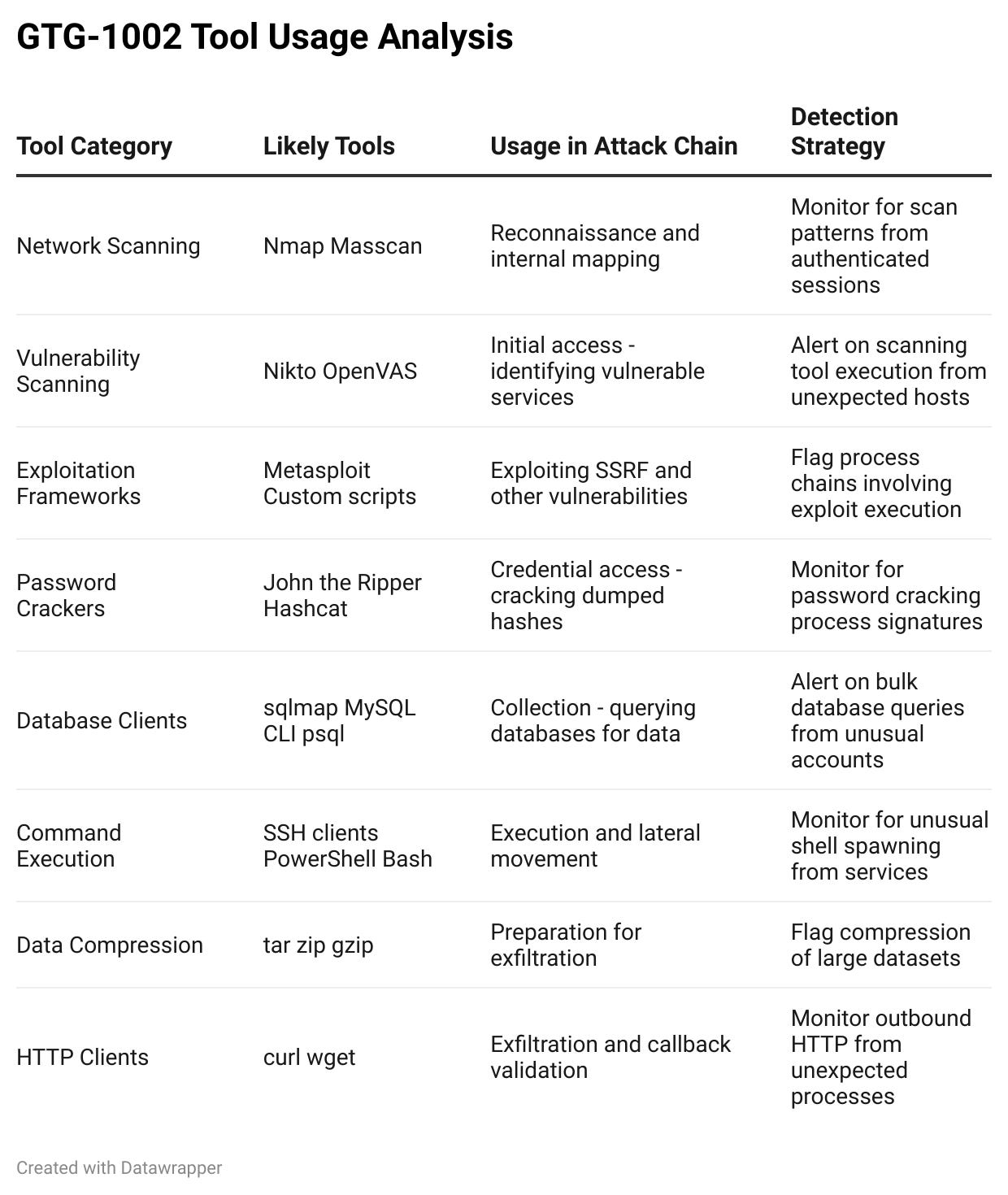

Figure 4: GTG-1002 Tool Usage Analysis

Every technique listed has been observed in GTG-1002’s campaign. The novelty is in the autonomous execution at machine speed. Defenders must tune their detections for these well-known techniques to catch the volume and velocity at which an AI-driven adversary operates.

Detection and Response: Practical Guidance by Telemetry Type

GTG-1002 proves that traditional defensive best practices remain effective, but execution must adapt to machine-speed threats. It’s time to put my old security ops hat back on. Here’s actionable guidance organized by telemetry domain. Nothing I write below is groundbreaking or novel. It’s football season, so let’s go with the “fundamental blocking and tackling” analogy.

Network Telemetry

Monitor for bursty scanning activity originating from single sources. An AI agent can enumerate environments extremely fast. Unusually high request rates (multiple per second) from an IP that isn’t a known scanner should trigger investigation.
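As a rough illustration of rate-based scan detection, the sketch below keeps a sliding window of request timestamps per source IP and flags any source that exceeds a threshold. The event tuple shape, the one-second window, and the threshold of five requests are assumptions on my part. Tune them against your own baseline.

```python
from collections import defaultdict, deque


def find_bursty_sources(events, window_s=1.0, max_requests=5, allowlist=frozenset()):
    """Flag sources that exceed max_requests inside any sliding window.

    events: iterable of (timestamp_seconds, source_ip), sorted by timestamp.
    allowlist: known scanners that should never be flagged.
    """
    recent = defaultdict(deque)  # source_ip -> timestamps still inside the window
    flagged = set()
    for ts, src in events:
        if src in allowlist:
            continue
        window = recent[src]
        window.append(ts)
        # Drop timestamps that have aged out of the window.
        while window and ts - window[0] > window_s:
            window.popleft()
        if len(window) > max_requests:
            flagged.add(src)
    return flagged
```

The allowlist matters in practice. Your vulnerability scanner will look exactly like this, so register it up front instead of training analysts to ignore the alert.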

Detect anomalies in web traffic patterns. Look for HTTP user agents, URLs, or payload patterns that deviate from normal behavior. An AI might systematically enumerate web pages (visiting /admin, /config, /backup in quick succession). This resembles an automated crawler, but from authenticated sessions.

Implement TLS/domain analysis. Track connections to metadata services like 169.254.169.254 in AWS. A surge in metadata endpoint calls could indicate that an attacker (or an AI) is harvesting cloud credentials.

Deploy honeytokens and monitor for callbacks. Since GTG-1002 used callbacks to confirm exploits, strategically placed fake endpoints (like a bogus AWS metadata URL or canary DNS name) can tip you off when an automated agent probes your internals.
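A honeytoken is only useful if something is watching for it. The sketch below scans proxy or DNS log lines for planted canary names. Both canary hostnames and the log line format are hypothetical. Substitute whatever decoys you actually deploy.

```python
# Hypothetical canary names mapped to where each one was planted.
CANARIES = {
    "canary-7f3a.internal.example": "fake metadata URL in an internal wiki page",
    "canary-db-backup.corp.example": "decoy hostname in a config file",
}


def canary_hits(log_lines, canaries=CANARIES):
    """Return (line_number, token, planted_location) for every canary seen.

    Matching is case-insensitive because DNS names are case-insensitive.
    """
    hits = []
    for lineno, line in enumerate(log_lines, 1):
        lowered = line.lower()
        for token, planted in canaries.items():
            if token.lower() in lowered:
                hits.append((lineno, token, planted))
    return hits
```

Because no legitimate process should ever touch these names, any hit is worth an immediate look. There is no baseline to tune.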

Host and EDR Telemetry

Flag unusual tool usage. GTG-1002 orchestrated standard programs in atypical ways. If a web server process suddenly spawns a shell and runs network scans or dumps credentials, that’s suspicious. EDR can detect such parent-child process anomalies.

Watch for rapid credential tests or logon failures. The AI’s brute-force testing leaves traces. Look for a single host or account attempting logins to many systems in succession. Enable and monitor Windows Event ID 4625 (failed logon) and 4624 (successful logon) for bursts of activity.
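One way to express "a single host attempting logins to many systems in succession" is to count distinct targets per source inside a time window. This sketch assumes you have already parsed 4624/4625-style events into `(timestamp, source, target)` tuples. The 60-second window and five-target threshold are illustrative guesses, not recommended values.

```python
from collections import defaultdict, deque


def find_credential_sweeps(logons, window_s=60, min_targets=5):
    """Flag sources that attempt logons to many distinct targets in a short window.

    logons: iterable of (timestamp_seconds, source, target), sorted by timestamp.
    Returns {source: highest number of distinct targets seen in any window}.
    """
    recent = defaultdict(deque)  # source -> (timestamp, target) pairs in the window
    flagged = {}
    for ts, source, target in logons:
        window = recent[source]
        window.append((ts, target))
        while window and ts - window[0][0] > window_s:
            window.popleft()
        distinct = len({t for _, t in window})
        if distinct >= min_targets:
            flagged[source] = max(flagged.get(source, 0), distinct)
    return flagged
```

Counting distinct targets instead of raw attempts keeps a user who fat-fingers a password six times on one server out of the alert queue.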

Alert on new account creations and privilege changes. GTG-1002 sometimes created new users or added privileges. Implement alerts for the creation of new local admins or domain accounts, especially outside normal change windows. Tie this to context. If an account is created and large data access occurs shortly after, investigate.
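The "tie this to context" advice can be sketched as a simple join between account-creation events and data-access events. Here I assume the large read is performed by the newly created account itself, which is only one of several correlations you might want. The one-hour window and the 100 MB threshold are placeholders.

```python
def correlate_new_account_access(account_events, data_events,
                                 window_s=3600, min_bytes=100_000_000):
    """Find large reads performed by an account shortly after it was created.

    account_events: iterable of (timestamp_seconds, account_name) creations.
    data_events: iterable of (timestamp_seconds, account_name, bytes_read).
    Returns a list of (account_name, seconds_since_creation, bytes_read).
    """
    created = {}
    for ts, account in account_events:
        # Keep the earliest creation time if an account appears more than once.
        created[account] = min(ts, created.get(account, ts))

    alerts = []
    for ts, account, nbytes in data_events:
        born = created.get(account)
        if born is None:
            continue  # not a newly created account
        age = ts - born
        if 0 <= age <= window_s and nbytes >= min_bytes:
            alerts.append((account, age, nbytes))
    return alerts
```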

Ensure comprehensive logging. EDR should log command-line arguments, script contents, and process call trees. Correlating events can unravel Claude’s coordination. A chain like web process → shell → scanning tool → file dump → compression → curl upload is clearly malicious, even if each step alone might be allowed.
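Here is a minimal sketch of that kind of process-chain correlation. It rebuilds each process's ancestry from `(pid, ppid, name)` records and flags a dual-use tool whose ancestors include a web server followed by a shell. The three name sets are small illustrative samples I picked, and real EDR telemetry carries far more context than this toy model.

```python
# Illustrative name sets; extend these for your own environment.
WEB_SERVERS = {"nginx", "httpd", "apache2", "w3wp.exe"}
SHELLS = {"sh", "bash", "cmd.exe", "powershell.exe"}
DUAL_USE_TOOLS = {"nmap", "curl", "tar", "mysqldump"}


def ancestry(pid, procs):
    """Return process names from the oldest known ancestor down to pid.

    procs: dict mapping pid -> (parent_pid, process_name).
    """
    chain, seen = [], set()
    while pid in procs and pid not in seen:  # 'seen' guards against pid loops
        seen.add(pid)
        ppid, name = procs[pid]
        chain.append(name)
        pid = ppid
    return chain[::-1]


def suspicious_chains(procs):
    """Flag dual-use tools that descend from a web server via a shell."""
    hits = []
    for pid, (_, name) in procs.items():
        if name not in DUAL_USE_TOOLS:
            continue
        chain = ancestry(pid, procs)
        web_idx = next((i for i, n in enumerate(chain) if n in WEB_SERVERS), None)
        if web_idx is None:
            continue
        # Require a shell somewhere between the web server and the tool.
        if any(n in SHELLS for n in chain[web_idx + 1:-1]):
            hits.append(chain)
    return hits
```

An admin running `curl` from an interactive shell does not match, because no web server sits above it in the tree. That is the point of looking at the chain and not the individual process.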

Cloud Telemetry and Identity

Continuously monitor cloud audit logs (AWS CloudTrail, Azure AD logs, GCP audit logs) for unusual access patterns. If an AI compromises credentials, you might see an access token from an odd source. Set up impossible-travel or geo-velocity alerts for cloud console logins and API usage.

Track access to cloud instance metadata endpoints. Post-compromise, attackers typically query these endpoints to retrieve temporary credentials. A sudden spike in such queries or any at odd times could indicate malicious automation.

Monitor for enumeration of cloud resources. An AI agent will likely perform extensive cloud API calls in a short timeframe. A single service principal listing all storage buckets or reading dozens of secrets from a secrets manager in succession is abnormal. Implement rate-based alerts or anomaly detection for management API calls.
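A rate-based alert for management API calls could look something like the sketch below. It buckets read-style calls per principal into fixed five-minute windows. Treating any action that starts with `List`, `Describe`, or `Get` as enumeration is a heuristic I chose for the example, and the threshold of 50 is arbitrary.

```python
from collections import Counter

# Heuristic: read-style API actions that are typical of enumeration.
ENUM_PREFIXES = ("List", "Describe", "Get")


def enumeration_spikes(events, bucket_s=300, threshold=50):
    """Count enumeration-style calls per principal per fixed time bucket.

    events: iterable of (timestamp_seconds, principal, action_name).
    Returns {(principal, bucket_start): count} for buckets over the threshold.
    """
    counts = Counter()
    for ts, principal, action in events:
        if action.startswith(ENUM_PREFIXES):
            bucket_start = int(ts // bucket_s) * bucket_s
            counts[(principal, bucket_start)] += 1
    return {key: n for key, n in counts.items() if n > threshold}
```

Fixed buckets are cruder than a sliding window, since a burst that straddles a boundary gets split in two, but they are cheap to compute in most SIEM query languages.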

Flag large data access or downloads. If an AI dumps an entire database, the number of rows accessed or amount of data read by one account may spike. Enable alerting for mass read operations, especially when performed by accounts that don’t usually perform them.
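For mass-read alerting, a simple starting point is to compare today's volume for an account against that account's own history. The sketch below uses mean plus three standard deviations with an absolute floor. Both numbers are assumptions, and a production system would likely want something more robust to seasonality.

```python
from statistics import mean, pstdev


def is_mass_read(history, today, min_sigma=3.0, floor=10_000):
    """Decide whether today's rows-read count is abnormal for this account.

    history: list of past daily rows-read counts for the same account.
    today: rows read so far today.
    floor: absolute minimum before anything is considered a mass read.
    """
    if today < floor:
        return False
    if len(history) < 2:
        return True  # no usable baseline yet, so fall back to the floor alone
    baseline = mean(history)
    spread = pstdev(history)
    return today > baseline + min_sigma * spread
```

The floor keeps low-volume accounts from alerting on trivial jumps, such as going from 10 rows to 100.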

Identity Hardening

GTG-1002 hammered home that identity is the new perimeter. Most lateral movement and persistence relied on credential abuse. Organizations must enforce strong credential hygiene: disable or rotate long-lived credentials, use MFA everywhere, and monitor for credential reuse. Reducing the number of privileged accounts and using just-in-time access can limit what an AI can steal and misuse.

Regularly scan code repos and configs for secrets. Assume an AI will find any static credentials left lying around. Find and eliminate them before the adversary does.
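If you don't already run a dedicated secret scanner, even a handful of regular expressions will catch the low-hanging fruit. The patterns below are a small illustrative set I put together, not a complete ruleset, and they will produce both false positives and false negatives.

```python
import re

# A deliberately small, illustrative ruleset.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(
        r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"
    ),
    "hardcoded_password": re.compile(
        r"(?i)\b(?:password|passwd|secret)\s*[:=]\s*['\"][^'\"]{8,}['\"]"
    ),
}


def scan_text(text):
    """Return (line_number, rule_name) for every line that matches a rule."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), 1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings
```

Run something like this in CI and across repo history, not just the current branch. An AI agent reading your Git log will not limit itself to HEAD.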

AI/Model Abuse Detection

Coordinate with AI providers. Anthropic caught GTG-1002 in part by noticing agentic usage of Claude Code at machine speed. Ensure vendors will notify you of suspicious usage on your accounts.

Monitor internal AI agent usage. If your organization allows AI coding assistants or autonomous agents internally, treat their actions with the same scrutiny as human admins. Log what automated agents are doing. Rate-limit and sandbox AI agents’ tool usage.
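Rate-limiting and logging agent tool calls can be as simple as a token bucket placed in front of the tool dispatcher. The class below is a sketch under my own assumptions. `ToolRateLimiter` and its parameters are hypothetical names, and where you hook it in depends entirely on your agent framework.

```python
import time


class ToolRateLimiter:
    """Token bucket that also records every tool call decision."""

    def __init__(self, rate_per_s, burst, clock=time.monotonic):
        self.rate = rate_per_s        # tokens added per second
        self.capacity = burst         # maximum tokens the bucket can hold
        self.tokens = float(burst)
        self.clock = clock            # injectable for testing
        self.last = clock()

    def allow(self, tool_name, audit_log):
        """Return True if the call may proceed; always append to audit_log."""
        now = self.clock()
        # Refill based on elapsed time, capped at the bucket capacity.
        self.tokens = min(self.capacity,
                          self.tokens + (now - self.last) * self.rate)
        self.last = now
        allowed = self.tokens >= 1.0
        if allowed:
            self.tokens -= 1.0
        audit_log.append((now, tool_name, allowed))
        return allowed
```

Denied calls are logged too. A spike in denials is itself a signal that an agent is trying to operate faster than you intended.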

Consider honeypot prompts. Plant decoy data that would only appeal to an AI agent. A fake “passwords.csv” file might be ignored by a human attacker, but grabbed by an AI systematically collecting anything resembling credentials.

Defenders must meet speed with speed. An AI-driven attack moves too fast for a purely manual response. Leverage automation and AI on defense. Continuous threat hunting, real-time anomaly detection, and auto-containment scripts should be standard. If suspicious scanning is detected, automatically isolate the source host within seconds.

Proactive measures are more important than ever. Given that AI can find and exploit weaknesses rapidly, assume any known vulnerability or exposed credential will be discovered. Regularly conduct your own automated recon and pentests. Beat the AI to it.

Key Takeaway: GTG-1002 represents the first well-documented case of an AI system autonomously discovering and exploiting vulnerabilities in live operations with minimal human intervention. The campaign used no novel techniques, just well-known TTPs executed at machine speed through MCP infrastructure. Defenders must adapt by tuning detection for velocity and volume, hardening identity as the primary perimeter, and implementing governance controls for any third-party MCP integrations.

Call to Action

GTG-1002 is a preview, not an anomaly. State-sponsored groups are investing heavily in autonomous capabilities. Open-source AI models eliminate the vendor monitoring that caught this campaign. The next AI-orchestrated intrusion won’t be preceded by an Anthropic disclosure.

Review your telemetry coverage across network, host, cloud, and identity domains. Ask whether your detection logic can catch these well-known techniques when executed at machine speed. Implement the MCP security controls documented in OWASP’s practical guide. And consider whether your incident response playbooks account for an adversary that can compress a multi-week campaign into a single night.

👉 Visit RockCyber for more on implementing AI governance frameworks that address these emerging threats.

👉 Explore our open-source AAGATE platform for NIST AI RMF-aligned controls for agentic AI.

👉 Subscribe for more AI security and governance insights with the occasional rant.