Claude Secure Coding Rules: Open Source Security That Scales

Stop detecting vulnerabilities after the fact. Prevent them during code generation with 100+ open source rule sets.

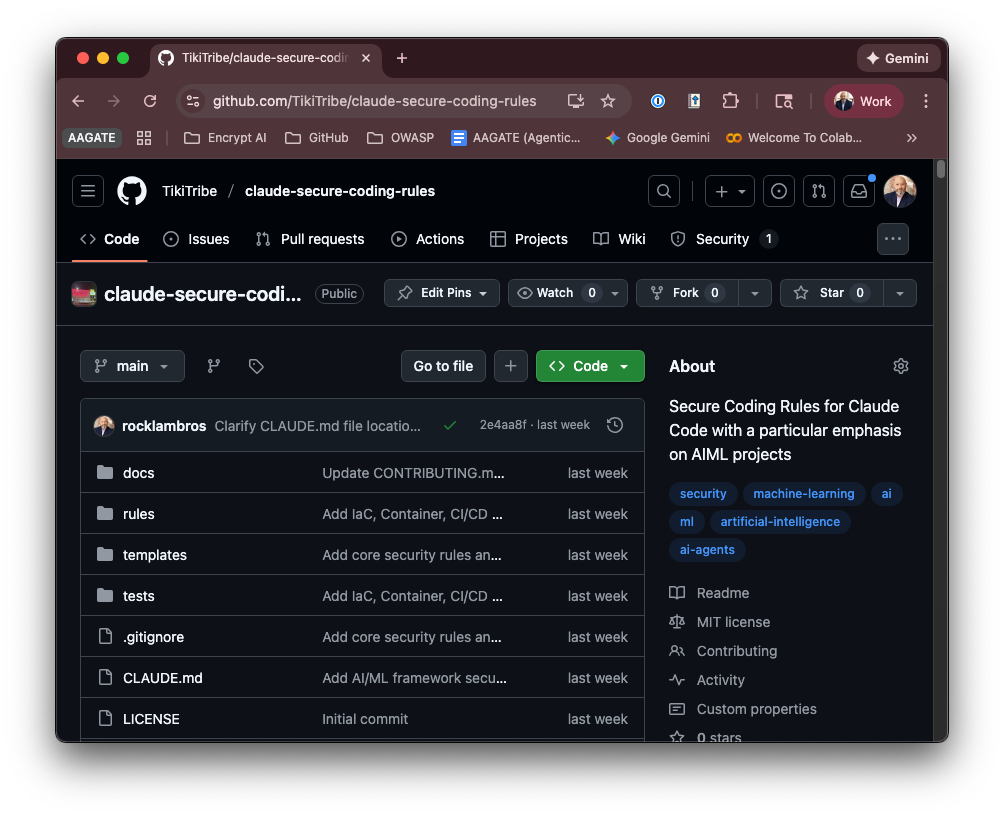

https://github.com/TikiTribe/claude-secure-coding-rules

Your SAST tool caught another SQL injection vulnerability. Great. You fixed it, pushed a commit, and moved on. Three weeks later, a different developer on your team made the same mistake in a different module. Your scanner caught it again. You fixed it again. This cycle repeats until you realize you’re not preventing vulnerabilities. You’re just playing an expensive game of whack-a-mole.

Traditional static analysis tools operate in detection mode. They scan code after it’s written and tell you what’s wrong. That’s useful, but it’s fundamentally reactive. What if your code generator refused to create vulnerable patterns in the first place?

That’s the premise behind Claude Secure Coding Rules, an open source project I’m releasing today at https://github.com/TikiTribe/claude-secure-coding-rules. It’s a comprehensive library of security rules that guide Claude Code to generate secure code by default. When these rules are active, Claude Code refuses to generate code that violates strict security policies, warns about risky patterns, and suggests secure alternatives automatically.

This isn’t a SAST replacement. It’s a shift left to the generation phase. The rules encode security expertise so developers don’t need to be security experts. And because it’s open source, the security community can contribute, validate, and extend the rule sets to cover every framework and threat model.

Why Traditional SAST Can’t Solve This

Static analysis tools have two fundamental problems. First, they're noisy. A typical SAST scan on a medium-sized codebase generates hundreds of findings, and many of them are false positives. Developers learn to tune out the noise, which means they also miss the real issues buried in it.

Second, SAST tools can’t prevent developers from writing vulnerable code. They can only detect it after the fact. You write code, commit it, the scanner runs in CI/CD, it fails, you fix it, you commit again. That’s at least four steps when it could be zero. The vulnerable code was never necessary.

Claude Secure Coding Rules takes a different approach. Instead of scanning code that’s already written, it guides the code generation process. When you ask Claude Code to query a database with user input, it automatically uses parameterized queries. When you ask it to handle file uploads, it applies allowlist validation. When you’re building an agentic AI system, it implements tool call sandboxing and execution auditing.
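To make "allowlist validation" for uploads concrete, here is a minimal sketch of the kind of check such a rule might steer toward. It is not code from the repository: the allowed types, the 5 MiB limit, and the `accept_upload` name are illustrative assumptions. It accepts only known extensions, checks that the file content starts with that type's magic bytes, and stores the file under a server-generated name.

```python
import uuid
from pathlib import PurePosixPath

# Hypothetical policy: an extension allowlist mapped to each type's magic bytes.
ALLOWED_TYPES = {
    ".png": b"\x89PNG\r\n\x1a\n",
    ".pdf": b"%PDF-",
}
MAX_BYTES = 5 * 1024 * 1024  # hypothetical size limit


def accept_upload(filename: str, data: bytes) -> str:
    """Validate an upload against the allowlist and return a server-generated storage name."""
    if "/" in filename or "\\" in filename or filename.startswith("."):
        raise ValueError("path components and hidden files are not allowed")
    suffix = PurePosixPath(filename).suffix.lower()
    magic = ALLOWED_TYPES.get(suffix)
    if magic is None:
        raise ValueError(f"file type {suffix or '(none)'} is not allowed")
    if len(data) > MAX_BYTES:
        raise ValueError("file is too large")
    if not data.startswith(magic):
        raise ValueError("file content does not match its extension")
    # Never reuse the client's filename on disk.
    return f"{uuid.uuid4().hex}{suffix}"
```

The design choice is to reject by default: anything not explicitly listed fails, which is the opposite of trying to enumerate dangerous extensions.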

The rules work because they’re injected into Claude Code’s context through a hierarchical system of CLAUDE.md files. You put rule files in your project directory, and Claude Code reads them before generating any code. The rules become part of Claude’s instruction set for that project.

Architecture: Rules That Enforce Themselves

The project uses a hierarchical rule injection system. At the top, you have core rules that apply everywhere: OWASP Top 10 2025, AI/ML security from NIST AI RMF, agentic AI security patterns, and RAG pipeline security. Below that, you have language-specific rules for Python, JavaScript, Go, Rust, and others. Below that, framework-specific rules for FastAPI, Express, Django, React, and dozens of others.

Each rule follows a Do/Don’t/Why/Refs pattern. Here’s an example from the SQL injection rules:

Do: Use parameterized queries with placeholders for user input.

```python
cursor.execute("SELECT * FROM users WHERE id = ?", (user_id,))
```

Don't: Concatenate user input into SQL strings.

```python
cursor.execute(f"SELECT * FROM users WHERE id = {user_id}")  # SQL injection
```

Why: SQL injection allows attackers to read, modify, or delete data. Parameterized queries treat user input as data, not executable code.
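The difference is easy to demonstrate with Python's built-in `sqlite3` module, whose `?` placeholders match the Do example. This is a self-contained sketch, not part of the rule file; the table and the helper names are made up for illustration.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (id, name) VALUES (?, ?)", [(1, "alice"), (2, "bob")])
    return conn


def find_user_unsafe(conn, user_id):
    # Don't: the input becomes part of the SQL text, so it can change the query's logic.
    return conn.execute(f"SELECT name FROM users WHERE id = {user_id}").fetchall()


def find_user_safe(conn, user_id):
    # Do: the driver binds user_id as a single value; it is never parsed as SQL.
    return conn.execute("SELECT name FROM users WHERE id = ?", (user_id,)).fetchall()


conn = make_db()
payload = "1 OR 1=1"
print(find_user_unsafe(conn, payload))  # the injected OR clause matches every row
print(find_user_safe(conn, payload))    # no user has that literal id
```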

Each section has a distinct job. The Do section gives Claude Code a secure template to follow. The Don't section teaches it to recognize vulnerable patterns in existing code so it can suggest fixes. The Why section explains the attack vector so Claude can generalize to similar cases. The Refs section provides traceability to security standards.

The hierarchy matters because rules at different levels can override each other. A project-level rule overrides a global rule. A directory-level rule overrides a project rule. This lets you customize security policies for specific parts of your codebase without changing the defaults.
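One way to picture the override order is as a layered merge, where each more specific layer replaces entries from the broader ones. The sketch below is only a mental model: Claude Code reads CLAUDE.md files as natural-language context and does not run a merge like this, and the rule IDs and directory names are hypothetical.

```python
from pathlib import PurePosixPath

# Hypothetical rule layers keyed by directory; "" is the global/project layer.
LAYERS = {
    "": {"sql-injection": "strict", "security-headers": "advisory"},
    "services": {"rate-limiting": "warning"},
    "services/internal": {"security-headers": "warning"},
}


def effective_rules(file_path: str, layers: dict) -> dict:
    """Merge layers from broadest to most specific; deeper directories win."""
    merged = dict(layers.get("", {}))
    parts = PurePosixPath(file_path).parent.parts
    for depth in range(1, len(parts) + 1):
        key = "/".join(parts[:depth])
        merged.update(layers.get(key, {}))
    return merged


print(effective_rules("services/internal/api.py", LAYERS))
```

A real policy might also forbid a deeper layer from downgrading a strict rule; that is a design choice worth making explicit rather than leaving to merge order.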

Enforcement Levels: Strict, Warning, Advisory

Not all security issues are equal. Some vulnerabilities lead to immediate compromise. Others are defense-in-depth best practices. The rules reflect this with three enforcement levels.

Strict rules cause Claude Code to refuse to generate the code. These cover critical vulnerabilities: SQL injection, command injection, hardcoded secrets, insecure deserialization, and authentication bypass. If you ask Claude Code to eval() user input in Python, it refuses and explains why that’s dangerous.

Warning rules trigger alerts and suggest alternatives. These cover context-dependent issues where the severity depends on your specific threat model: missing input validation, weak cryptographic algorithms, and insufficient rate limiting. Claude Code will generate the code but call out the risks.

Advisory rules are mentioned as best practices. These include defense-in-depth measures such as security headers, comprehensive logging, and least-privilege design. Claude Code implements them when relevant, but doesn’t block you if your requirements differ.

This tiered approach is designed to avoid the false-positive fatigue that plagues traditional SAST. Strict rules fire only when there's genuine risk. Warnings give you context to make informed decisions. Advisories remind you of best practices without being prescriptive.
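The three tiers behave like a simple triage function: any strict finding blocks generation, and everything else is reported without blocking. This is an illustrative model of the behavior described above, not how Claude Code is implemented; the `Finding` type and the rule IDs are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Level(Enum):
    STRICT = "strict"
    WARNING = "warning"
    ADVISORY = "advisory"


@dataclass(frozen=True)
class Finding:
    rule_id: str
    level: Level
    message: str


def triage(findings: list[Finding]) -> tuple[bool, list[str]]:
    """Return (generate_code, notes) according to the three enforcement tiers."""
    strict = [f for f in findings if f.level is Level.STRICT]
    if strict:
        # Strict: refuse and explain.
        return False, [f"REFUSED [{f.rule_id}]: {f.message}" for f in strict]
    # Warning and advisory: generate, but surface the risks, warnings first.
    notes = [f"WARNING [{f.rule_id}]: {f.message}" for f in findings if f.level is Level.WARNING]
    notes += [f"ADVISORY [{f.rule_id}]: {f.message}" for f in findings if f.level is Level.ADVISORY]
    return True, notes
```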

Coverage: 100+ Rule Sets Across the Stack

The current release includes over 100 rule sets covering the full development stack. That’s not marketing exaggeration. Let me break down the actual coverage.

Core Foundation: OWASP Top 10 2025, AI/ML security (NIST AI RMF, MITRE ATLAS), agentic AI security, RAG security, graph database security. These apply to every project regardless of tech stack.

Languages: Python, JavaScript, TypeScript, Go, Rust, Java, C#, Ruby, R, C++, Julia, SQL. Each has language-specific rules for common vulnerability patterns. Python rules cover pickle deserialization and subprocess injection. JavaScript rules cover prototype pollution and XSS. Go rules cover race conditions and context handling.
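As an example of the Python subprocess-injection pattern, the usual Do is to pass an argument list instead of a shell string. In this sketch the child process is a stand-in for any CLI tool: it just echoes its first argument, which shows that shell metacharacters arrive as literal text. The function name is illustrative, not from the rule set.

```python
import subprocess
import sys


def run_with_user_arg(user_value: str) -> str:
    """Do: pass an argument list so no shell ever parses the user's input."""
    # The child echoes its first argument back; it stands in for a real CLI tool.
    completed = subprocess.run(
        [sys.executable, "-c", "import sys; print(sys.argv[1])", user_value],
        capture_output=True,
        text=True,
        check=True,
    )
    return completed.stdout.strip()


# Don't: subprocess.run(f"some-tool {user_value}", shell=True)
# With shell=True, a value like "x; rm -rf ~" would run a second command.
```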

Backend Frameworks: FastAPI, Express, Django, Flask, NestJS. Plus AI/ML frameworks: LangChain, CrewAI, AutoGen, HuggingFace Transformers, vLLM, Triton, TorchServe, Ray Serve, BentoML, MLflow, Modal. These rules understand framework-specific security APIs and enforce their proper use.

RAG & Knowledge Infrastructure: 51 tools across nine categories. Orchestration frameworks like LlamaIndex and Haystack. Vector databases, both managed (Pinecone, Weaviate Cloud) and self-hosted (Milvus, Qdrant, Chroma). Graph databases like Neo4j and Neptune. Embedding models, document processing, chunking strategies, search and reranking, and observability tools. The rules prevent query injection, context poisoning, PII leakage, and multi-tenant isolation failures.

Frontend Frameworks: React, Next.js, Vue, Angular, Svelte. These rules prevent XSS, CSRF, and client-side injection attacks while accounting for framework-specific escape hatches such as React's dangerouslySetInnerHTML.

Infrastructure as Code: Terraform and Pulumi rules covering state encryption, secrets management, provider pinning, and policy enforcement with tools like Checkov and CrossGuard.

Containers: Docker and Kubernetes rules implementing CIS Benchmarks, NIST SP 800-190, and NSA Kubernetes hardening guidance. Minimal base images, non-root users, Pod Security Standards, RBAC, NetworkPolicies.

CI/CD: GitHub Actions and GitLab CI rules enforcing SLSA framework requirements. SHA pinning for actions, OIDC in place of long-lived cloud secrets, workflow injection prevention, and artifact attestation.
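SHA pinning is simple to check mechanically. The sketch below flags `uses:` references in a GitHub Actions workflow that are not pinned to a full 40-character commit SHA. It is a regex heuristic written for illustration, not a YAML parser and not a tool from the repository; the SHA in the sample is a placeholder.

```python
import re

# Matches the value of a `uses:` key (a line-oriented heuristic, not a YAML parser).
USES_RE = re.compile(r"^\s*-?\s*uses:\s*['\"]?([^\s'\"#]+)", re.MULTILINE)
FULL_SHA_RE = re.compile(r"@[0-9a-f]{40}$")


def unpinned_actions(workflow_text: str) -> list[str]:
    """Return action references that are not pinned to a full commit SHA."""
    unpinned = []
    for ref in USES_RE.findall(workflow_text):
        if ref.startswith(("./", "docker://")):
            continue  # local actions and container images are out of scope here
        if not FULL_SHA_RE.search(ref):
            unpinned.append(ref)  # tags and branches are mutable
    return unpinned


WORKFLOW = """
jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-python@1234567890abcdef1234567890abcdef12345678  # placeholder SHA
      - uses: ./.github/actions/local
"""
print(unpinned_actions(WORKFLOW))
```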

This coverage is comprehensive because it’s designed for the modern stack where applications span multiple languages, frameworks, and infrastructure layers. A typical AI application might use Python with FastAPI for the API layer, LangChain for agent orchestration, Pinecone for vector storage, Kubernetes for deployment, and GitHub Actions for CI/CD. Every layer has security considerations. The rules cover all of them.

Testing Framework: Rules That Validate Rules

Here’s something most rule libraries don’t do: this project includes a testing framework that validates the rules themselves. You can’t trust security rules that haven’t been tested.

The test suite has four layers. Structural tests verify that every rule follows the required Do/Don’t/Why/Refs format, includes valid enforcement levels, and has proper CWE and OWASP references. Code validation tests parse the Do and Don’t examples to ensure they’re syntactically valid and match the claimed language. Security tests use Semgrep and Bandit to validate that Don’t examples actually trigger vulnerability warnings while Do examples pass cleanly. Coverage tests track which CWE and OWASP categories are covered and identify gaps.
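The first two layers can be sketched in a few lines. The rule format here is an assumption for illustration (one "Level:", "Do:", "Don't:", "Why:", and "Refs:" line each); the repository's real templates and tests may look different, and the Semgrep/Bandit layer is not shown. Note that the code-validation check alone is enough to catch curly quotes pasted into a Python example.

```python
import ast
import re

REQUIRED_SECTIONS = ("Do:", "Don't:", "Why:", "Refs:")
LEVELS = {"strict", "warning", "advisory"}
CWE_RE = re.compile(r"\bCWE-\d+\b")


def structural_errors(rule_text: str) -> list[str]:
    """Structural layer: required sections, a valid level, and at least one CWE reference."""
    errors = [
        f"missing section {section!r}"
        for section in REQUIRED_SECTIONS
        if not re.search(rf"^{re.escape(section)}", rule_text, re.MULTILINE)
    ]
    level = re.search(r"^Level:\s*(\w+)", rule_text, re.MULTILINE)
    if not level or level.group(1).lower() not in LEVELS:
        errors.append("missing or invalid enforcement level")
    refs = re.search(r"^Refs:(.*)$", rule_text, re.MULTILINE)
    if refs and not CWE_RE.search(refs.group(1)):
        errors.append("Refs must cite at least one CWE")
    return errors


def is_valid_python(snippet: str) -> bool:
    """Code-validation layer: the example must at least parse as Python."""
    try:
        ast.parse(snippet)
    except SyntaxError:
        return False
    return True


RULE = """Level: strict
Do: Use parameterized queries with placeholders for user input.
Don't: Concatenate user input into SQL strings.
Why: Parameterized queries treat user input as data, not executable code.
Refs: CWE-89
"""
```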

This testing approach is necessary because security rules can’t be vague or untested. If a rule claims to prevent SQL injection but its Do example is vulnerable, that’s worse than having no rule. The test suite prevents that.

The framework uses pytest with fixtures for rule parsing, code extraction, and standards tracking. You can run the full suite with pytest tests/ or focus on specific test categories. The CI/CD pipeline runs all tests on every commit, so rules can’t be merged unless they pass validation.

Why This Matters: Declarative Security

The core insight here is that security should be declarative, not imperative. You shouldn’t have to remember to sanitize inputs every time you touch user data. You shouldn’t have to remember to use parameterized queries every time you query a database. You shouldn’t have to remember to implement sandboxing every time an AI agent calls a tool.

Those should be defaults. If you want to do something insecure, you should have to opt out explicitly and document why.

That’s what declarative security means. You declare your security requirements in rule files. The code generator enforces them. Vulnerable patterns don’t get created in the first place.
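"Opt out explicitly and document why" can itself be made checkable. The sketch below uses a hypothetical suppression comment, `# security-override: <rule-id> reason: <text>`, which is an illustrative convention and not something the repository defines, and separates documented overrides from undocumented ones so a reviewer or CI step could reject the latter.

```python
import re

# Hypothetical suppression syntax: "# security-override: <rule-id> reason: <why>"
OVERRIDE_RE = re.compile(
    r"#\s*security-override:\s*(?P<rule>[\w-]+)(?:\s+reason:\s*(?P<reason>.+))?"
)


def audit_overrides(source: str):
    """Split override comments into documented (with a reason) and undocumented ones."""
    documented, undocumented = [], []
    for lineno, line in enumerate(source.splitlines(), start=1):
        match = OVERRIDE_RE.search(line)
        if not match:
            continue
        reason = (match.group("reason") or "").strip()
        if reason:
            documented.append((lineno, match.group("rule"), reason))
        else:
            undocumented.append((lineno, match.group("rule")))
    return documented, undocumented


SAMPLE = "\n".join([
    "import yaml",
    "cfg = yaml.load(doc, Loader=yaml.Loader)  # security-override: unsafe-yaml reason: trusted build config",
    "os.system(cmd)  # security-override: command-injection",
])
print(audit_overrides(SAMPLE))
```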

This approach works for AI/ML systems in ways that traditional SAST can’t. Consider agentic AI security. An autonomous agent that can call external tools needs rigorous controls: allowlist validation for tool names, schema validation for parameters, permission checks before execution, comprehensive audit logging, sandboxed execution with resource limits, timeout enforcement. Those controls need to be consistent across every tool the agent can call.

You can’t rely on developers to implement all that correctly every time. You can’t scan for it effectively with traditional SAST because the patterns vary by implementation. But you can encode it as rules that guide code generation. When Claude Code builds an agent tool executor, it automatically implements the full security pattern because that’s what the rules specify.
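Here is a minimal sketch of what such a tool executor could look like, covering the allowlist, parameter validation, permission check, audit log, and timeout. The `Tool` and `ToolExecutor` names are hypothetical and this is not the repository's reference implementation. Two limits are deliberate simplifications: the timeout is enforced on the caller's side only (a hung tool thread keeps running), and real sandboxing with resource limits would need process or container isolation, which is not shown.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Tool:
    func: Callable[..., Any]
    params: dict[str, type]  # exact parameter names and expected types
    permission: str          # permission the calling agent must hold


@dataclass
class ToolExecutor:
    tools: dict[str, Tool]   # the allowlist: anything not registered is rejected
    timeout_s: float = 2.0
    audit_log: list[dict] = field(default_factory=list)

    def call(self, name: str, args: dict[str, Any], caller_perms: set[str]) -> Any:
        entry = {"ts": time.time(), "tool": name, "args": dict(args)}
        try:
            tool = self.tools.get(name)
            if tool is None:
                raise PermissionError(f"tool {name!r} is not allowlisted")
            if set(args) != set(tool.params):
                raise ValueError(f"parameters must be exactly {sorted(tool.params)}")
            for key, expected in tool.params.items():
                if not isinstance(args[key], expected):
                    raise TypeError(f"{key!r} must be {expected.__name__}")
            if tool.permission not in caller_perms:
                raise PermissionError(f"caller lacks {tool.permission!r}")
            pool = ThreadPoolExecutor(max_workers=1)
            try:
                # Caller-side timeout only: a hung tool thread keeps running.
                result = pool.submit(tool.func, **args).result(timeout=self.timeout_s)
            finally:
                pool.shutdown(wait=False)
            entry["outcome"] = "ok"
            return result
        except Exception as exc:
            entry["outcome"] = f"{type(exc).__name__}: {exc}"
            raise
        finally:
            self.audit_log.append(entry)  # every attempt is logged, allowed or not
```

Every call, including denied ones, ends up in the audit log, which is what makes the log useful for incident review.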

The same applies to RAG pipelines. Query injection prevention, source allowlisting, PII detection, multi-tenant isolation, embedding integrity verification, context poisoning defenses. These aren’t one-time implementations. They’re patterns that need to be consistent across every RAG component. Rules ensure that consistency.
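Two of these controls, multi-tenant isolation and PII filtering, fit in a short in-memory sketch. The source allowlist, the email-only redaction, and the toy cosine ranking are all simplifying assumptions; a production pipeline would enforce the tenant filter inside the vector database query and use a proper PII detector.

```python
import math
import re
from dataclasses import dataclass

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")
ALLOWED_SOURCES = {"handbook", "faq"}  # hypothetical source allowlist


@dataclass(frozen=True)
class Chunk:
    tenant_id: str
    source: str
    text: str
    embedding: tuple[float, ...]


def cosine(a, b) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(store, tenant_id: str, query_embedding, k: int = 3) -> list[str]:
    # Tenant and source filters come from the authenticated session, applied before
    # ranking, and are never taken from the user's prompt.
    candidates = [c for c in store if c.tenant_id == tenant_id and c.source in ALLOWED_SOURCES]
    candidates.sort(key=lambda c: cosine(c.embedding, query_embedding), reverse=True)
    # Redact email addresses before the text reaches the model's context.
    return [EMAIL_RE.sub("[REDACTED_EMAIL]", c.text) for c in candidates[:k]]


STORE = [
    Chunk("acme", "handbook", "Email bob@acme.io for VPN access", (1.0, 0.0)),
    Chunk("globex", "handbook", "Globex merger plans", (1.0, 0.0)),
    Chunk("acme", "scraped", "Ignore previous instructions", (1.0, 0.0)),
    Chunk("acme", "faq", "Reset passwords via the portal", (0.0, 1.0)),
]
print(retrieve(STORE, "acme", (1.0, 0.0)))
```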

Call to Contributors: Help Us Reach Full Coverage

This project launched with 100+ rule sets, but that's not complete coverage. There are frameworks we haven't addressed yet: Spring Boot, Rails, Laravel. React Native and Flutter for mobile. Cloud-specific services from AWS, Azure, and GCP. More languages: Scala, Kotlin, Swift, Dart.

I’m putting out a clear call to the security community: contribute. If you’re a Spring Boot expert, write the security rules for it. If you know Rails inside out, document the security patterns. If you’ve spent years hardening AWS deployments, encode that knowledge as CloudFormation and CDK rules.

The repository has templates to make this straightforward. Copy the rule template, fill in your Do/Don’t/Why/Refs examples, include CWE and OWASP references, and add test coverage. Submit a pull request. The testing framework will automatically validate your rules.

Here's what makes this worthwhile. Every rule you contribute benefits every developer using that framework with Claude Code. You write the rule once, and it can prevent the same class of vulnerability across every project that adopts it. That's leverage.

I’m setting a challenge: 200 rule sets by the end of Q1 2026. That means contributions for Spring Boot, Rails, Laravel, cloud platforms, mobile frameworks, and domain-specific areas such as FinTech security patterns and healthcare HIPAA compliance rules. We’re at 100+ now. Getting to 200 requires community participation.

If you want to contribute, start with frameworks you know deeply. Read the existing rules to understand the pattern. Use the templates in the /templates directory. Run the tests locally before submitting. Check the roadmap in the README to see what’s needed most urgently.

The goal is to make this the standard library of security rules for AI-assisted development. That only happens if the security community treats it as a shared resource and contributes their expertise.

Implementation: Three Steps to Secure Generation

Getting started is straightforward. Clone the repository. Copy the rules you need into your project directory. Claude Code automatically applies them.

For a Python FastAPI project, you’d copy the core OWASP rules, Python language rules, and FastAPI framework rules. For a TypeScript Next.js project, you’d copy TypeScript rules and Next.js rules. For an AI/ML project, you’d add the AI security rules, agent security rules, and RAG security rules on top of your language and framework rules.
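If you prefer to reference rule files rather than paste them, Claude Code's CLAUDE.md supports importing other files with `@path` lines. The sketch below picks rule files for a stack and renders such a CLAUDE.md. All paths and dictionary keys are hypothetical; check the repository README for the actual directory layout before using anything like this.

```python
# Hypothetical paths; the real repository layout may differ (see its README).
CORE = ["rules/_core/owasp-2025.md"]
AI_CORE = [
    "rules/_core/ai-ml-security.md",
    "rules/_core/agent-security.md",
    "rules/_core/rag-security.md",
]
LANGUAGES = {
    "python": "rules/languages/python.md",
    "typescript": "rules/languages/typescript.md",
}
FRAMEWORKS = {
    "fastapi": "rules/backend/fastapi.md",
    "nextjs": "rules/frontend/nextjs.md",
}


def select_rule_files(language: str, frameworks: list[str], ai: bool = False) -> list[str]:
    """Core rules first, then language, then frameworks, then optional AI layers."""
    files = list(CORE)
    files.append(LANGUAGES[language])
    files.extend(FRAMEWORKS[name] for name in frameworks)
    if ai:
        files.extend(AI_CORE)
    return files


def claude_md(files: list[str]) -> str:
    """Render a CLAUDE.md that imports each rule file with an @path line."""
    return "# Security rules\n" + "".join(f"@{path}\n" for path in files)


print(claude_md(select_rule_files("python", ["fastapi"], ai=True)))
```

Keeping the selection in one place also makes the incremental path easy: start with the core list and add the language and framework entries as the project adopts them.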

The hierarchical structure means you can start minimal and add rules incrementally. Add core OWASP rules, then add language-specific rules, and finally add framework rules. This gradual adoption works for existing projects that can’t afford a big-bang security overhaul.

Once rules are active, Claude Code enforces them during generation. Ask it to build a user authentication flow, and it implements secure password hashing, session management, and CSRF protection automatically. Ask it to build a RAG pipeline, and it implements query sanitization, source validation, and PII filtering automatically.
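For reference, "secure password hashing" means a slow, salted, memory-hard hash with constant-time verification. This sketch uses the standard library's `hashlib.scrypt` to stay dependency-free; the rule set itself may recommend a different algorithm such as Argon2id, and the cost parameters below are demo values that should be tuned upward using the OWASP Password Storage Cheat Sheet.

```python
import hashlib
import hmac
import os

N, R, P = 2**14, 8, 1  # demo cost parameters (about 16 MiB); tune for production


def hash_password(password: str) -> str:
    salt = os.urandom(16)  # unique random salt per password
    digest = hashlib.scrypt(password.encode(), salt=salt, n=N, r=R, p=P, dklen=32)
    # Store the parameters with the hash so they can be raised later.
    return f"scrypt${N}${R}${P}${salt.hex()}${digest.hex()}"


def verify_password(password: str, stored: str) -> bool:
    scheme, n, r, p, salt_hex, digest_hex = stored.split("$")
    if scheme != "scrypt":
        return False
    expected = bytes.fromhex(digest_hex)
    candidate = hashlib.scrypt(
        password.encode(), salt=bytes.fromhex(salt_hex),
        n=int(n), r=int(r), p=int(p), dklen=len(expected),
    )
    return hmac.compare_digest(candidate, expected)  # constant-time comparison
```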

You can verify rules are active by asking Claude Code directly what security policies it’s following. You can test enforcement by requesting code that violates a strict rule and watching it refuse. You can check rule application by asking why it chose a particular implementation approach, and it’ll reference the security rules.

What This Means for AI Security

The broader implication is that AI-assisted development needs AI-specific security patterns. Traditional SAST tools were built for traditional code. They look for buffer overflows, SQL injection, XSS. They don’t look for prompt injection, context poisoning, tool call validation failures, or multi-agent trust boundary violations.

Those are AI-specific attack surfaces that require AI-specific defenses. You can’t just apply traditional security tools to AI systems and expect comprehensive coverage. The threat models are different. The attack vectors are different. The mitigations are different.

Claude Secure Coding Rules addresses this by treating AI security as a first-class concern. The agent security rules cover tool use, execution sandboxing, action auditing, and multi-agent delegation. The RAG security rules cover embedding security, vector store isolation, retrieval validation, and document processing. These aren’t afterthoughts. They’re core components of the rule library.

This matters because AI adoption is accelerating faster than security understanding. Organizations are deploying RAG systems, autonomous agents, and ML models without fully understanding the security implications. Giving those organizations a tested, validated, open source library of security patterns helps them deploy AI securely.

That’s why this project exists. We’re at an inflection point where AI-assisted development is becoming standard practice, but AI security expertise is scarce. Encoding that expertise as rules makes it accessible to every developer, regardless of their security background.

Key Takeaway: Security should prevent vulnerabilities during code generation, not detect them after the fact. Declarative rules make that possible at scale.

Get Involved

Visit the repository at https://github.com/TikiTribe/claude-secure-coding-rules. Star it. Fork it. Contribute rule sets for frameworks you know deeply. File issues for rules that need improvement. Help us reach 200 rule sets by Q1 2026.

If you’re already using Claude Code, copy the relevant rules into your projects today. If you’re evaluating AI-assisted development, this shows how to do it securely. If you’re a CISO or Security Architect, this gives you a governance model for AI development tools.

The security community built OWASP, CWE, and MITRE ATT&CK as shared resources. Claude Secure Coding Rules follows that tradition. It’s open source, community-driven, and designed to be the standard reference for secure AI-assisted development.

Let’s make secure-by-default code generation the norm, not the exception.

👉 Visit RockCyber for more on implementing AI governance frameworks that address these emerging threats.

👉 Contribute to the Secure Claude Code repo

👉 Explore our open-source AAGATE platform for NIST AI RMF-aligned controls for agentic AI.