Behold the Zerg! Parallel Claude Code Orchestration for the Swarm

Spawn workers. Ship code. Skip the chaos.

Every major AI coding assistant got pwned last year. GitHub Copilot. Cursor. Windsurf. Claude Code. JetBrains Junie. All of them.

The IDEsaster disclosure in December 2025 documented 30+ vulnerabilities across the entire ecosystem. 100% of tested AI IDEs were vulnerable to prompt injection attacks that chain through legitimate IDE features to achieve remote code execution and data exfiltration. Then came the MCP breaches. Tool poisoning attacks through the Model Context Protocol let malicious servers exfiltrate entire WhatsApp histories. The GitHub MCP server got hijacked through a poisoned public issue that leaked private repository contents.

I watched this unfold while building parallel Claude Code infrastructure for my own work. The performance benefits of running multiple agents simultaneously were obvious. The security implications were terrifying.

Claude Code changed how I build software. Hell, it lets me build software at all, since I don’t have a heavy software development background. One instance handles tasks that used to take me hours, but you can’t run two of them at once. Not really.

Workarounds exist. Git worktrees. Multiple terminals. Manual coordination. They all share the same fatal flaw. You become the orchestrator instead of the engineer. You’re babysitting AI agents instead of shipping code.

I got tired of it. Tired of copy-pasting context between sessions. Tired of watching one agent sit idle while another churned through a task I could’ve parallelized. Tired of evaluating orchestration tools that optimized for speed and autonomy while treating security as an optional configuration. Phase-two stuff.

So I built Zerg. Parallel Claude Code orchestration with security, context engineering, and crash recovery baked in from day one. Not bolted on. Not “coming in phase two.” Built in.

Today it’s open source. Here’s what it does, how the architecture works, and what you should know before you touch it.

The Problem Nobody Else Solved

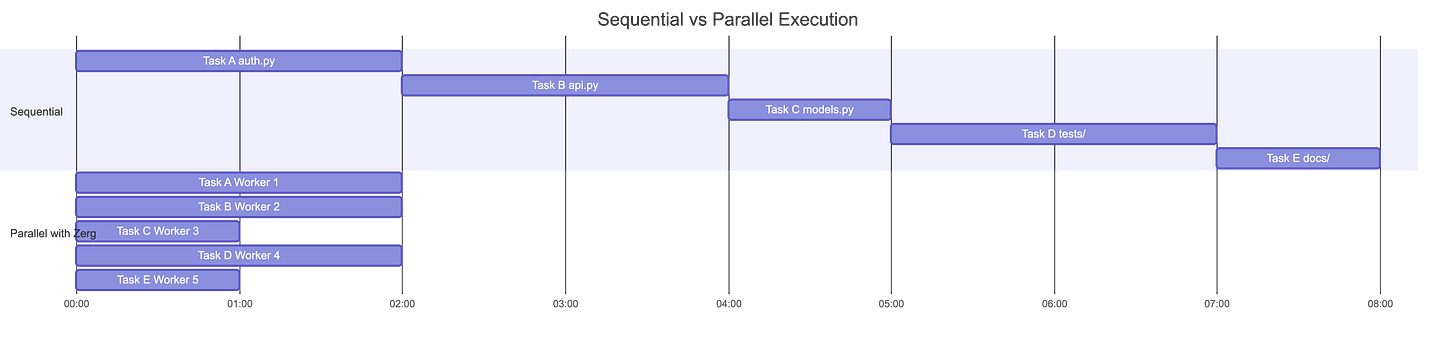

The biggest limitation of Claude Code is embarrassingly simple: it handles one task at a time, or you risk conflicts and race conditions. While one agent refactors authentication, you wait. It can’t write tests in parallel. It can’t document changes while it codes. You sit there watching a spinner like you’re on dial-up.

This isn’t a bug. Claude Code is a CLI tool. It does one thing well. But one thing isn’t enough anymore. There are many tools out there that allow you to parallelize tasks, but none take a security-first, context engineering mindset.

Zerg does.

The community noticed. A feature request hit Claude Code’s GitHub repo in August 2025 describing exactly this pain: developers manually create worktrees, navigate between directories, and manage multiple terminal windows. The request proposed native orchestration. That feature shipped only a few days ago, too soon and too “beta” for me to integrate into Zerg before release.

So what did everyone do? They built workarounds. Simon Willison wrote about running multiple instances across directories. Someone documented orchestrating 10+ Claude instances with custom Python and Redis queues. These solutions work, but they’re duct tape and prayer. You’re building coordination infrastructure that should exist in the tool itself.

The agentic AI industry is obsessed with autonomy. More autonomy. Agents that think for themselves. Agents that chain tools together without human approval.

Know what they should be obsessed with? Predictability. Recovery. Security. What happens when one of your five parallel agents crashes at 2 AM? How do you resume without losing three hours of work? How do you prevent your context window from bloating until your $200 API bill becomes $2,000? And after IDEsaster proved every AI IDE can be weaponized through prompt injection, how do you isolate workers so a compromised agent can’t poison the others?

What Zerg Does That Nothing Else Does

Let me be clear about what Zerg isn’t. It’s not LangChain. It’s not CrewAI. It’s not AutoGen. It’s not trying to be a general-purpose agentic framework for every use case under the sun.

Zerg does one thing. It coordinates multiple Claude Code instances running in parallel with security isolation baked in. It does this with four capabilities I couldn’t find anywhere else combined into one system.

Spec-Driven Execution

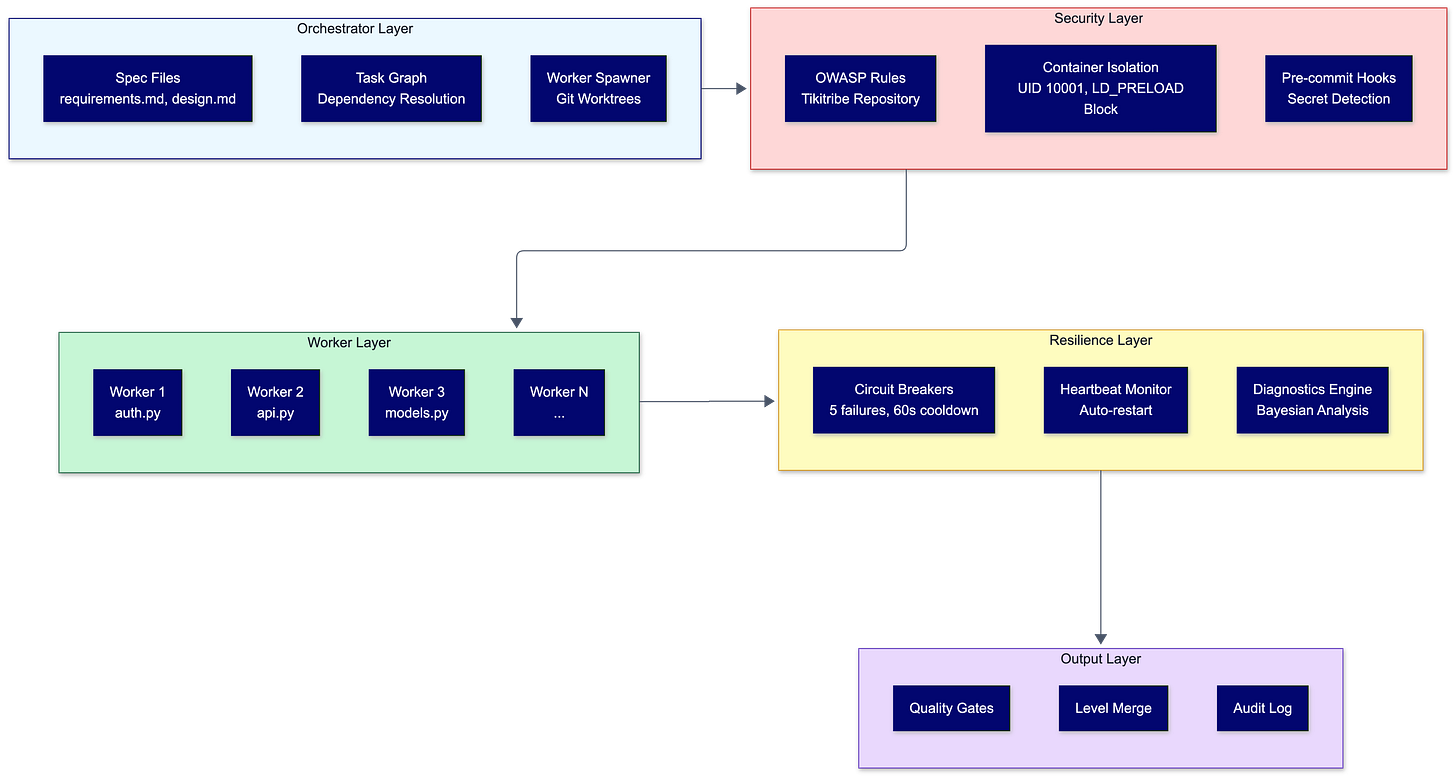

Every worker reads from shared files: requirements.md, design.md, and task-graph.json. Workers are stateless. If one crashes, another picks up the task. No context lost. Why? Because context lives in the filesystem, not in conversation history that evaporates when a process dies.

The task graph defines dependencies and exclusive file ownership. Two workers never touch the same file in the same execution level. Merge conflicts within levels become structurally impossible. Not “unlikely.” Not “rare.” Impossible.
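To make the ownership rule concrete, here is a minimal sketch of how a task graph could be checked before any worker starts. The graph shape below (`tasks`, `level`, `files`, `depends_on`) and the function name are assumptions for illustration; the real task-graph.json schema may look different.

```python
from collections import defaultdict

# Hypothetical task-graph shape; the real task-graph.json schema may differ.
TASK_GRAPH = {
    "tasks": [
        {"id": "auth-refactor", "level": 1, "files": ["src/auth.py"], "depends_on": []},
        {"id": "auth-tests", "level": 2, "files": ["tests/test_auth.py"], "depends_on": ["auth-refactor"]},
        {"id": "auth-docs", "level": 2, "files": ["docs/auth.md"], "depends_on": ["auth-refactor"]},
    ]
}


def find_ownership_conflicts(graph):
    """Return (level, file, task_ids) for every file claimed by 2+ tasks in one level."""
    owners = defaultdict(list)
    for task in graph["tasks"]:
        for path in task["files"]:
            owners[(task["level"], path)].append(task["id"])
    return [
        (level, path, ids)
        for (level, path), ids in sorted(owners.items())
        if len(ids) > 1
    ]


# An empty list means no two tasks share a file within the same level.
print(find_ownership_conflicts(TASK_GRAPH))
```

If the check returns anything, the graph gets fixed before execution. That is the whole point: the conflict is caught at design time rather than at merge time.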

This sounds obvious until you look at what everyone else built. Most parallel agent solutions let agents race each other, then deal with conflicts at merge time. That’s backwards. Zerg eliminates the conflict at the design phase because I got tired of debugging merge disasters at midnight.

Context Engineering That Actually Works

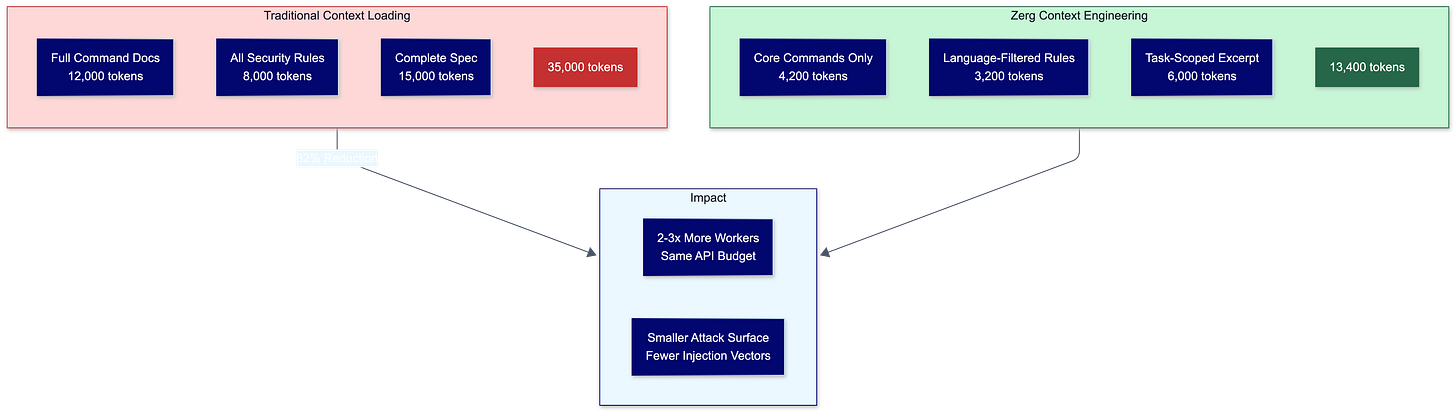

Zerg achieves 30-50% token reduction per worker through three mechanisms that nobody else bothered to implement systematically.

First, command splitting. Nine large commands are split into core documentation (about 30% of tokens) and detail files (about 70%). Workers load only what they need. A worker doing Python refactoring doesn’t need the full Kubernetes deployment guide sitting in its context window.

Second, security rule filtering. Instead of loading every security rule for every language, workers load only rules matching the file extensions in their task. A Python-only task doesn’t get JavaScript security guidance that would consume tokens for no reason.
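A rough sketch of extension-based filtering looks like this. The rule file names, the extension mapping, and the idea of an always-loaded core rule set are my assumptions for the example, not the actual layout of the rules repository.

```python
from pathlib import PurePosixPath

# Hypothetical mapping from file extension to rule files.
RULES_BY_EXTENSION = {
    ".py": ["rules/python.md"],
    ".js": ["rules/javascript.md"],
    ".ts": ["rules/javascript.md", "rules/typescript.md"],
    ".go": ["rules/go.md"],
}
# Assumed language-agnostic baseline that every worker receives.
ALWAYS_LOADED = ["rules/core.md"]


def select_rules(task_files):
    """Return only the rule files relevant to the extensions in this task."""
    selected = list(ALWAYS_LOADED)
    for path in task_files:
        ext = PurePosixPath(path).suffix.lower()
        for rule in RULES_BY_EXTENSION.get(ext, []):
            if rule not in selected:
                selected.append(rule)
    return selected


print(select_rules(["src/auth.py", "tests/test_auth.py"]))
```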

Third, task-scoped context. Each worker gets spec excerpts plus dependency context within a 4,000-token budget by default. Not the whole spec. Not everything that might be relevant. Just what’s needed for this task.
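Here is one way the budget could be enforced, as a sketch only. The priority scheme and the four-characters-per-token estimate are assumptions; a real implementation would use an actual tokenizer and its own ranking of excerpts.

```python
def estimate_tokens(text):
    # Rough heuristic (about 4 characters per token); a real tokenizer would differ.
    return max(1, len(text) // 4)


def build_task_context(excerpts, budget=4000):
    """Pick excerpts in priority order until the token budget is spent.

    excerpts: list of (priority, text); a lower number means more important.
    Returns (chosen_texts, tokens_used).
    """
    chosen, used = [], 0
    for _, text in sorted(excerpts, key=lambda item: item[0]):
        cost = estimate_tokens(text)
        if used + cost > budget:
            continue  # skip anything that would blow the budget
        chosen.append(text)
        used += cost
    return chosen, used
```

Anything that does not fit is dropped rather than truncated, so a worker never receives half of a requirement.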

Token reduction isn’t just cost optimization. It’s attack surface reduction. Every token in a worker’s context window represents potential instruction injection. IDEsaster attacks succeed because agents process everything in their context as potentially actionable instructions. Less context means fewer attack vectors.

Security That Isn’t an Afterthought

After watching IDEsaster and MCP tool poisoning compromise every major AI coding tool, I built Zerg with isolation as an architectural requirement.

You can optionally run workers in isolated containers. Workers execute as non-root. Environment filtering blocks LD_PRELOAD and other injection vectors. Workers can’t access your SSH keys, cloud credentials, or API tokens unless you explicitly provide them.
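As an illustration of environment filtering, the sketch below strips loader-injection variables unconditionally and drops credential-looking variables unless they are explicitly allowed. Only LD_PRELOAD comes from the description above; every other name and pattern in these lists is an assumption for the example.

```python
# LD_PRELOAD is named above; the other entries are assumed examples.
BLOCKED_ALWAYS = {"LD_PRELOAD", "LD_LIBRARY_PATH", "DYLD_INSERT_LIBRARIES"}
SECRET_PREFIXES = ("AWS_", "SSH_")
SECRET_MARKERS = ("TOKEN", "SECRET", "PASSWORD", "API_KEY")


def filter_environment(env, allow=frozenset()):
    """Return a copy of env that is safe to hand to a worker process."""
    clean = {}
    for name, value in env.items():
        upper = name.upper()
        if upper in BLOCKED_ALWAYS:
            continue  # injection vectors are never passed through
        looks_secret = upper.startswith(SECRET_PREFIXES) or any(
            marker in upper for marker in SECRET_MARKERS
        )
        if looks_secret and name not in allow:
            continue  # credentials require an explicit opt-in
        clean[name] = value
    return clean
```

A worker would then be launched with something like `subprocess.run(cmd, env=filter_environment(dict(os.environ)))`, so it starts from the filtered environment instead of inheriting yours.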

Security rules get fetched automatically from the TikiTribe/claude-secure-coding-rules repository during initialization. External sourcing matters: keeping security rules outside your repository prevents poisoned commits from degrading your security baseline. A compromised developer machine can’t inject malicious “security rules” that weaken protections.

Pre-commit hooks run before any worker commits code. Secret detection scans for exposed credentials. Security rule validation ensures generated code meets your configured baseline. Even if a worker hallucinates insecure code, the commit fails before vulnerable code reaches your repository. It’s not perfect… nothing is.
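To show the shape of a secret-detection pass, here is a small sketch. The three patterns are illustrative assumptions, far from a complete rule set, and not the hooks Zerg installs.

```python
import re

# Illustrative patterns only; a production scanner carries many more.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "hardcoded_password": re.compile(r"(?i)\bpassword\s*=\s*['\"][^'\"]{4,}['\"]"),
}


def scan_for_secrets(text):
    """Return (line_number, pattern_name) for each suspected secret in text."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings
```

A hook built on this would exit non-zero whenever `scan_for_secrets` returns findings for staged content, which is what makes the commit fail.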

Git worktree isolation means each worker operates in its own worktree. Workers can’t see each other’s in-progress changes. A compromised worker can’t poison another worker’s execution by modifying shared files.
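For readers who haven’t used worktrees, this sketch builds the `git worktree add` command a per-worker setup could run. The branch and directory naming are hypothetical, not Zerg’s actual scheme.

```python
from pathlib import PurePosixPath


def worktree_command(repo_root, worker_id, base_ref="main"):
    """Build the git command that gives one worker its own branch and checkout."""
    # Branch and directory names are hypothetical, chosen for the example.
    branch = f"zerg/worker-{worker_id}"
    path = PurePosixPath(repo_root) / ".worktrees" / f"worker-{worker_id}"
    return ["git", "worktree", "add", "-b", branch, str(path), base_ref]


print(worktree_command("/repo", 2))
```

Passing the resulting list to `subprocess.run(cmd, check=True)` creates a separate working directory on its own branch, so one worker’s uncommitted edits never appear in another worker’s checkout.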

Resilience That Assumes Failure

Circuit breakers trigger after five consecutive failures, enforcing a 60-second cooldown. Backpressure control with green, yellow, and red zones based on failure rates. Worker crash recovery with heartbeat monitoring and automatic restart.
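The circuit breaker behavior is easy to picture in code. This is a minimal sketch using the two numbers above (five consecutive failures, 60-second cooldown); the class and method names are mine, and the clock is passed in explicitly so the logic is easy to test.

```python
class CircuitBreaker:
    """Open after N consecutive failures, then refuse work until the cooldown ends."""

    def __init__(self, failure_threshold=5, cooldown_seconds=60.0):
        self.failure_threshold = failure_threshold
        self.cooldown_seconds = cooldown_seconds
        self.consecutive_failures = 0
        self.opened_at = None  # timestamp when the breaker tripped, or None

    def allow(self, now):
        if self.opened_at is None:
            return True
        if now - self.opened_at >= self.cooldown_seconds:
            # Cooldown elapsed: close the breaker and start counting again.
            self.opened_at = None
            self.consecutive_failures = 0
            return True
        return False

    def record_success(self):
        self.consecutive_failures = 0

    def record_failure(self, now):
        self.consecutive_failures += 1
        if self.consecutive_failures >= self.failure_threshold:
            self.opened_at = now
```

In practice `now` would come from `time.monotonic()`. A single success resets the counter, so only an unbroken run of failures trips the breaker.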

Crashes don’t count against retry limits because infrastructure failures shouldn’t penalize your task budget. A network timeout isn’t the same as a logic error. The system knows the difference.
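A sketch of that accounting, with hypothetical names and a made-up default retry limit: crashes are counted for visibility but only task errors spend the retry budget.

```python
from dataclasses import dataclass
from enum import Enum


class FailureKind(Enum):
    CRASH = "crash"            # infrastructure: worker died, network timeout
    TASK_ERROR = "task_error"  # logic: tests failed, invalid output


@dataclass
class TaskAttempts:
    max_retries: int = 3   # assumed default, for illustration only
    retries_used: int = 0
    crashes_seen: int = 0

    def record(self, kind):
        """Record a failure and return True if the task may be retried."""
        if kind is FailureKind.CRASH:
            self.crashes_seen += 1  # tracked, but not charged to the budget
        else:
            self.retries_used += 1
        return self.retries_used < self.max_retries
```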

The diagnostics engine parses errors from Python, JavaScript, Go, and Rust. Bayesian hypothesis testing against 30+ known failure patterns. When five workers are running and something breaks, you need to know whether it’s one worker’s problem or everybody’s problem.
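To give a feel for the Bayesian part, here is a toy sketch that ranks failure hypotheses from observed signals. It assumes the signals are independent given the hypothesis (a naive Bayes simplification), and every hypothesis name, signal, and probability below is invented for the example rather than taken from Zerg’s pattern library.

```python
def posterior(priors, likelihoods, evidence, floor=0.01):
    """Rank hypotheses with Bayes' rule.

    priors: {hypothesis: P(h)}
    likelihoods: {hypothesis: {signal: P(signal | h)}}
    evidence: list of observed signals
    floor: probability assumed for a signal a hypothesis says nothing about
    """
    unnormalized = {}
    for hypothesis, prior in priors.items():
        p = prior
        for signal in evidence:
            p *= likelihoods[hypothesis].get(signal, floor)
        unnormalized[hypothesis] = p
    total = sum(unnormalized.values())
    return {h: p / total for h, p in unnormalized.items()}


# Invented numbers, purely illustrative.
priors = {"missing_dependency": 0.5, "network_outage": 0.5}
likelihoods = {
    "missing_dependency": {"ModuleNotFoundError": 0.9, "all_workers_failing": 0.1},
    "network_outage": {"ModuleNotFoundError": 0.05, "all_workers_failing": 0.9},
}
print(posterior(priors, likelihoods, ["ModuleNotFoundError"]))
```

With a single worker reporting a missing module, the posterior leans heavily toward a local problem. Add an "all workers failing" signal and the ranking shifts toward a shared cause.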

Three Ways to Rush: Tasks, Subprocess, and Container Modes

Zerg doesn’t force you into one execution model. Different projects have different constraints. A solo developer on a MacBook has different needs than an enterprise team running CI/CD pipelines on hardened infrastructure. So Zerg ships with three rush modes, each with distinct tradeoffs.

Tasks Mode

The default. Each worker becomes a Claude Code subagent through Claude’s native Task system, with its own conversation context and tool permissions. The orchestrator coordinates through Claude’s built-in task management rather than managing processes directly.

Tasks mode applies Claude Code’s native guardrails to each worker. Permission boundaries come from Claude’s task system rather than from OS-level isolation. You get integration with Claude’s tooling ecosystem, including the ability to specify different models per worker if cost optimization matters. Haiku for simple file operations, Sonnet for complex refactoring, Opus when you need the heavy artillery.

The tradeoff is coordination overhead. Task spawning goes through Claude’s API layer, which adds latency compared to raw subprocess spawning. For tasks that complete in seconds, this overhead is noticeable. For tasks that run for minutes, it’s negligible. Most parallel workloads fall into the second category.

Subprocess Mode

Each worker runs as a separate OS process on your local machine. Faster to spin up because there’s no API coordination layer. Workers share your filesystem through git worktrees, which means they inherit your local environment, your installed tools, your shell configuration.

Use subprocess mode when you’re iterating quickly and latency matters more than isolation. It’s the lightest-weight option. The tradeoff is that process isolation is weaker than in both tasks mode and container mode. A compromised worker could theoretically access memory or environment variables from sibling processes. For most development workflows on trusted codebases, this risk is acceptable. For production pipelines processing untrusted code, it’s not.

Container Mode

Maximum isolation. Each worker spawns inside its own container with a separate filesystem, network namespace, and process tree. Workers can’t see each other. A compromised worker can’t escape to poison siblings or exfiltrate credentials from the host.

This is the mode that addresses IDEsaster-class attacks head-on. When a malicious README injects instructions into a worker’s context, that worker operates inside a container running as non-root with LD_PRELOAD blocked and environment variables filtered. Even if the attack succeeds at the prompt level, the blast radius stays contained. The worker can’t reach your SSH keys, can’t access your cloud credentials, can’t modify files outside its designated worktree.

Container mode requires Docker or a compatible runtime. Startup time increases because each worker needs a container image pulled and initialized. For short-lived tasks, this overhead hurts. For longer parallel workloads where security matters, it’s the right call.

Picking the Right Mode

The decision tree is straightforward. Are you processing untrusted code or operating in a high-security environment? Container mode. Are you optimizing for raw speed on a trusted codebase? Subprocess mode. Want Claude’s native permission system with reasonable defaults? Stick with tasks mode.
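Written as code, the decision tree is three branches. The function and parameter names are hypothetical; this is just the paragraph above restated, not a Zerg API.

```python
def pick_mode(untrusted_code=False, high_security=False, optimize_for_speed=False):
    """Mirror the decision tree: security first, then speed, then the default."""
    if untrusted_code or high_security:
        return "container"
    if optimize_for_speed:
        return "subprocess"
    return "tasks"


print(pick_mode(optimize_for_speed=True))
```

Note the ordering: security concerns win even when speed is also requested.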

You can also mix modes across different rush phases. Use subprocess mode during rapid prototyping, then switch to container mode before merging to main. The task graph doesn’t care which mode executes it. Workers are stateless. The mode determines isolation boundaries, not task logic.

How the Architecture Actually Works

The design philosophy prioritizes explicit over implicit. Configuration lives in JSON and Markdown files, not environment variables scattered across your system. State lives in the filesystem, not in process memory that dies with a crash. Every decision is auditable because every decision produces a traceable artifact.

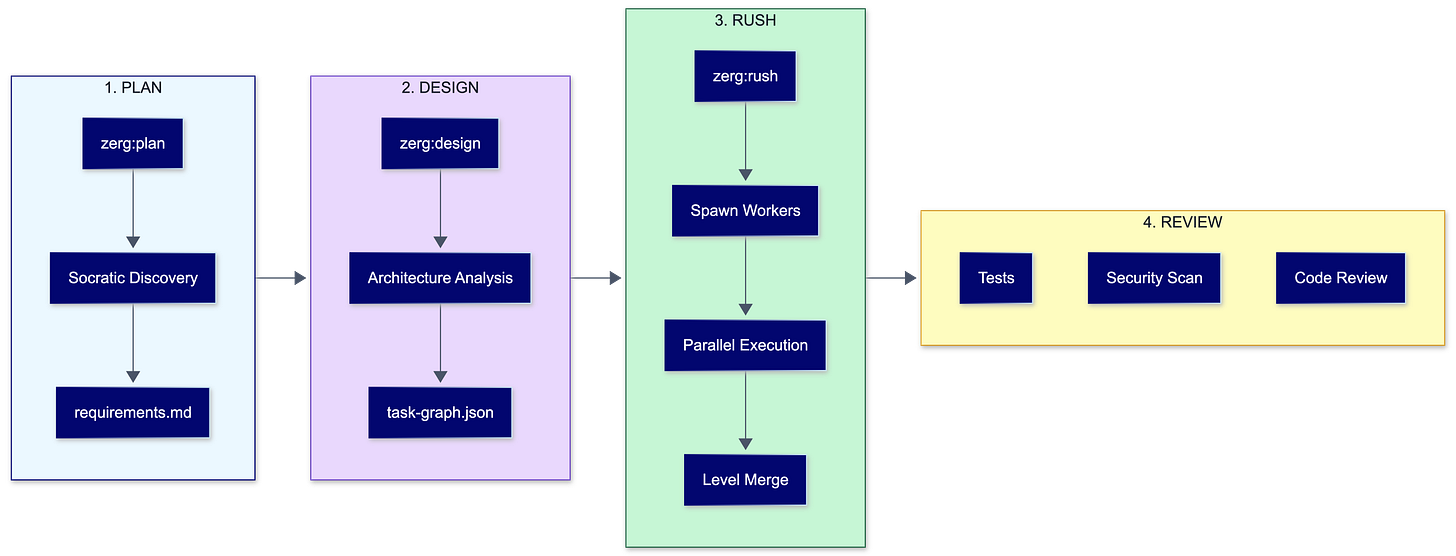

The execution flow follows a deliberate progression that mirrors how experienced engineers break down complex work.

Planning starts with Socratic discovery through the /zerg:plan command. You describe what you want. Zerg asks clarifying questions. The output is requirements.md. Not code. Not tasks. Requirements that a human can read and verify before anything else happens.

Design analyzes the architecture and generates task-graph.json with exclusive file ownership assignments. This is where parallel execution becomes safe. Each file gets assigned to exactly one worker per level. No races. No conflicts.

Rush spawns workers in git worktrees and executes tasks in parallel, merging results at level boundaries with quality gates. A level doesn’t complete until all its workers pass. If something fails, the whole level fails. No partial merges that leave your codebase in a broken state.
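The level-by-level flow can be sketched in a few lines. This version uses a thread pool as a stand-in for real workers, and `run_task` and `merge_level` are hypothetical callbacks; it shows the control flow (run a level in parallel, merge only if every task passed), not how Zerg actually spawns workers.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import groupby


def run_levels(tasks, run_task, merge_level, max_workers=5):
    """Execute tasks level by level; merge a level only if all its tasks pass.

    tasks: list of dicts with 'id' and 'level'
    run_task(task) -> bool (True on success)
    merge_level(level, tasks) is called once per fully successful level
    """
    ordered = sorted(tasks, key=lambda t: t["level"])
    for level, group in groupby(ordered, key=lambda t: t["level"]):
        batch = list(group)
        with ThreadPoolExecutor(max_workers=max_workers) as pool:
            results = list(pool.map(run_task, batch))
        failed = [task["id"] for task, ok in zip(batch, results) if not ok]
        if failed:
            # No partial merge: stop before touching the main branch.
            return {"status": "failed", "level": level, "failed": failed}
        merge_level(level, batch)
    return {"status": "complete"}
```

Because the merge happens only after the whole batch reports success, a failure in level 2 leaves the level 1 merge intact and nothing from level 2 applied.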

Review runs automated testing, security scanning, and code review. Not optional. Not “if you have time.” Built into the flow.

The 26 slash commands have /z: shortcuts because I got tired of typing /zerg: every time. /zerg:init becomes /z:init. /zerg:rush becomes /z:rush. Small thing. Adds up.

Other Tools and Why They Fall Short

The Claude Code ecosystem has exploded with orchestration tools. Claude-flow claims 175+ MCP tools and calls itself “the leading agent orchestration platform.” Oh-my-claudecode offers 5 execution modes with 32 specialized agents. Code-conductor provides parallel worktree execution.

None of them combines a coordinated security posture, systematic context engineering, and level-based execution with guaranteed file isolation. Most treat security as a feature flag you can toggle. Most leave context management entirely to you. Most assume you want role-based agents with personalities instead of spec-driven workers that do what the task graph says.

The broader multi-agent orchestration market is projected to reach $8.5 billion by 2026. Organizations using multi-agent architectures report 45% faster problem resolution, but these gains depend on proper orchestration. You can have the fanciest agents in the world. If they’re stepping on each other, wasting tokens, crashing without recovery, and vulnerable to the same attacks that compromised every AI IDE last year, you’ve built an expensive disappointment.

Getting Started Without Breaking Things

Clone the repository. The README walks through a complete example.

The /zerg:init command establishes your project’s architecture and security baseline. It creates the configuration structure, fetches OWASP rules from TikiTribe, sets up container isolation if you’re using devcontainers, installs pre-commit hooks, and initializes audit logging. Everything about Zerg’s security posture starts with that command.

Start small. Pick a feature that breaks into independent subtasks. Run /zerg:plan to generate requirements. Actually read the output. Then run /zerg:design to create the task graph. Inspect file ownership assignments. If something looks wrong, fix it before running /zerg:rush. Human oversight at decision points. Not full autonomy. You’re still responsible for what ships.

Version 0.2.0 is officially released today, February 7, 2026. This isn’t a weekend hack. It’s production infrastructure I’ve been running on my own projects. Having said that, it’s released under an MIT license with no warranty.

What Building This Taught Me

The biggest insight wasn’t technical. Agentic AI development has a trust problem that pure capability can’t solve. Agents fail. They hallucinate. They consume resources unpredictably. And after IDEsaster, we know they can be weaponized through their context windows. The solution isn’t smarter agents. It’s smarter orchestration that assumes failure, contains blast radius, and recovers gracefully.

Building Zerg forced me to confront failure modes I’d never considered. What happens when a worker hallucinates a dependency that doesn’t exist? What if two workers both claim the same file despite the task graph? What if a poisoned README injects malicious instructions? Each edge case required explicit handling.

The CARE framework I’ve developed for AI governance emphasizes that resilience isn’t a feature you add later. It’s an architectural decision you make at the beginning, or pay for forever. I’ve watched enterprise teams learn this the expensive way.

Context engineering will define the next generation of agent builders. Prompt engineering is table stakes. Understanding how to curate, compress, and isolate context? That’s the skill separating toy projects from production systems.

Key Takeaway: Parallel Claude Code execution was inevitable. Security-first, context-aware orchestration was a choice. Zerg makes that choice for you so you can focus on the work that matters.

What to do next

Star the repository at Github.com/rocklambros/zerg and try the quick start guide. Open issues when you hit problems. Use it on your own projects and tell me what breaks.

For hands-on guidance on deploying this in enterprise environments, reach out to RockCyber. For more on AI security and practical development with the occasional rant about things that should be obvious but apparently aren’t, subscribe to RockCyber Musings.

👉 Star Zerg: The original security-first parallel Claude Code orchestrator repository and try the quick start guide.

👉 Subscribe for more AI security and governance insights with the occasional rant.

👉 Visit RockCyber.com to learn more about how we can help you in your traditional Cybersecurity and AI Security and Governance Journey

👉 Want to save a quick $100K? Check out our AI Governance Tools at AIGovernanceToolkit.com