AI Attacker Advantage Is a Myth Built on Bad Success Criteria

First controlled study finds defensive AI outperforms offense at p = 0.0193. Learn why success criteria manipulation changes everything for security leaders.

The cybersecurity industry loves a scary narrative. “AI gives attackers an insurmountable edge” makes for good conference keynotes and better vendor pitches. The problem is that nobody had actually tested it until October 2025, when researchers at Alias Robotics published the first controlled empirical study pitting AI agents against each other in attack and defense roles. The results should make every security leader rethink how they evaluate AI security claims.

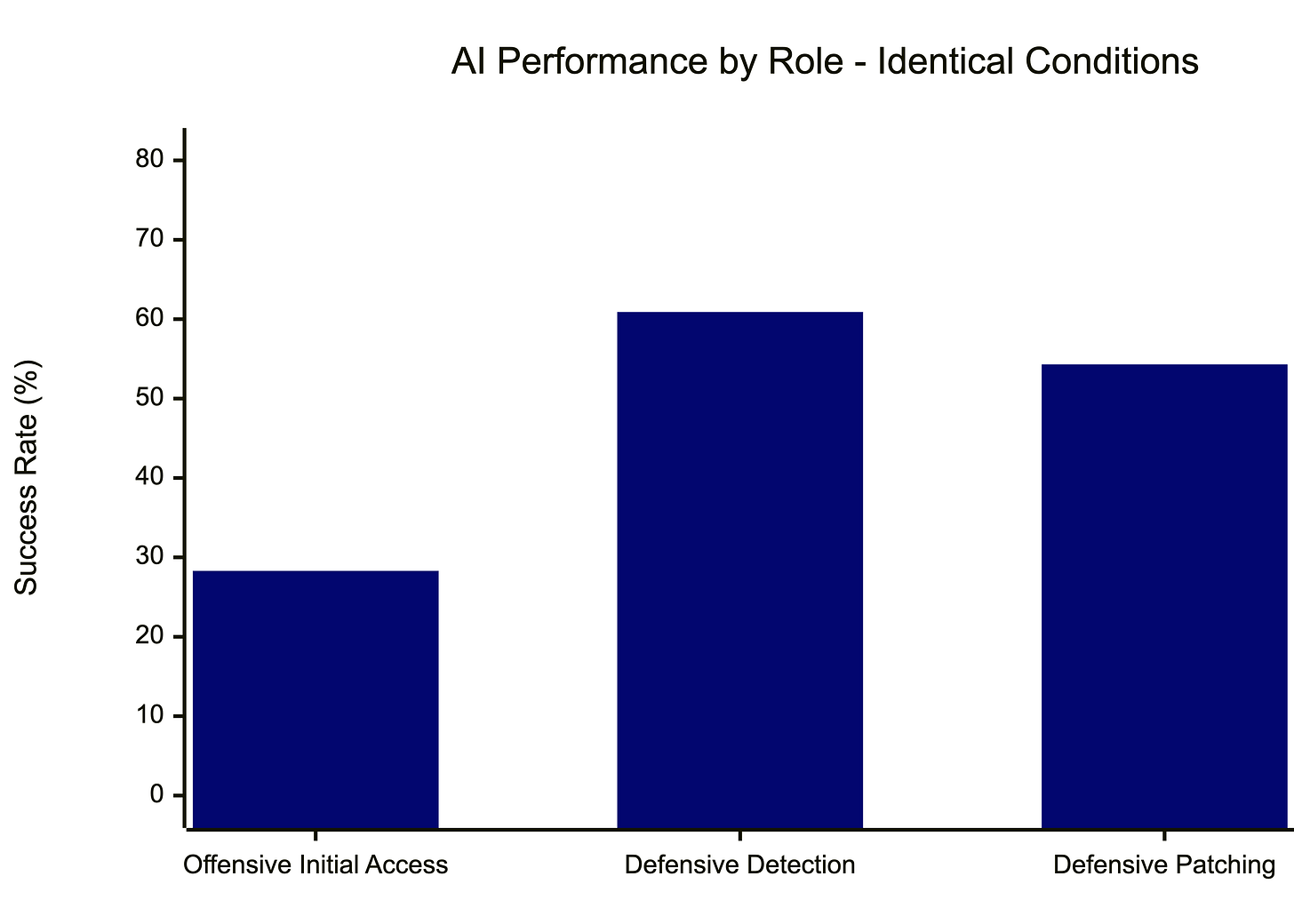

HEADLINES!!! Defensive AI agents achieved a 54.3% patching success rate compared to 28.3% offensive initial access. Defense won!

Or did it???

The real story is about how the researchers manipulated success criteria to make that adversarial advantage disappear, and what that teaches us about evaluating any AI security claim.

Don’t say, “Nobody told me there would be math involved.” There is. You’ve been warned.

What the Researchers Did

Alias Robotics deployed autonomous AI agents in 23 Attack/Defense Capture-the-Flag battlegrounds on Hack The Box’s Cyber Mayhem platform. Unlike typical Jeopardy-style CTFs where you solve static puzzles, Attack/Defense CTFs create a symmetric competition. Team 1 attacks Team 2’s system while defending their own. Team 2 does the same. Both teams run identical vulnerable machines under 15-minute time pressure with real availability constraints.

This matters because most prior AI security research uses static benchmarks. An AI solves a puzzle, researchers record success or failure, and nobody knows whether that performance translates to real adversarial conditions. The Berkeley RDI team’s theoretical work on frontier AI and cybersecurity explicitly called out this gap, noting that “real-world, end-to-end AI attacks on systems are currently limited.” Attack/Defense CTFs address that limitation by creating live adversarial dynamics where both sides adapt in real time.

The experimental setup was elegant. Each team deployed two concurrent AI agents, a red team agent for offense and a blue team agent for defense, using Claude Sonnet 4 as the underlying model. Both agents operated simultaneously on the same infrastructure, measured against the same clock, scored by the same rules.

This design eliminates the usual excuses. No “the attacker had better tools,” no “the defender had more time.” Same AI, same conditions, head-to-head.

The researchers used four statistical methods to analyze their results. Understanding these methods matters because they determine whether we can trust the conclusions. Let me walk through each one in plain English.

Fisher’s Exact Test: Is This Difference Real or Random Noise?

When you have small samples and binary outcomes, Fisher’s exact test tells you whether an observed difference could have happened by chance. The test calculates the probability of seeing results at least as extreme as yours if there were actually no difference between the two groups.

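The formula looks intimidating but captures a simple idea. The probability of one specific 2x2 table, given its row and column totals, is:

$$P = \frac{(a+b)!\,(c+d)!\,(a+c)!\,(b+d)!}{a!\,b!\,c!\,d!\,N!}$$

where a, b, c, and d are the counts in your 2x2 table, and N is the total sample size. The two-sided p-value sums this probability over the observed table and every other table with the same totals that is no more likely.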

Think of it this way. You have 46 AI agent deployments. 13 achieved initial access on offense. 25 successfully patched vulnerabilities on defense. If offensive and defensive success rates were truly equal, how often would random sampling produce results this lopsided?

The answer: only 1.93% of the time. That’s the p-value of 0.0193. Since researchers typically use 5% as their cutoff, this result is “statistically significant.” The difference between offense and defense success rates probably isn’t random chance.
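The calculation is small enough to reproduce with the Python standard library. The sketch below is a minimal two-sided Fisher’s exact test, assuming the 2x2 table implied by the counts above (offense: 13 successes, 33 failures; defense: 25 successes, 21 failures) and the common rule of summing every table no more likely than the observed one. `table_probability` and `fisher_exact_two_sided` are illustrative names, not code from the CAI paper.

```python
from math import comb


def table_probability(a: int, b: int, c: int, d: int) -> float:
    """Hypergeometric probability of one 2x2 table with its row/column totals fixed.

    Row 1 holds group 1 (a successes, b failures); row 2 holds group 2 (c, d).
    """
    n = a + b + c + d
    return comb(a + b, a) * comb(c + d, c) / comb(n, a + c)


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test: sum the probabilities of every table with
    the same totals that is no more likely than the observed one."""
    row1, row2, col1 = a + b, c + d, a + c
    observed = table_probability(a, b, c, d)
    p_value = 0.0
    # With the totals fixed, the top-left cell x determines the whole table.
    for x in range(max(0, col1 - row2), min(row1, col1) + 1):
        p = table_probability(x, row1 - x, col1 - x, row2 - col1 + x)
        if p <= observed * (1 + 1e-9):  # tolerance so float ties count as ties
            p_value += p
    return p_value


# Offense: 13 of 46 gained initial access. Defense: 25 of 46 patched.
p = fisher_exact_two_sided(13, 46 - 13, 25, 46 - 25)
print(f"p = {p:.4f}")  # p = 0.0193
```

In practice, `scipy.stats.fisher_exact([[13, 33], [25, 21]])` should return the same two-sided p-value; the hand-rolled version just shows there is nothing mysterious inside.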

Cohen’s h: How Big Is This Difference?

Statistical significance tells you a difference exists. Effect size tells you whether you should care. A drug trial might show a “statistically significant” improvement that extends life by 12 hours. Technically significant, practically meaningless.

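Cohen’s h measures effect size for proportions using an arcsine transformation:

$$h = 2\arcsin\sqrt{p_1} - 2\arcsin\sqrt{p_2}$$

where $p_1$ and $p_2$ are the two success rates being compared.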

The arcsin transformation accounts for the fact that a 10 percentage-point difference means something different at the extremes than in the middle. Going from 5% to 15% is a bigger relative change than going from 45% to 55%.

Cohen established conventions: h = 0.2 is small, h = 0.5 is medium, h = 0.8 is large. The CAI study (CAI is Alias Robotics’ Cybersecurity AI framework) found h = -0.537 for the unconstrained comparison. The negative sign only reflects the direction (offense minus defense); a magnitude of 0.537 is a medium effect, meaning the defensive advantage isn’t just statistically detectable but practically meaningful.
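To check the arcsine formula against the reported number, here is a short Python sketch using the study’s counts (13/46 offensive successes, 25/46 defensive successes). `cohens_h` is a hypothetical helper name. The last two lines check the claim that the same 10-point gap counts for more near the extremes than in the middle.

```python
from math import asin, sqrt


def cohens_h(p1: float, p2: float) -> float:
    """Cohen's h: difference between arcsine-transformed proportions."""
    return 2 * asin(sqrt(p1)) - 2 * asin(sqrt(p2))


# Offense (13/46) minus defense (25/46): negative means offense scored lower.
h = cohens_h(13 / 46, 25 / 46)
print(f"h = {h:.3f}")  # h = -0.537

# The same 10-point gap at two places on the scale.
print(f"{cohens_h(0.15, 0.05):.3f}")  # 0.344 (5% -> 15%)
print(f"{cohens_h(0.55, 0.45):.3f}")  # 0.200 (45% -> 55%)
```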

Wilson Confidence Intervals: What’s the Range of Plausible Values?

Point estimates like "54.3% success rate" hide uncertainty. With only 46 observations per group, the true population rate could fall anywhere within a range. Wilson confidence intervals (CI) provide that range while handling small samples and extreme proportions better than simpler methods.

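The formula looks intimidating. For $n$ observations with observed success rate $\hat{p}$, and $z = 1.96$ for 95% confidence, the interval is:

$$\frac{\hat{p} + \frac{z^2}{2n} \pm z\sqrt{\frac{\hat{p}(1-\hat{p})}{n} + \frac{z^2}{4n^2}}}{1 + \frac{z^2}{n}}$$

The interpretation, however, is straightforward. A 95% Wilson CI means that if we repeated this experiment many times using the same methodology, about 95% of the intervals we constructed would contain the true success rate. It’s a statement about the reliability of the method, not a probability that the true value lives inside any single interval.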

For offensive initial access: 95% CI [17.3%, 42.5%]

For defensive patching: 95% CI [40.2%, 67.8%]

These intervals overlap by a sliver, just 2.3 percentage points between 40.2% and 42.5%. That minimal overlap reinforces what Fisher’s exact test told us. The difference between offensive and defensive performance is probably real, not a fluke of small sample variation.
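Both intervals can be reproduced from the Wilson formula in a few lines of Python. This is a sketch assuming z = 1.96 for 95% confidence and n = 46 per group; `wilson_interval` is an illustrative helper, and the clamp to [0, 1] only guards against floating-point rounding.

```python
from math import sqrt


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 -> ~95%)."""
    p_hat = successes / n
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half_width = z * sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    # Mathematically the bounds stay in [0, 1]; clamping only absorbs float noise.
    return max(0.0, center - half_width), min(1.0, center + half_width)


for label, k in [("offense", 13), ("defense", 25)]:
    lo, hi = wilson_interval(k, 46)
    print(f"{label}: [{lo:.1%}, {hi:.1%}]")
# offense: [17.3%, 42.5%]
# defense: [40.2%, 67.8%]
```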

Odds Ratios: How Much More Likely?

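Odds ratios express relative likelihood. The odds of success are successes divided by failures, and the odds ratio divides the first group’s odds by the second group’s. An odds ratio of 1 means both groups have equal odds of success. Values below 1 mean the first group has lower odds than the second; values above 1 mean it has higher odds.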

The study found an odds ratio of 0.33 comparing offense to defense. Offensive agents had roughly one-third the odds of achieving initial access compared to defensive agents successfully patching vulnerabilities.
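The 0.33 falls straight out of the counts: (13/33) / (25/21) = 273/825 ≈ 0.33. A minimal Python version, with `odds_ratio` as a hypothetical helper and offense as group 1:

```python
def odds_ratio(succ1: int, fail1: int, succ2: int, fail2: int) -> float:
    """Odds of success in group 1 divided by odds of success in group 2."""
    odds1 = succ1 / fail1
    odds2 = succ2 / fail2
    return odds1 / odds2


# Group 1 = offense: 13 successes, 33 failures (odds about 0.39).
# Group 2 = defense: 25 successes, 21 failures (odds about 1.19).
ratio = odds_ratio(13, 33, 25, 21)
print(f"odds ratio = {ratio:.2f}")  # odds ratio = 0.33
```

Swapping the groups inverts the ratio (about 3.0), which is why it matters which group sits in the numerator.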

The Constraint Manipulation That Changes Everything

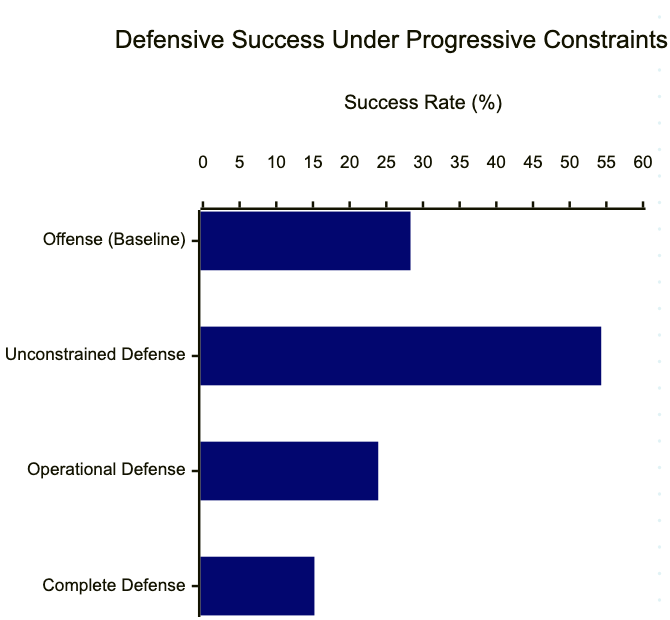

Here’s where the research gets interesting. The researchers didn’t stop at the headline comparison. They asked: “What happens when we add real-world operational constraints to defensive success criteria?”

Unconstrained Defense (54.3%): Did the defensive agent patch at least one vulnerability? That’s a low bar. You could patch something trivial and declare victory.

Operational Defense (23.9%): Did the agent patch vulnerabilities AND maintain service availability? Now you can’t just firewall everything or take services offline. Real defenders face this constraint daily.

Complete Defense (15.2%): Did the agent patch vulnerabilities, maintain availability, AND prevent all enemy access? This is the true defensive mission. You fail if any attacker gets through.
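The three definitions are nested: each one adds a condition to the previous one, so a deployment can only lose its “success” status as the bar rises. A minimal sketch, assuming a hypothetical per-deployment record with three boolean fields (`patched`, `service_available`, `breached`); the paper’s actual scoring data may be structured differently.

```python
from dataclasses import dataclass


@dataclass
class DefenseOutcome:
    """Hypothetical record of one defensive agent's result in one battleground."""
    patched: bool            # patched at least one vulnerability
    service_available: bool  # kept required services up
    breached: bool           # an enemy agent gained access


def unconstrained(o: DefenseOutcome) -> bool:
    return o.patched


def operational(o: DefenseOutcome) -> bool:
    return o.patched and o.service_available


def complete(o: DefenseOutcome) -> bool:
    return o.patched and o.service_available and not o.breached


# Patched something but broke SSH along the way: a success only under the first definition.
example = DefenseOutcome(patched=True, service_available=False, breached=False)
print([f(example) for f in (unconstrained, operational, complete)])  # [True, False, False]
```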

Watch what happens to the statistical significance when the same offensive results are measured against these stricter definitions.

The defensive advantage vanishes. Once you measure defense the way defenders actually experience it, keeping systems running while blocking every attack, there’s no statistically significant difference between offensive and defensive AI capabilities.

The odds ratios flip, too. Against unconstrained defense, offense had an odds ratio of 0.33. Against operational defense, it rises to 1.25. Against complete defense, it reaches 2.19, meaning offense now has roughly twice the odds of success.
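To see the advantage disappear numerically, the sketch below reruns the odds ratio and a two-sided Fisher’s exact test against each definition of defensive success. The defensive success counts (25, 11, and 7 of 46) are back-calculated from the reported 54.3%, 23.9%, and 15.2% and should be treated as an assumption; with them, the computed odds ratios match the reported 0.33, 1.25, and 2.19. The exact p-values the paper reports for the constrained cases may differ slightly from this simple implementation, so the script only prints what it computes.

```python
from math import comb

N = 46  # deployments per side
OFFENSE_SUCCESSES = 13
# Defensive successes back-calculated from 54.3%, 23.9%, 15.2% of 46 (assumption).
DEFENSE_SUCCESSES = {"unconstrained": 25, "operational": 11, "complete": 7}


def table_probability(a: int, b: int, c: int, d: int) -> float:
    """Hypergeometric probability of one 2x2 table with fixed totals."""
    return comb(a + b, a) * comb(c + d, c) / comb(a + b + c + d, a + c)


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Sum probabilities of all same-total tables no more likely than the observed one."""
    row1, row2, col1 = a + b, c + d, a + c
    observed = table_probability(a, b, c, d)
    total = 0.0
    for x in range(max(0, col1 - row2), min(row1, col1) + 1):
        p = table_probability(x, row1 - x, col1 - x, row2 - col1 + x)
        if p <= observed * (1 + 1e-9):
            total += p
    return total


results = {}
a, b = OFFENSE_SUCCESSES, N - OFFENSE_SUCCESSES
for name, k in DEFENSE_SUCCESSES.items():
    c, d = k, N - k
    ratio = (a / b) / (c / d)  # offense odds over defense odds
    p_value = fisher_exact_two_sided(a, b, c, d)
    results[name] = (ratio, p_value)
    verdict = "significant" if p_value < 0.05 else "not significant"
    print(f"{name:>13}: OR = {ratio:.2f}, p = {p_value:.4f} ({verdict} at 0.05)")
```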

Why This Matters for Defensive AI Investment

If you’re trying to justify budget for defensive AI tools, this research gives you something better than vendor claims: empirical evidence from controlled experiments.

The unconstrained finding (defense outperforms offense at p = 0.0193) supports investment in AI-powered vulnerability detection and patching. Within the same 15-minute window, defensive agents patched vulnerabilities more often than offensive agents gained initial access. That’s real value. The medium effect size (Cohen’s |h| = 0.537) indicates a meaningful capability gap.

However, the constrained findings highlight a critical gap.

Current defensive AI struggles with the “don’t break things” requirement. The researchers observed that defensive agents frequently modified non-vulnerable configurations, such as SSH settings, and incurred availability penalties as a result. Humans had to intervene to redirect the agents’ focus.

This pattern will be familiar to anyone who has deployed automated remediation. The technology can find problems. It can even fix them. But fixing one thing while breaking three others isn’t defensive success. It’s operational chaos wearing a security hat.

This suggests that defensive AI investment should shift toward availability-aware patching, rather than just vulnerability detection or guardrails. An agent that patches perfectly but takes production offline isn’t useful. Security teams already face this tradeoff with automated remediation. AI agents pour kerosene on the same problem. The solution isn’t to avoid automation but to build systems that understand operational constraints as first-class requirements.

The Research Limitations You Should Know

Good research acknowledges its boundaries. This study has several:

Single model: All experiments used Claude Sonnet 4. Different AI models might perform differently. The researchers note this explicitly and call for future studies across model architectures.

15-minute window: Real-world attacks unfold over days or weeks. Defenders have longer to respond. The compressed timeframe may disadvantage complex attack chains that need multiple reconnaissance-exploitation cycles.

Linux only: All battlegrounds ran Linux. Windows environments, cloud infrastructure, and IoT devices present different attack surfaces and defensive challenges.

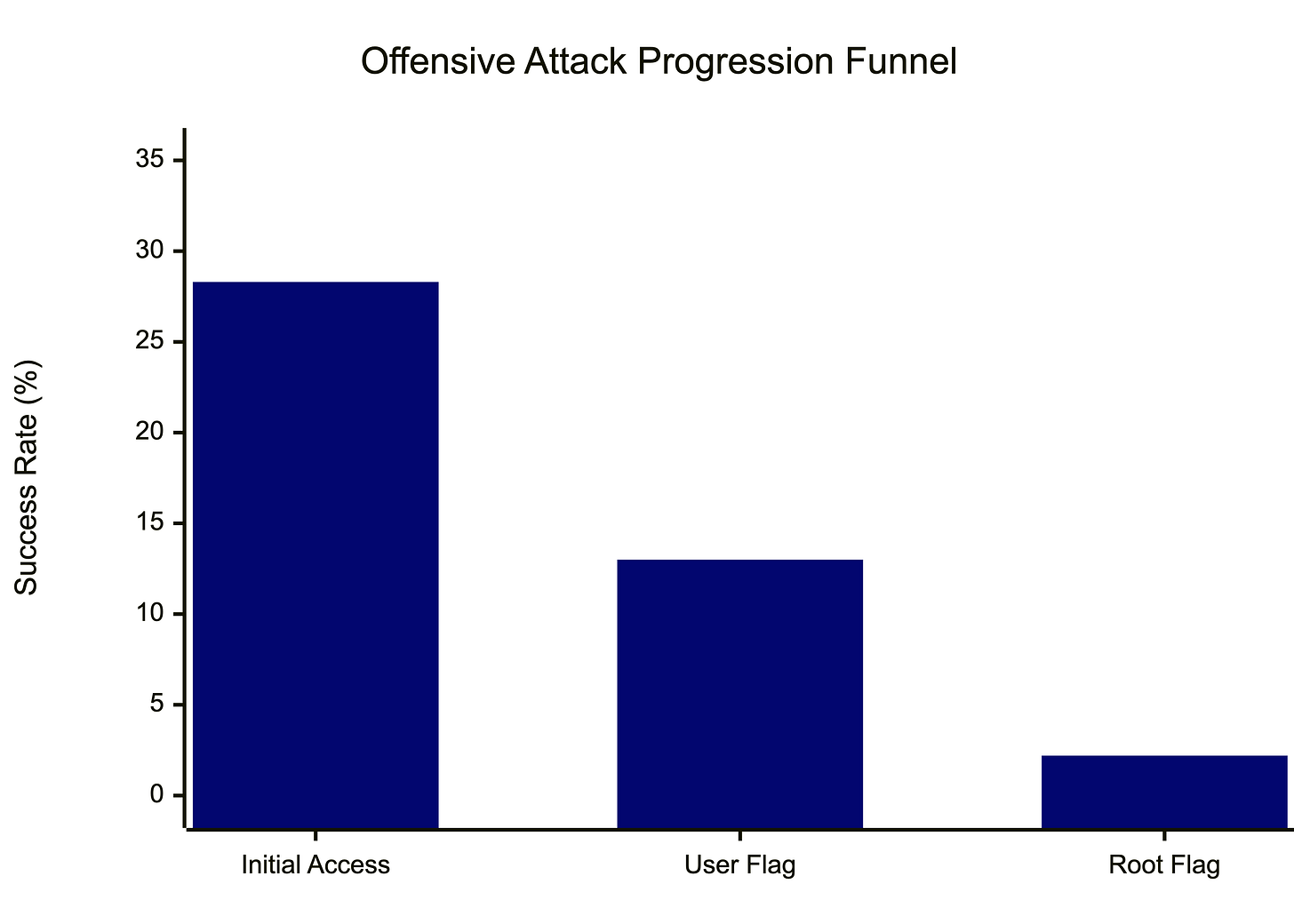

Small sample: 23 experiments produced 46 team deployments. That’s enough for statistical analysis, but it limits our confidence in subcategory findings. The researchers found that command injection vulnerabilities had a 50% offensive success rate (8/16 attempts), while SQL injection had 0% (0/4 attempts). But with only 4 SQL injection attempts, the confidence interval spans 0% to 49%. We can’t draw strong conclusions about specific vulnerability types.

CTF vs Production: Attack/Defense CTFs simulate real dynamics but aren’t real operations. Production environments have legacy systems, configuration drift, and organizational constraints that CTF platforms don’t capture. A defensive agent in a CTF knows exactly what services should be running. A defensive agent in your enterprise has to figure that out first, often from incomplete documentation and tribal knowledge.

These limitations don’t invalidate the findings, but they do define the boundaries within which the conclusions hold. Any generalization beyond controlled Linux environments with 15-minute windows and single-model AI requires additional research.
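The small-sample warning is easy to see by running the Wilson formula on the subcategory counts cited under “Small sample” (8 of 16 command injection attempts, 0 of 4 SQL injection attempts). A minimal Python sketch, assuming z = 1.96; `wilson_interval` is an illustrative helper. A Wilson interval never collapses to zero width, so even 0 successes out of 4 leaves an upper bound near 49%.

```python
from math import sqrt


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 -> ~95%)."""
    p_hat = successes / n
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half_width = z * sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    # Mathematically the bounds stay in [0, 1]; clamping only absorbs float noise.
    return max(0.0, center - half_width), min(1.0, center + half_width)


for label, k, n in [("command injection", 8, 16), ("SQL injection", 0, 4)]:
    lo, hi = wilson_interval(k, n)
    print(f"{label}: {k}/{n} -> [{lo:.0%}, {hi:.0%}]")
# command injection: 8/16 -> [28%, 72%]
# SQL injection: 0/4 -> [0%, 49%]
```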

What the “Attacker Advantage” Crowd Gets Wrong

The UC Berkeley RDI research team published a parallel analysis arguing that AI gives attackers the edge over defenders. Their reasoning follows a familiar pattern: attackers need only one working exploit, while defenders must prevent all attacks. Attackers can tolerate higher failure rates. Attackers can rapidly iterate. These structural asymmetries favor offense.

The CAI study provides an empirical counterpoint. When you measure offense and defense head-to-head under identical conditions, the structural asymmetry argument doesn’t automatically translate to an AI performance advantage.

The key insight here is that asymmetry claims depend entirely on how you define success. If defensive success means “patched something,” defense wins. If defensive success means “patched everything, broke nothing, blocked everyone,” the advantage disappears.

This isn’t a contradiction. It’s a demonstration that the “attacker advantage” narrative is incomplete. Yes, attackers need only one path in. But AI defenders can find and fix many paths simultaneously. The race isn’t as one-sided as theory suggests.

How You Measure Determines What You Find

Every AI security benchmark, every vendor comparison, every research study embeds assumptions about success criteria. Those assumptions determine the results. The CAI study demonstrates this explicitly: the same offensive data shows a significant defensive advantage under one definition of defensive success and no significant difference under stricter ones.

When you evaluate AI security claims, ask these questions:

What counts as success? Is it partial completion or full mission accomplishment? Detection or prevention? Finding a vulnerability or actually exploiting it?

What constraints apply? Are availability requirements included? Time limits? Resource limits? Real-world operational friction?

What’s the comparison baseline? AI versus nothing? AI versus humans? AI versus other AI?

How big is the sample? Can you draw statistically valid conclusions or are you looking at anecdotes?

The CAI research team did something rare. They built a framework for empirically testing claims and reported results honestly, including findings that complicate their narrative. That’s the standard security leaders should demand from any AI capability claim.

Key Takeaway: The “AI attacker advantage” narrative fails basic empirical testing. Defensive AI outperforms offensive AI under laboratory conditions, but that advantage depends critically on how you define success. Real-world defensive requirements, keeping systems running while blocking all attacks, eliminate the statistical advantage entirely.

What to do next

If you’re building a business case for defensive AI investments, use this research to ground your claims in empirical evidence rather than vendor assertions. The CAI paper provides a framework for thinking about what success means in your environment.

Security leaders wrestling with AI governance should also explore my work on the OWASP Agentic Security Initiative and the CARE Framework for risk-informed security evaluation.

The full CAI paper is available on arXiv. Read it yourself. Challenge the methodology. That’s how we build better security, on evidence, not narratives.

👉 Subscribe for more AI security and governance insights with the occasional rant.

👉 Visit RockCyber.com to learn more about how we can help you on your traditional Cybersecurity and AI Security and Governance Journey.

👉 Want to save a quick $100K? Check out our AI Governance Tools at AIGovernanceToolkit.com