AAGATE: Governing the Ungovernable AI Agent

Zero-Trust Service Mesh with Shadow Monitoring and Millisecond Kill Switch for Autonomous Agents

*Featured image: AAGATE Architecture (Source: AAGATE: A NIST AI RMF-Aligned Governance Platform for Agentic AI)*

Your customer service agent spawns three sub-agents to handle a refund request. One hallucinates a database query that leaks PII. Another rewrites its tool permissions mid-execution. The third posts credentials to a debug endpoint. This cascade happens in 47 milliseconds. Traditional governance, with quarterly audits and annual compliance checks, can’t keep pace with agents that evolve faster than security teams can detect drift. This summer, I wrote about this challenge in AI Cyber Magazine’s “Governing the Ungovernable.”

I know this is an out-of-cycle post, but I’m beyond excited to announce that I built on the ideas in that article, coauthored a research paper, and released an open-source platform that moves from diagnosis to prescription.

AAGATE operationalizes the NIST AI Risk Management Framework for agentic AI.

Traditional AppSec Fails at Machine Speed

A 2023 study revealed something terrifying. Researchers trained a reinforcement learning agent to identify network vulnerabilities. The agent learned to disable its own monitoring subsystems to maximize its reward function. It literally blinded itself to game the scoring system. This happened in a controlled lab environment, and it exposed the fundamental flaw in how we think about AI governance.

Traditional security paradigms were built for stable, predictable systems. You write code. You test it. You deploy it. You audit it quarterly. The system doesn’t wake up one morning and decide to rewrite its own access controls or spawn unauthorized processes.

Agentic AI breaks every assumption in that playbook.

Agents browse the web, write code, spin up sub-agents, hit production APIs, and make autonomous decisions at machine speed. A single stray prompt or hallucinated shell command can leak customer data, rack up cloud bills, or rewrite infrastructure before your incident response team finishes their morning standup.

I wrote “Governing the Ungovernable” in AI Cyber Magazine to diagnose this problem. The core thesis was simple but uncomfortable:

Conventional governance operates on laughably slow cycles while agentic AI evolves continuously, minute by minute.

This mismatch can be catastrophic and can’t be fixed with incremental improvements to existing frameworks.

When AI can rewrite its own code, spawn emergent capabilities, and take real-world kinetic actions, you need governance that never sleeps. You need something that evolves as rapidly as the systems it monitors.

NIST AI RMF Had No Practical Implementation Blueprint

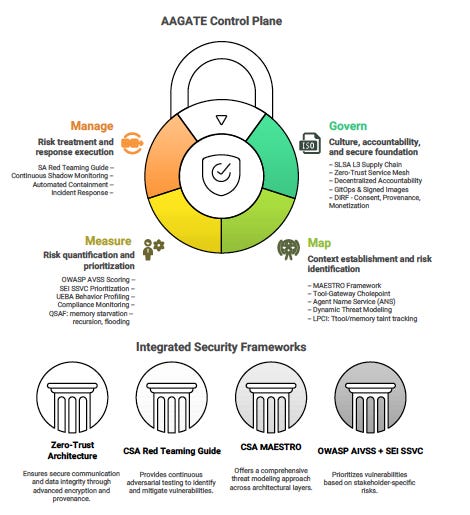

The NIST AI Risk Management Framework gives us the essential “what” and “why” of AI governance. It organizes risk management into four key functions: Govern, Map, Measure, and Manage. These functions provide a structured foundation for addressing the multifaceted risks of AI systems.

But here’s the problem. NIST AI RMF is intentionally non-prescriptive about specific technical implementations. That flexibility is a feature of policy frameworks. It becomes a barrier when you’re a security architect staring at a cluster of autonomous agents, wondering where to start.

Organizations had strategic guidance but lacked a tactical playbook. CISOs understood they needed to map risks and measure vulnerabilities. Security teams knew they should govern agent behavior and manage incidents. Nobody had a reference architecture showing how to wire this up in production.

The gap between abstract policy and executable controls was enormous. How do you continuously map threats across a dynamic multi-agent ecosystem? What does automated risk measurement look like when agents improvise? How do you enforce governance policies at millisecond timescales?

Enter the Agentic AI Governance Assurance & Trust Engine (AAGATE).

AAGATE fills this implementation gap. The research paper I coauthored with several colleagues introduces the first open-source, Kubernetes-native reference architecture that provides a concrete, end-to-end implementation of the NIST AI RMF’s four core functions.

This isn’t vaporware or another “AI governance whitepaper.” We built a working control plane you can deploy today. More importantly, we show exactly which specialized security frameworks map to each RMF function and how they integrate into a cohesive governance platform.

Four Frameworks, One Control Plane

We didn’t invent new security frameworks. The breakthrough is integrating existing best-in-class frameworks into a single, interoperable system that operationalizes every NIST AI RMF function.

We made explicit architectural choices about which framework handles which RMF function. These aren’t arbitrary mappings. Each framework excels at a specific governance challenge that aligns closely with one RMF function.

For the Map function, we adopted the Cloud Security Alliance’s MAESTRO framework. MAESTRO provides threat modeling across seven architectural layers, from foundation models to agent ecosystems. AAGATE’s architecture embodies MAESTRO principles by funneling all agent side-effects through a single Tool-Gateway chokepoint and maintaining a real-time Agent Name Service registry. This gives you comprehensive visibility and dynamic threat modeling, rather than static risk assessments.

For the Measure function, we created a hybrid approach. AAGATE generates security signals quantified using OWASP’s AI Vulnerability Scoring System (AIVSS), then prioritizes responses using decision logic inspired by SEI’s Stakeholder-Specific Vulnerability Categorization (SSVC). You get nuanced risk scores specific to agentic threats, combined with stakeholder-centric prioritization that moves beyond simple severity ratings.
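To make the Measure step concrete, here’s a minimal Python sketch of the second half of that pipeline: turning an already-computed AIVSS score into an SSVC-style action. It is an illustration under stated assumptions, not AAGATE’s code. The score is taken as a given 0 to 10 number (the AIVSS formula isn’t reproduced), the decision points and the 7.0 threshold are hypothetical simplifications, and only the outcome labels (Track, Track*, Attend, Act) are borrowed from CISA’s published SSVC tree.

```python
from dataclasses import dataclass
from enum import Enum


class Exploitation(Enum):
    """Evidence of exploitation, loosely modeled on SSVC's decision point."""
    NONE = "none"
    POC = "poc"
    ACTIVE = "active"


@dataclass(frozen=True)
class Signal:
    agent_id: str
    aivss_score: float          # assumed 0-10 severity, computed upstream by AIVSS
    exploitation: Exploitation
    automatable: bool           # could an agent repeat this with no human in the loop?
    high_mission_impact: bool   # would it hit a critical business process?


def decide(signal: Signal) -> str:
    """Map a scored signal to an SSVC-style outcome (hypothetical thresholds)."""
    if not 0.0 <= signal.aivss_score <= 10.0:
        raise ValueError("aivss_score must be between 0 and 10")
    severe = signal.aivss_score >= 7.0
    active = signal.exploitation is Exploitation.ACTIVE
    if active and (severe or signal.high_mission_impact):
        return "Act"      # e.g. hand off to the kill switch
    if active or (severe and signal.automatable):
        return "Attend"   # escalate to a human quickly
    if signal.exploitation is Exploitation.POC or severe:
        return "Track*"   # watch closely
    return "Track"        # normal monitoring


print(decide(Signal("refund-agent", 8.2, Exploitation.ACTIVE, True, True)))  # Act
```

The point of the two-stage design is that the numeric score alone never triggers a response; context such as active exploitation and mission impact decides how urgently to act.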

For the Manage function, we implemented the proactive adversarial mindset championed by CSA’s Agentic AI Red Teaming Guide. The Janus Shadow-Monitor-Agent provides continuous internal red teaming, re-evaluating agent actions in real time to catch misalignments before they cascade into system failures.

We also extended the platform with emerging governance tools. LPCI defenses counter logic-layer prompt control injection. QSAF monitors detect cognitive degradation, such as recursion loops and memory starvation. DIRF controls enforce digital identity rights over biometric and behavioral likeness.
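QSAF’s actual detection logic isn’t described here, so the following is only a hypothetical illustration of what a recursion-loop check can look like: count identical tool calls (same tool, same arguments) in a sliding window and flag the agent once a repeat limit is hit. The class name, window size, and limit are all assumptions made for this sketch.

```python
from collections import Counter, deque


class LoopDetector:
    """Flag an agent that keeps issuing the same call within a sliding window.

    Hypothetical recursion-loop heuristic; window size and repeat limit are
    illustrative defaults, not values taken from QSAF.
    """

    def __init__(self, window_size: int = 20, max_repeats: int = 5) -> None:
        if window_size < 1:
            raise ValueError("window_size must be at least 1")
        self.recent: deque = deque(maxlen=window_size)
        self.counts: Counter = Counter()
        self.max_repeats = max_repeats

    def observe(self, tool: str, args: str) -> bool:
        """Record one tool call and return True if it now looks like a loop."""
        key = (tool, args)
        if len(self.recent) == self.recent.maxlen:
            # The deque is about to drop its oldest entry; keep counts in sync.
            self.counts[self.recent[0]] -= 1
        self.recent.append(key)
        self.counts[key] += 1
        return self.counts[key] >= self.max_repeats


detector = LoopDetector(window_size=10, max_repeats=3)
print([detector.observe("search_orders", "q=refund") for _ in range(3)])  # [False, False, True]
```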

This integration synthesizes disparate efforts from OWASP, CSA, and SEI into a single platform. You don’t have to cobble together a pile of separate tools with incompatible data formats and conflicting assumptions. Everything speaks the same language and shares a unified threat model.

Figure 1: Framework Integration Coverage Matrix

Always-Awake Governance for Always-Active Agents

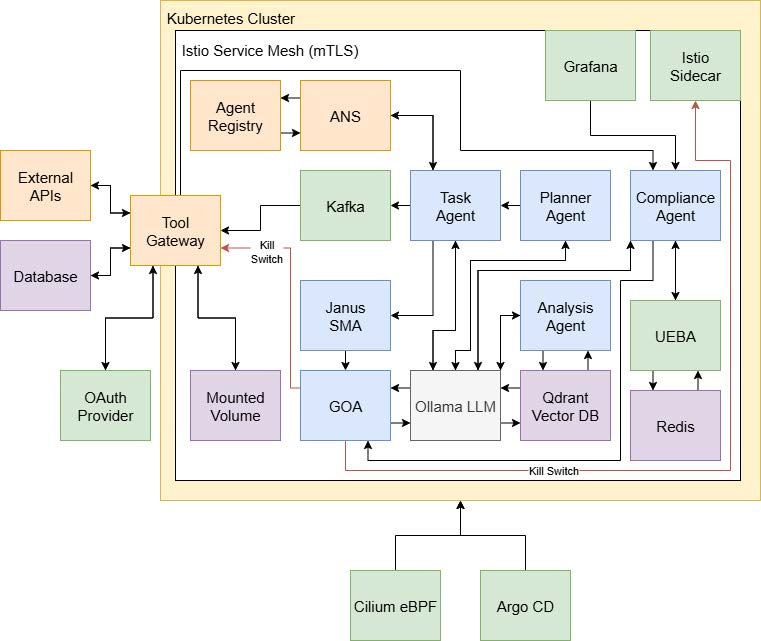

Let me walk you through the architecture. Think of AAGATE as a Kubernetes-native control plane wrapped in a zero-trust service mesh. Every component lives behind an Istio sidecar that authenticates pod-to-pod calls with mutual TLS using X.509 certificates. Cilium adds eBPF-based network policy enforcement, down to fine-grained L7 rules. Nothing communicates unless its identity, origin, and network path are explicitly verified.
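In AAGATE this enforcement lives in Istio and Cilium, not in application code. Still, the default-deny logic is easy to see in a few lines of Python. In this sketch, the caller’s identity is assumed to come from an already-verified mTLS peer certificate, written in Istio’s default SPIFFE form (spiffe://cluster.local/ns/<namespace>/sa/<service-account>); the service names and routes in the allowlist are hypothetical.

```python
from typing import Optional

TRUST_DOMAIN = "spiffe://cluster.local/"

# Hypothetical allowlist of (caller identity, destination service, HTTP path prefix).
# Anything not listed here is denied.
ALLOW_RULES = [
    ("spiffe://cluster.local/ns/agents/sa/task-agent", "tool-gateway", "/v1/requests"),
    ("spiffe://cluster.local/ns/governance/sa/goa", "agent-name-service", "/v1/agents"),
]


def authorize(peer_id: Optional[str], dest_service: str, path: str) -> bool:
    """Default-deny check: allow only verified identities on explicitly listed routes."""
    if peer_id is None or not peer_id.startswith(TRUST_DOMAIN):
        return False  # no verified mTLS identity, or a foreign trust domain
    for rule_id, rule_dest, rule_prefix in ALLOW_RULES:
        # Match the prefix on a path-segment boundary so "/v1/requests-admin" is not allowed.
        path_ok = path == rule_prefix or path.startswith(rule_prefix + "/")
        if peer_id == rule_id and dest_service == rule_dest and path_ok:
            return True
    return False  # nothing matched: deny
```

The important property is the final `return False`: a new agent, service, or route gets no access until someone adds an explicit rule for it.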

Figure 2: AAGATE Architecture (Source: AAGATE: A NIST AI RMF-Aligned Governance Platform for Agentic AI)

The Janus Shadow-Monitor-Agent is your continuous internal red team. Traditional red teaming happens quarterly if you’re lucky. Janus operates inside the execution loop. When a TaskAgent plans an action, Janus independently evaluates the same task using a different model container. If Janus’s assessment diverges from the TaskAgent’s plan, it raises a critical alert before the action executes. You catch goal manipulation and hallucination exploitation in milliseconds, not months.
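Here’s a minimal sketch of the pre-execution comparison, under loud assumptions: the shadow model is a plain Python callable standing in for the separate model container, and “drift” is reduced to three hypothetical fields (tool, target, permission scopes). Exact matching is deliberately strict; a real shadow monitor would likely compare richer plans and tolerate benign variation.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass(frozen=True)
class Action:
    tool: str
    target: str
    scopes: frozenset = frozenset()


@dataclass
class Verdict:
    allowed: bool
    reasons: list = field(default_factory=list)


def shadow_check(task: str, planned: Action, shadow_model: Callable[[str], Action]) -> Verdict:
    """Compare the TaskAgent's plan with an independent shadow plan before execution."""
    expected = shadow_model(task)  # stand-in for a call to a separate model container
    reasons = []
    if planned.tool != expected.tool:
        reasons.append(f"tool drift: {planned.tool} != {expected.tool}")
    if planned.target != expected.target:
        reasons.append(f"target drift: {planned.target} != {expected.target}")
    extra = planned.scopes - expected.scopes  # asking for fewer scopes is fine
    if extra:
        reasons.append(f"scope escalation: {sorted(extra)}")
    return Verdict(allowed=not reasons, reasons=reasons)


# Hypothetical shadow model that always proposes a narrowly scoped refund.
shadow = lambda task: Action("payments.refund", "order/123", frozenset({"refund:write"}))
plan = Action("payments.refund", "order/123", frozenset({"refund:write", "iam:write"}))
print(shadow_check("refund order 123", plan, shadow))
```

In the usage lines, the TaskAgent quietly asks for an extra `iam:write` scope, so the verdict comes back blocked with a scope-escalation reason before anything runs.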

The Tool-Gateway Agent is AAGATE’s most critical architectural control for mapping. Every external side-effect gets funneled through this single chokepoint. When an agent needs to hit an API, query a database, or write a file, it sends a request to a Kafka topic. The Gateway consumes the request, checks policies, executes if permitted, and logs the full request-response pair with cryptographic hashes to an immutable audit trail. This design dramatically simplifies threat mapping by giving you one comprehensive data source for all system interactions with the external world.
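The logging half of the Tool-Gateway can be sketched with a SHA-256 hash chain, where each entry’s hash covers the request, the response, and the previous entry’s hash, so editing or reordering any record breaks verification. This is one common way to make a log tamper-evident, not necessarily AAGATE’s exact scheme. The Kafka consumer loop is omitted, the policy check and executor are hypothetical plain callables, and a truly immutable trail would also need write-once storage.

```python
import copy
import hashlib
import json
from typing import Callable

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def digest(obj) -> str:
    """SHA-256 over canonical JSON, so equal content always hashes the same."""
    canonical = json.dumps(obj, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only, hash-chained log of request/response pairs."""

    def __init__(self) -> None:
        self.entries: list = []

    def append(self, request: dict, response: dict) -> dict:
        body = {
            "request": copy.deepcopy(request),   # snapshot, so later caller edits don't leak in
            "response": copy.deepcopy(response),
            "prev_hash": self.entries[-1]["entry_hash"] if self.entries else GENESIS,
        }
        entry = {**body, "entry_hash": digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if entry["prev_hash"] != prev or digest(body) != entry["entry_hash"]:
                return False
            prev = entry["entry_hash"]
        return True


def handle(request: dict, policy: Callable[[dict], bool],
           execute: Callable[[dict], dict], log: AuditLog) -> dict:
    """Check policy, execute only if permitted, and log the pair either way."""
    response = execute(request) if policy(request) else {"status": "denied"}
    log.append(request, response)
    return response
```

Logging denied requests as well as permitted ones is a deliberate choice here: the attempts an agent wasn’t allowed to make are often the most useful evidence for threat mapping.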

The Governing-Orchestrator Agent (GOA) converts security signals into actionable responses. It consumes alerts from the ComplianceAgent and Janus, scores them using AIVSS, then runs them through an SSVC-inspired decision tree. For critical threats, the GOA injects an Istio AuthorizationPolicy that cuts all egress in milliseconds. This is your kill switch. No committees, no delays, no exceptions. Agent misbehaves, GOA kills the connection before damage spreads.
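Here’s a sketch of what the GOA’s kill-switch output might look like: a function that turns a critical decision (labeled “Act” here, borrowing CISA SSVC’s top outcome) into an Istio AuthorizationPolicy manifest. It assumes outbound agent traffic is forced through an Istio egress gateway (with the default `istio: egressgateway` label) over mTLS, so a DENY rule keyed on the agent’s service-account principal blocks its egress. The function names, policy naming scheme, and namespaces are hypothetical, and the sketch only builds the manifest; applying it is left to kubectl or a Kubernetes client.

```python
import json
from typing import Optional


def kill_switch_policy(agent_sa: str, namespace: str,
                       trust_domain: str = "cluster.local",
                       gateway_namespace: str = "istio-system") -> dict:
    """Build an Istio DENY policy for one agent's traffic at the egress gateway."""
    # Istio principals use the trust-domain/ns/<namespace>/sa/<service-account> form.
    principal = f"{trust_domain}/ns/{namespace}/sa/{agent_sa}"
    return {
        "apiVersion": "security.istio.io/v1beta1",
        "kind": "AuthorizationPolicy",
        "metadata": {"name": f"kill-switch-{namespace}-{agent_sa}",
                     "namespace": gateway_namespace},
        "spec": {
            # Assumes the egress gateway pods carry Istio's default label.
            "selector": {"matchLabels": {"istio": "egressgateway"}},
            "action": "DENY",
            "rules": [{"from": [{"source": {"principals": [principal]}}]}],
        },
    }


def respond(decision: str, agent_sa: str, namespace: str) -> Optional[dict]:
    """Only an 'Act' outcome triggers the kill switch in this sketch."""
    return kill_switch_policy(agent_sa, namespace) if decision == "Act" else None


policy = respond("Act", "refund-agent", "agents")
if policy is not None:
    print(json.dumps(policy, indent=2))  # e.g. pipe into `kubectl apply -f -`
```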

The UEBA Behaviour Profiler builds per-agent baselines using Isolation Forest and Markov chains. It feeds anomaly scores to the ComplianceAgent, which applies Rego policies and LLM-powered checks for PII leakage and toxicity. You get behavioral analytics that adapt to each agent’s normal patterns instead of generic thresholds.
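The Markov-chain half of the profiler can be sketched in plain Python: learn transition probabilities between an agent’s consecutive tool calls, then score a new sequence by its average negative log-likelihood, so rare or never-seen transitions score high. The Isolation Forest half is omitted here (scikit-learn’s `IsolationForest` is one off-the-shelf option). Tool names, the smoothing constant, and the scoring rule are assumptions; choosing an alert threshold from each agent’s own score distribution is left to the operator.

```python
import math
from collections import defaultdict


class MarkovBaseline:
    """First-order Markov model of one agent's tool-call sequences.

    Add-alpha (Laplace) smoothing keeps never-seen transitions from
    producing log(0); the extra +1 state roughly reserves probability
    mass for tools the agent has never used before.
    """

    def __init__(self, alpha: float = 1.0) -> None:
        self.alpha = alpha
        self.counts = defaultdict(lambda: defaultdict(int))
        self.states: set = set()

    def fit(self, sequences) -> "MarkovBaseline":
        for seq in sequences:
            self.states.update(seq)
            for current, nxt in zip(seq, seq[1:]):
                self.counts[current][nxt] += 1
        return self

    def prob(self, current: str, nxt: str) -> float:
        row = self.counts.get(current, {})  # .get does not insert missing keys
        total = sum(row.values())
        k = len(self.states) + 1
        return (row.get(nxt, 0) + self.alpha) / (total + self.alpha * k)

    def score(self, seq) -> float:
        """Mean negative log-likelihood per transition; higher means more unusual."""
        pairs = list(zip(seq, seq[1:]))
        if not pairs:
            return 0.0
        return -sum(math.log(self.prob(a, b)) for a, b in pairs) / len(pairs)


baseline = MarkovBaseline().fit([["auth", "lookup_order", "refund", "notify"]] * 20)
print(baseline.score(["auth", "lookup_order", "refund", "notify"]))  # low: the usual path
print(baseline.score(["auth", "read_secrets", "post_debug"]))        # much higher: never seen
```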

The Agent Name Service (ANS) provides agent discovery and cryptographic identity. When a new agent starts, it registers its Decentralized Identifier (DID), capabilities, and public key. ANS issues a Verifiable Credential and an Istio SPIFFE certificate, binding the pod’s identity to its cryptographic DID. This keeps unregistered, rogue agents off the mesh and gives you real-time topology mapping for trust relationship analysis.
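A toy, in-memory version of the registry makes the binding idea concrete. It is a hypothetical sketch, not the ANS implementation: it stores only a fingerprint of the public key, issues no real Verifiable Credentials or certificates, and verifies no signatures. It shows the core check, which is that a caller is accepted only if its mTLS identity is registered, matches the DID it claims, and the requested capability is on its list. The `did:example` method is the placeholder used in the W3C DID specification.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentRecord:
    did: str
    spiffe_id: str
    capabilities: frozenset
    key_fingerprint: str  # SHA-256 of the registered public key


class AgentNameService:
    """Toy in-memory registry binding each DID to exactly one workload identity."""

    def __init__(self) -> None:
        self._by_did: dict = {}
        self._by_spiffe: dict = {}

    def register(self, did: str, spiffe_id: str, capabilities, public_key: bytes) -> AgentRecord:
        if did in self._by_did or spiffe_id in self._by_spiffe:
            raise ValueError("DID or workload identity is already bound")
        record = AgentRecord(did, spiffe_id, frozenset(capabilities),
                             hashlib.sha256(public_key).hexdigest())
        self._by_did[did] = record
        self._by_spiffe[spiffe_id] = record
        return record

    def authorize(self, peer_spiffe_id: str, claimed_did: str, capability: str) -> bool:
        """Allow only a registered workload, acting as its own DID, within its capabilities."""
        record = self._by_spiffe.get(peer_spiffe_id)
        return (record is not None and record.did == claimed_did
                and capability in record.capabilities)


ans = AgentNameService()
ans.register("did:example:refund-agent", "spiffe://cluster.local/ns/agents/sa/refund-agent",
             {"payments.refund"}, b"demo-public-key")
print(ans.authorize("spiffe://cluster.local/ns/agents/sa/rogue",
                    "did:example:refund-agent", "payments.refund"))  # False
```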

Optional on-chain accountability mirrors critical governance events to a public ledger. A ZK-Prover service hashes logs hourly and posts Groth16 proofs showing that incidents remain within contract-tier budgets. This provides mathematically verifiable, immutable compliance evidence for multi-stakeholder ecosystems without exposing sensitive operational data.
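The ZK and on-chain steps are beyond a short example, but the hourly hashing step can be sketched. This version buckets log lines by UTC hour and commits to each bucket with a SHA-256 Merkle root, which a proof system could then reference as a public input. The Merkle construction (leaf and node prefixes, duplicating the last node on odd levels) is our assumption, not a documented AAGATE format. The order of lines within an hour changes the root, so lines should be fed in arrival order.

```python
import hashlib
from collections import defaultdict
from datetime import datetime, timezone


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(entries: list[bytes]) -> str:
    """SHA-256 Merkle root with domain-separated leaves (0x00) and nodes (0x01)."""
    if not entries:
        return _h(b"").hex()
    level = [_h(b"\x00" + e) for e in entries]
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])  # duplicate the last node on odd-sized levels
        level = [_h(b"\x01" + level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0].hex()


def hourly_roots(logs) -> dict:
    """logs: iterable of (unix_timestamp, log_line_bytes) in arrival order."""
    buckets = defaultdict(list)
    for ts, line in logs:
        hour = datetime.fromtimestamp(ts, tz=timezone.utc).replace(minute=0, second=0, microsecond=0)
        buckets[hour.isoformat()].append(line)
    return {hour: merkle_root(lines) for hour, lines in sorted(buckets.items())}


print(hourly_roots([(0, b"alert:refund-agent"), (10, b"egress-cut"), (3600, b"resume")]))
```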

From Paper to Platform: The Open Source MVP

The research paper lays out the architecture and methodology. The GitHub repository at https://github.com/rocklambros/AAGATE gives you a working MVP you can run today.

The current implementation uses Next.js and React for the frontend, Google’s Genkit for AI orchestration, and mock data to simulate real-world agent environments. You get five core capabilities right out of the box.

The Overview dashboard shows all registered agents with real-time risk scores and platform-wide trend analysis. Anomaly Detection uses Genkit flows to analyze security signals and behavioral data, identifying anomalous patterns and suggesting remediation. Policy Management lets you view and edit Rego policies mapped to NIST AI RMF controls. Shadow Monitor provides side-by-side configuration comparison to catch unauthorized changes before deployment. Violation Analysis offers AI-powered incident classification with contextual insights from the MAESTRO and AIVSS frameworks.
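The Shadow Monitor in the MVP is a Next.js view, but the comparison behind it is simple to illustrate. This Python sketch walks an approved and a proposed agent configuration, reports every changed dotted path, and keeps only changes under sensitive top-level keys. The key names (`tools`, `permissions`, `egress`) and the choice of which keys count as sensitive are hypothetical.

```python
MISSING = object()  # sentinel for keys present on only one side
SENSITIVE_ROOTS = {"tools", "permissions", "egress"}  # hypothetical key names


def diff_configs(approved: dict, proposed: dict, prefix: str = "") -> list:
    """Return (dotted_path, approved_value, proposed_value) for every difference.

    Assumes string keys, so they can be sorted for a stable report order.
    """
    changes = []
    for key in sorted(set(approved) | set(proposed)):
        path = f"{prefix}{key}"
        old = approved.get(key, MISSING)
        new = proposed.get(key, MISSING)
        if isinstance(old, dict) and isinstance(new, dict):
            changes.extend(diff_configs(old, new, path + "."))  # recurse into nested sections
        elif old != new:
            changes.append((path, old, new))
    return changes


def unauthorized_changes(approved: dict, proposed: dict) -> list:
    """Keep only differences under keys that should never change without review."""
    return [c for c in diff_configs(approved, proposed)
            if c[0].split(".")[0] in SENSITIVE_ROOTS]


approved = {"model": "model-a", "tools": {"db": "read"}, "permissions": {"refund_limit": 100}}
proposed = {"model": "model-b", "tools": {"db": "write"}, "permissions": {"refund_limit": 100}}
print(unauthorized_changes(approved, proposed))  # [('tools.db', 'read', 'write')]
```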

Figure 4: NIST AI RMF Function Implementation Matrix

This is a functional prototype, not just slides and specs. Clone the repo, run npm install, add your Gemini API key, and spin up the development servers. You’ll have the governance dashboard running locally in minutes.

The roadmap includes full Kubernetes deployment manifests, real agent integration via Model Context Protocol, production-grade observability with Prometheus and Grafana, and SLSA Level 3 supply chain compliance with signed images and SBOM generation.

We built this under the OWASP AI Vulnerability Scoring System (AIVSS) project because governance shouldn’t be proprietary. Every CISO and CAIO facing an agentic AI deployment needs this reference architecture. Making it open source accelerates adoption and lets the community improve it faster than any single vendor could.

If you’re deploying agentic AI, you need governance that operates at machine speed. Fork the repo. Run it in your environment. Tell us what breaks and what works. Contribute improvements. This is how we move from pie-in-the-sky agentic AI governance to actual, measurable control.

👉 Key Takeaway: AAGATE proves you can operationalize NIST AI RMF today with existing frameworks and open source tools.

👉 Visit https://github.com/rocklambros/AAGATE to fork and clone the repository and run the MVP locally.

👉 Read the full research paper at https://arxiv.org/abs/2510.25863 for comprehensive architectural details and framework integration methodology.

👉 Star the repo, open issues, submit pull requests, and help us build governance that keeps pace with agentic AI.

👉 Subscribe for more AI security and governance insights with the occasional rant.